Import Data into Deep Network Designer

Note

Using Deep Network Designer to import data and train a network is not recommended.

To import and visualize training and validation data in Deep Network Designer, use the legacy syntax deepNetworkDesigner("-v1").

Import Data

In Deep Network Designer, you can import image classification data from an image datastore or a folder containing subfolders of images from each class. Select an import method based on the type of datastore you are using.

Import ImageDatastore Object | Import Any Other Datastore Object (Not Recommended for ImageDatastore) |

|---|---|

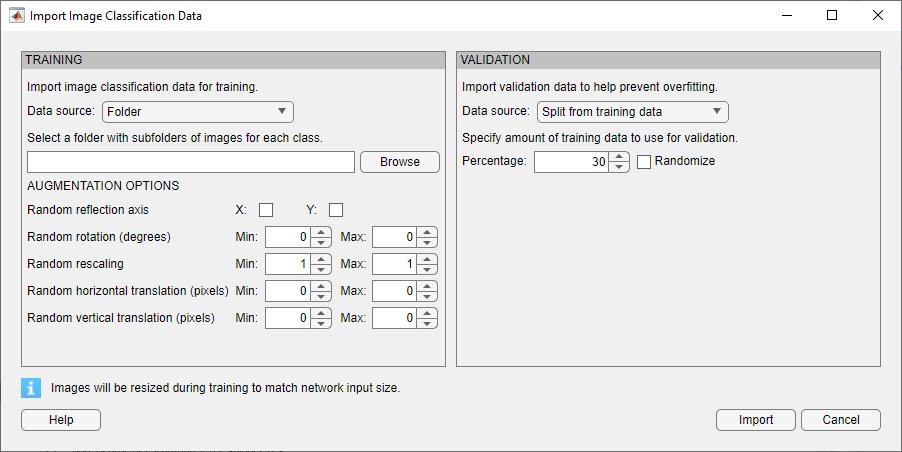

Select Import Data > Import Image Classification Data.

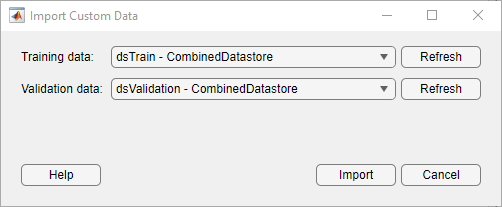

| Select Import Data > Import Custom Data.

|

Import Data by Task

| Task | Data Type | Data Import Method |
|---|---|---|
| Image classification | Folder with subfolders containing images for each class. The class labels are sourced from the subfolder names. | Select Import Data > Import Image Classification Data. |
| | For example, create an image datastore containing digits data. `dataFolder = fullfile(toolboxdir('nnet'),'nndemos','nndatasets','DigitDataset');` `imds = imageDatastore(dataFolder,'IncludeSubfolders',true,'LabelSource','foldernames');` | Select Import Data > Import Image Classification Data. |
| | For example, create an augmented image datastore containing digits data. `dataFolder = fullfile(toolboxdir('nnet'),'nndemos','nndatasets','DigitDataset');` `imds = imageDatastore(dataFolder,'IncludeSubfolders',true,'LabelSource','foldernames');` `imageAugmenter = imageDataAugmenter('RandRotation',[1,2]);` `augimds = augmentedImageDatastore([28 28],imds,'DataAugmentation',imageAugmenter);` `augimds = shuffle(augimds);` | Select Import Data > Import Custom Data. |
| Semantic segmentation | For example, combine an image datastore and a pixel label datastore. `dataFolder = fullfile(toolboxdir('vision'),'visiondata','triangleImages');` `imageDir = fullfile(dataFolder,'trainingImages');` `labelDir = fullfile(dataFolder,'trainingLabels');` `imds = imageDatastore(imageDir);` `classNames = ["triangle","background"];` `labelIDs = [255 0];` `pxds = pixelLabelDatastore(labelDir,classNames,labelIDs);` `cds = combine(imds,pxds);` | Select Import Data > Import Custom Data. |
| Image-to-image regression | For example, combine noisy input images and pristine output images to create data suitable for image-to-image regression. `dataFolder = fullfile(toolboxdir('nnet'),'nndemos','nndatasets','DigitDataset');` `imds = imageDatastore(dataFolder,'IncludeSubfolders',true,'LabelSource','foldernames');` `imds = transform(imds,@(x) rescale(x));` `imdsNoise = transform(imds,@(x) {imnoise(x,'Gaussian',0.2)});` `cds = combine(imdsNoise,imds);` `cds = shuffle(cds);` | Select Import Data > Import Custom Data. |
| Regression | Create data suitable for training regression networks by combining array datastore objects. `[XTrain,~,YTrain] = digitTrain4DArrayData;` `ads = arrayDatastore(XTrain,'IterationDimension',4,'OutputType','cell');` `adsAngles = arrayDatastore(YTrain,'OutputType','cell');` `cds = combine(ads,adsAngles);` | Select Import Data > Import Custom Data. |
| Sequences and time series | To input the sequence data from the datastore of predictors to a deep learning network, the mini-batches of the sequences must have the same length. You can use the padsequences function to pad the sequences. For example, pad the sequences to all be the same length as the longest sequence. `[XTrain,YTrain] = japaneseVowelsTrainData;` `XTrain = padsequences(XTrain,2);` `adsXTrain = arrayDatastore(XTrain,'IterationDimension',3);` `adsYTrain = arrayDatastore(YTrain);` `cdsTrain = combine(adsXTrain,adsYTrain);` To reduce the amount of padding, you can use a transform datastore and a helper function. For example, pad the sequences so that all the sequences in a mini-batch have the same length as the longest sequence in the mini-batch. You must also use the same mini-batch size in the training options. `[XTrain,TTrain] = japaneseVowelsTrainData;` `miniBatchSize = 27;` `adsXTrain = arrayDatastore(XTrain,'OutputType',"same",'ReadSize',miniBatchSize);` `adsTTrain = arrayDatastore(TTrain,'ReadSize',miniBatchSize);` `tdsXTrain = transform(adsXTrain,@padToLongest);` `cdsTrain = combine(tdsXTrain,adsTTrain);` `function data = padToLongest(data)` `sequence = padsequences(data,2,Direction="left");` `for n = 1:numel(data)` `data{n} = sequence(:,:,n);` `end` `end` You can also reduce the amount of padding by sorting your data from shortest to longest and reduce the impact of padding by specifying the padding direction. For more information about padding sequence data, see Sequence Padding and Truncation. You can also import sequence data using a custom datastore object. For an example showing how to create a custom sequence datastore, see Train Network Using Custom Mini-Batch Datastore for Sequence Data. | Select Import Data > Import Custom Data. |
| Other extended workflows (such as numeric feature input, out-of-memory data, image processing, and audio and speech processing) | For other extended workflows, use a suitable datastore object, such as a custom datastore. For example, create a denoising image datastore. `dataFolder = fullfile(toolboxdir('images'),'imdata');` `imds = imageDatastore(dataFolder,'FileExtensions',{'.jpg'});` `dnds = denoisingImageDatastore(imds,'PatchesPerImage',512,'PatchSize',50,'GaussianNoiseLevel',[0.01 0.1]);` For table array data, you must convert your data into a suitable datastore to train using Deep Network Designer. For example, start by converting your table into arrays containing the predictors and responses. Then, convert the arrays into datastore objects. | Select Import Data > Import Custom Data. |
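The table-to-datastore conversion described in the last row might look like the following minimal sketch. The table `tbl` and its variable names (`x1`, `x2`, `x3`, `y`) are hypothetical and exist only for illustration. The sketch also assumes that each read should return the predictors as a column vector, which may need adjusting for your network.

```matlab
% Hypothetical table with three numeric predictors and one numeric response.
tbl = table(rand(10,1),rand(10,1),rand(10,1),rand(10,1), ...
    'VariableNames',{'x1','x2','x3','y'});

% Convert the table into arrays containing the predictors and responses.
X = table2array(tbl(:,{'x1','x2','x3'}));   % 10-by-3, one observation per row
Y = tbl.y;                                  % 10-by-1

% Wrap each array in an array datastore. Transposing X and iterating over
% the second dimension makes each read return a 3-by-1 column of features.
adsX = arrayDatastore(X','IterationDimension',2);
adsY = arrayDatastore(Y);

% Combine predictors and responses so that each read returns both.
cds = combine(adsX,adsY);
data = read(cds);   % 1-by-2 cell array: {predictors, response}
```

The resulting `cds` object is a combined datastore, so it would be imported by selecting Import Data > Import Custom Data.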

Image Augmentation

For image classification problems, Deep Network Designer provides simple augmentation options to apply to the training data. Open the Import Image Classification Data dialog box by selecting Import Data > Import Image Classification Data. You can select options to apply a random combination of reflection, rotation, rescaling, and translation operations to the training data.

To perform more general and complex image preprocessing operations than those offered by Deep Network Designer, use TransformedDatastore and CombinedDatastore objects. To import CombinedDatastore and TransformedDatastore objects, select Import Data > Import Custom Data.

For more information on image augmentation, see Preprocess Images for Deep Learning.
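As an illustration of custom preprocessing with a TransformedDatastore object, the sketch below applies a small random shift to each digit image and returns the image together with its label. The helper name `shiftDigit` and the choice of a circular shift are assumptions made for this example, not options provided by the app.

```matlab
% Create an image datastore containing the digits data.
dataFolder = fullfile(toolboxdir('nnet'),'nndemos', ...
    'nndatasets','DigitDataset');
imds = imageDatastore(dataFolder, ...
    'IncludeSubfolders',true, ...
    'LabelSource','foldernames');

% Apply the helper on every read. With 'IncludeInfo' set to true, the helper
% also receives the info structure, which holds the label of the image.
tds = transform(imds,@shiftDigit,'IncludeInfo',true);

% Read one preprocessed observation: {image, label}.
data = read(tds);

function [dataOut,info] = shiftDigit(img,info)
    % Rescale pixel values to the range [0,1].
    img = rescale(img);
    % Shift the image circularly by up to 2 pixels along each dimension.
    img = circshift(img,randi([-2 2],1,2));
    % Return the image and its label as a 1-by-2 cell array.
    dataOut = {img,info.Label};
end
```

Because `tds` is a TransformedDatastore object, it would be imported by selecting Import Data > Import Custom Data.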

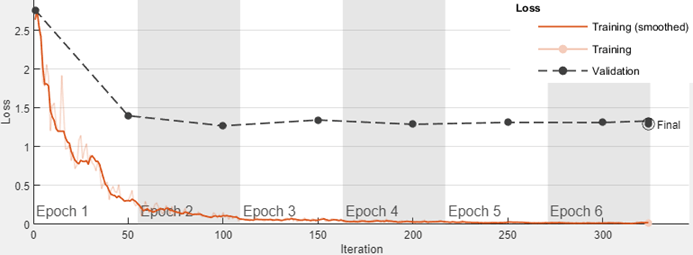

Validation Data

In Deep Network Designer, you can import validation data to use during training. You can monitor validation metrics, such as loss and accuracy, to assess if the network is overfitting or underfitting and adjust the training options as required. For example, if the validation loss is much higher than the training loss, then the network might be overfitting.

In Deep Network Designer, you can import validation data:

- From a datastore in the workspace.
- From a folder containing subfolders of images for each class (image classification data only).
- By splitting a portion of the training data to use as validation data (image classification data only). The data is split into validation and training sets once, before training. This method is called holdout validation.

Split Validation Data from Training Data

When splitting the holdout validation data from the training data, Deep Network Designer splits a percentage of the training data from each class. For example, suppose you have a data set with two classes, cat and dog, and choose to use 30% of the training data for validation. Deep Network Designer uses the last 30% of images with the label "cat" and the last 30% with the label "dog" as the validation set.
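Outside the app, a comparable per-class holdout split can be sketched with the splitEachLabel function listed under See Also. This is an illustration that assumes the digits data set is installed. It is not necessarily how Deep Network Designer performs the split internally.

```matlab
% Load the digits data, labeled by subfolder name.
dataFolder = fullfile(toolboxdir('nnet'),'nndemos', ...
    'nndatasets','DigitDataset');
imds = imageDatastore(dataFolder, ...
    'IncludeSubfolders',true, ...
    'LabelSource','foldernames');

% Keep the first 70% of the files in each class for training and
% assign the remaining 30% of each class to validation.
[imdsTrain,imdsValidation] = splitEachLabel(imds,0.7);

% Display the number of images per class in each set.
countEachLabel(imdsTrain)
countEachLabel(imdsValidation)
```

Passing 'randomized' as a third argument, as in splitEachLabel(imds,0.7,'randomized'), assigns files to the two sets at random within each class instead of by position.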

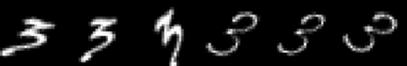

Rather than using the last 30% of the training data as validation data, you can choose to randomly allocate the observations to the training and validation sets by selecting the Randomize check box in the Import Image Data dialog box. Randomizing the images can improve the accuracy of networks trained on data stored in a nonrandom order. For example, the digits data set consists of 10,000 synthetic grayscale images of handwritten digits. This data set has an underlying order in which images with the same handwriting style appear next to each other within each class. An example of the display follows.

See Also

Deep Network Designer | TransformedDatastore | CombinedDatastore | imageDatastore | augmentedImageDatastore | splitEachLabel