Compare Agents on the Discrete Pendulum Swing-Up Environment

This example shows how to create and train frequently used default agents on a discrete action space pendulum swing-up environment. This environment represents a simple frictionless pendulum that initially hangs in a downward position. The agent can apply a control torque on the pendulum, and its goal is to make the pendulum stand upright using minimal control effort. The example plots performance metrics such as the total training time and the total reward for each trained agent. The results that the agents obtain in this environment, with the selected initial conditions and random number generator seed, do not necessarily imply that specific agents are in general better than others. Also, note that the training times depend on the computer and operating system you use to run the example, and on other processes running in the background. Your training times might differ substantially from the training times shown in the example.

Fix Random Number Stream for Reproducibility

The example code might involve computation of random numbers at various stages. Fixing the random number stream at the beginning of various sections in the example code preserves the random number sequence in the section every time you run it, and increases the likelihood of reproducing the results. For more information, see Results Reproducibility.

Fix the random number stream with seed zero and random number algorithm Mersenne Twister. For more information on controlling the seed used for random number generation, see rng.

previousRngState = rng(0,"twister")

previousRngState = struct with fields:

Type: 'twister'

Seed: 0

State: [625×1 uint32]

The output previousRngState is a structure that contains information about the previous state of the stream. You will restore the state at the end of the example.
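As a quick illustration of why fixing the seed helps, the following sketch draws the same random numbers twice by resetting the stream in between. The variable names a, b, and sameDraws are illustrative only and are not used elsewhere in the example.

```matlab
% Seed the stream and draw three uniform random numbers.
rng(0,"twister")
a = rand(1,3);

% Reset the stream to the same seed and draw again.
rng(0,"twister")
b = rand(1,3);

% The two draws match because the stream restarted from the same state.
sameDraws = isequal(a,b)
```

The later sections reset the stream in the same way before creating each agent and before each simulation.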

Discrete Action Space Pendulum Swing-Up Model

The reinforcement learning environment for this example is a simple frictionless pendulum that initially hangs in a downward position. The agent can apply a control torque on the pendulum, and its goal is to make the pendulum stand upright using minimal control effort.

Open the model.

mdl = "rlSimplePendulumModel";

open_system(mdl)

In this model:

The balanced, upright pendulum position is zero radians, and the downward hanging pendulum position is pi radians (the pendulum angle is counterclockwise-positive).

The torque (counterclockwise-positive) action can be one of three possible values: –2, 0, or 2 N·m.

The observations from the environment are the sine of the pendulum angle, the cosine of the pendulum angle, and the pendulum angle derivative.

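The reward $r_t$, provided at every time step, is

$r_t = -\left(\theta_t^2 + 0.1\,\dot{\theta}_t^2 + 0.001\,u_{t-1}^2\right)$

Here:

$\theta_t$ is the angle of displacement from the upright position.

$\dot{\theta}_t$ is the derivative of the displacement angle.

$u_{t-1}$ is the control effort from the previous time step.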

For more information on this model, see Load Predefined Control System Environments.
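To make the reward concrete, the following sketch evaluates it for two reference states. It assumes the quadratic penalty weights 1, 0.1, and 0.001 (on the angle, the angular velocity, and the previous torque, respectively) documented for this predefined pendulum model. The helper name pendulumReward is hypothetical and is not part of the example.

```matlab
% Hypothetical helper: per-step reward, assuming the weights 1, 0.1, and 0.001.
pendulumReward = @(theta,thetaDot,uPrev) ...
    -(theta.^2 + 0.1*thetaDot.^2 + 0.001*uPrev.^2);

% Pendulum hanging downward, at rest, with no torque applied.
rDown = pendulumReward(pi,0,0)

% Pendulum upright, at rest, with no torque applied.
rUp = pendulumReward(0,0,0)
```

Under these assumed weights, a pendulum that stays at rest in the downward position earns about -9.87 per step, or roughly -4935 over 500 steps, so a total reward near that value indicates that the agent never swings the pendulum up.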

Create Environment Object

Create a predefined environment object for the pendulum.

env = rlPredefinedEnv("SimplePendulumModel-Discrete")

env =

SimulinkEnvWithAgent with properties:

Model : rlSimplePendulumModel

AgentBlock : rlSimplePendulumModel/RL Agent

ResetFcn : []

UseFastRestart : on

To define the initial condition of the pendulum as hanging downward, specify an environment reset function using an anonymous function handle. This reset function sets the model workspace variable theta0 to pi.

env.ResetFcn = @(in)setVariable(in,"theta0",pi,"Workspace",mdl);

Reset the environment.

reset(env);

For more information on Simulink® environments and their reset functions, see SimulinkEnvWithAgent.

Specify the agent sample time Ts and the simulation time Tf in seconds.

Ts = 0.05; Tf = 20;

Get the observation and action specification information from the environment.

obsInfo = getObservationInfo(env)

obsInfo =

rlNumericSpec with properties:

LowerLimit: -Inf

UpperLimit: Inf

Name: "observations"

Description: [0×0 string]

Dimension: [3 1]

DataType: "double"

actInfo = getActionInfo(env)

actInfo =

rlFiniteSetSpec with properties:

Elements: [3×1 double]

Name: "torque"

Description: [0×0 string]

Dimension: [1 1]

DataType: "double"
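The action specification can also be reproduced by hand, which makes the size of the discrete action set explicit. This is a minimal sketch that assumes the three torque values listed in the model description; the variable names actSpec and numActions are illustrative and are not used elsewhere in the example.

```matlab
% Illustrative specification built from the three torque values
% listed in the model description.
actSpec = rlFiniteSetSpec([-2 0 2]);
actSpec.Name = "torque";

% Number of discrete actions the agent chooses from at each step.
numActions = numel(actSpec.Elements)
```

Every agent in this example selects one of these three values at each sample time.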

Configure Training and Simulation Options for All Agents

Set up an evaluator object to evaluate the agent ten times without exploration every 100 training episodes.

evl = rlEvaluator(NumEpisodes=10,EvaluationFrequency=100);

Create a training options object. For this example, use the following options.

Run the training for a maximum of 1000 episodes, with each episode lasting 500 time steps.

Stop the training when the agent receives an average cumulative reward greater than –1100 over the ten evaluation episodes. At this point, the agent can quickly balance the pendulum in the upright position using minimal control effort.

To gain better insight into the agent's behavior during training, plot the training progress (default option). If you want to achieve faster training times, set the Plots option to none.

trainOpts = rlTrainingOptions(...
    MaxEpisodes=1000,...
    MaxStepsPerEpisode=500,...
    StopTrainingCriteria="EvaluationStatistic",...
    StopTrainingValue=-1100,...
    Plots="training-progress");

For more information on training options, see rlTrainingOptions.
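The following sketch works out what these settings imply for the training budget. It assumes that an evaluation runs after every 100th training episode, and it restates the option values as plain variables (maxEpisodes, maxStepsPerEpisode, and evalFrequency are illustrative names, not properties read from the options objects).

```matlab
% Option values restated as plain variables (illustrative names).
maxEpisodes = 1000;
maxStepsPerEpisode = 500;
evalFrequency = 100;

% Training episodes after which an evaluation is assumed to run.
evalEpisodes = evalFrequency:evalFrequency:maxEpisodes

% Upper bound on the number of training steps, excluding evaluation episodes.
maxTrainingSteps = maxEpisodes*maxStepsPerEpisode
```

Because the stopping criterion is checked only at evaluations, training under this assumption can stop only at one of the episodes listed in evalEpisodes or when the episode limit is reached.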

To simulate the trained agent, create a simulation options object and configure it to simulate for 500 steps.

simOptions = rlSimulationOptions(MaxSteps=500);

For more information on simulation options, see rlSimulationOptions.

Create, Train, and Simulate a DQN Agent

The constructor functions initialize the agent networks randomly. Ensure reproducibility of the section by fixing the seed used for random number generation.

rng(0,"twister")

Create a default rlDQNAgent object using the environment specification objects.

dqnAgent = rlDQNAgent(obsInfo,actInfo);

To ensure that the RL Agent block in the environment executes every Ts seconds instead of the default setting of one second, set the SampleTime property of dqnAgent.

dqnAgent.AgentOptions.SampleTime = Ts;

Set a lower learning rate and a lower gradient threshold to promote a smoother (though possibly slower) training.

dqnAgent.AgentOptions.CriticOptimizerOptions.LearnRate = 1e-3; dqnAgent.AgentOptions.CriticOptimizerOptions.GradientThreshold = 1;

Use a larger experience buffer to store more experiences, therefore decreasing the likelihood of catastrophic forgetting.

dqnAgent.AgentOptions.ExperienceBufferLength = 1e6;

Train the agent, passing the agent, the environment, and the previously defined training options and evaluator objects to train. Training is a computationally intensive process that takes several minutes to complete. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true.

doTraining = false;
if doTraining
    % Train the agent. Record the training time.
    tic
    dqnTngRes = train(dqnAgent,env,trainOpts,Evaluator=evl);
    dqnTngTime = toc;
    % Extract the number of training episodes and the number of total steps.
    dqnTngEps = dqnTngRes.EpisodeIndex(end);
    dqnTngSteps = sum(dqnTngRes.TotalAgentSteps);
    % Uncomment to save the trained agent and the training metrics.
    % save("dpsuBchDQNData.mat", ...
    %     "dqnAgent","dqnTngEps","dqnTngSteps","dqnTngTime")
else
    % Load the pretrained agent and the metrics for the example.
    load("dpsuBchDQNData.mat", ...
        "dqnAgent","dqnTngEps","dqnTngSteps","dqnTngTime")
end

For the DQN agent, the training converges to a solution after 200 episodes. You can check the trained agent within the pendulum swing-up environment.

Ensure reproducibility of the simulation by fixing the seed used for random number generation.

rng(0,"twister")

Configure the agent to use a greedy policy (no exploration) in simulation.

dqnAgent.UseExplorationPolicy = false;

Simulate the environment with the trained agent for 500 steps and display the total reward. For more information on agent simulation, see sim.

experience = sim(env,dqnAgent,simOptions);

dqnTotalRwd = sum(experience.Reward)

dqnTotalRwd = -754.0534

The trained DQN agent is able to swing up and stabilize the pendulum upright.

Create, Train, and Simulate a PG Agent

The constructor functions initialize the agent networks randomly. Ensure reproducibility of the section by fixing the seed used for random number generation.

rng(0,"twister")

Create a default rlPGAgent object using the environment specification objects.

pgAgent = rlPGAgent(obsInfo,actInfo);

To ensure that the RL Agent block in the environment executes every Ts seconds instead of the default setting of one second, set the SampleTime property of pgAgent.

pgAgent.AgentOptions.SampleTime = Ts;

Set a lower learning rate and a lower gradient threshold to promote a smoother (though possibly slower) training.

pgAgent.AgentOptions.CriticOptimizerOptions.LearnRate = 1e-3; pgAgent.AgentOptions.ActorOptimizerOptions.LearnRate = 1e-3; pgAgent.AgentOptions.CriticOptimizerOptions.GradientThreshold = 1; pgAgent.AgentOptions.ActorOptimizerOptions.GradientThreshold = 1;

Set the entropy loss weight to increase exploration.

pgAgent.AgentOptions.EntropyLossWeight = 0.005;

Train the agent, passing the agent, the environment, and the previously defined training options and evaluator objects to train. Training is a computationally intensive process that takes several minutes to complete. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true.

doTraining = false;
if doTraining
    % Train the agent. Record the training time.
    tic
    pgTngRes = train(pgAgent,env,trainOpts,Evaluator=evl);
    pgTngTime = toc;
    % Extract the number of training episodes and the number of total steps.
    pgTngEps = pgTngRes.EpisodeIndex(end);
    pgTngSteps = sum(pgTngRes.TotalAgentSteps);
    % Uncomment to save the trained agent and the training metrics.
    % save("dpsuBchPGData.mat", ...
    %     "pgAgent","pgTngEps","pgTngSteps","pgTngTime")
else
    % Load the pretrained agent and the metrics for the example.
    load("dpsuBchPGData.mat", ...
        "pgAgent","pgTngEps","pgTngSteps","pgTngTime")
end

For the PG agent, the training does not converge to a solution within 1000 episodes. You can check the trained agent within the pendulum swing-up environment.

Ensure reproducibility of the simulation by fixing the seed used for random number generation.

rng(0,"twister")

Configure the agent to use a greedy policy (no exploration) in simulation.

pgAgent.UseExplorationPolicy = false;

Simulate the environment with the trained agent for 500 steps and display the total reward. For more information on agent simulation, see sim.

experience = sim(env,pgAgent,simOptions);

pgTotalRwd = sum(experience.Reward)

pgTotalRwd = -1.8815e+03

The trained PG agent is not able to swing up the pendulum.

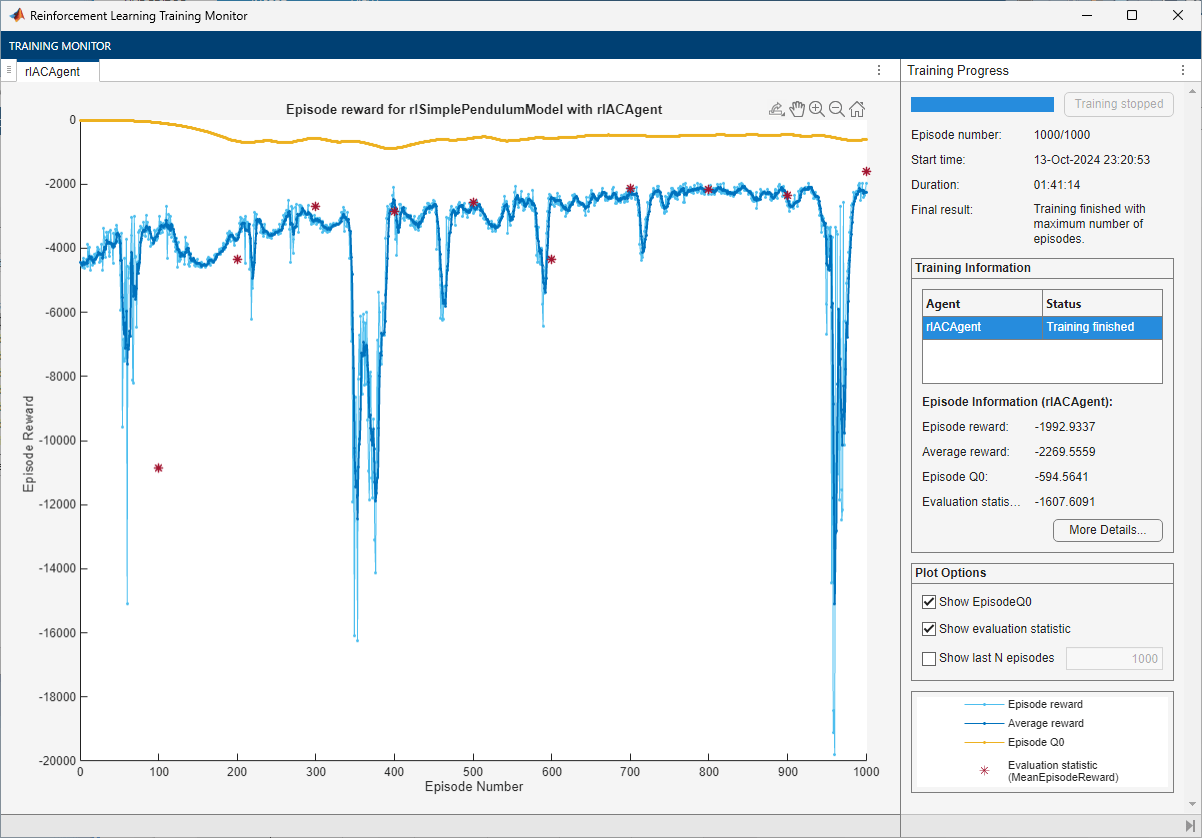

Create, Train, and Simulate an AC Agent

The constructor functions initialize the agent networks randomly. Ensure reproducibility of the section by fixing the seed used for random number generation.

rng(0,"twister")

Create a default rlACAgent object using the environment specification objects.

acAgent = rlACAgent(obsInfo,actInfo);

To ensure that the RL Agent block in the environment executes every Ts seconds instead of the default setting of one second, set the SampleTime property of acAgent.

acAgent.AgentOptions.SampleTime = Ts;

Set a lower learning rate and a lower gradient threshold to promote a smoother (though possibly slower) training.

acAgent.AgentOptions.CriticOptimizerOptions.LearnRate = 1e-3; acAgent.AgentOptions.ActorOptimizerOptions.LearnRate = 1e-3; acAgent.AgentOptions.CriticOptimizerOptions.GradientThreshold = 1; acAgent.AgentOptions.ActorOptimizerOptions.GradientThreshold = 1;

Set the entropy loss weight to increase exploration.

acAgent.AgentOptions.EntropyLossWeight = 0.005;

Train the agent, passing the agent, the environment, and the previously defined training options and evaluator objects to train. Training is a computationally intensive process that takes several minutes to complete. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true.

doTraining = false;
if doTraining
    % Train the agent. Record the training time.
    tic
    acTngRes = train(acAgent,env,trainOpts,Evaluator=evl);
    acTngTime = toc;
    % Extract the number of training episodes and the number of total steps.
    acTngEps = acTngRes.EpisodeIndex(end);
    acTngSteps = sum(acTngRes.TotalAgentSteps);
    % Uncomment to save the trained agent and the training metrics.
    % save("dpsuBchACData.mat", ...
    %     "acAgent","acTngEps","acTngSteps","acTngTime")
else
    % Load the pretrained agent and the metrics for the example.
    load("dpsuBchACData.mat", ...
        "acAgent","acTngEps","acTngSteps","acTngTime")
end

For the AC agent, as for the PG agent, the training does not converge to a solution after 1000 episodes. However, the evaluation score of the agent seemed to be steadily improving, which suggests that training the AC agent for longer might yield a trained agent able to stabilize the pendulum upright. You can check the trained agent within the pendulum swing-up environment.

Ensure reproducibility of the simulation by fixing the seed used for random number generation.

rng(0,"twister")

Configure the agent to use a greedy policy (no exploration) in simulation.

acAgent.UseExplorationPolicy = false;

Simulate the environment with the trained agent for 500 steps and display the total reward. For more information on agent simulation, see sim.

experience = sim(env,acAgent,simOptions);

acTotalRwd = sum(experience.Reward)

acTotalRwd = -1.7411e+03

The trained AC agent is able to swing up the pendulum to some extent; however, it does not stabilize it in the upright position.

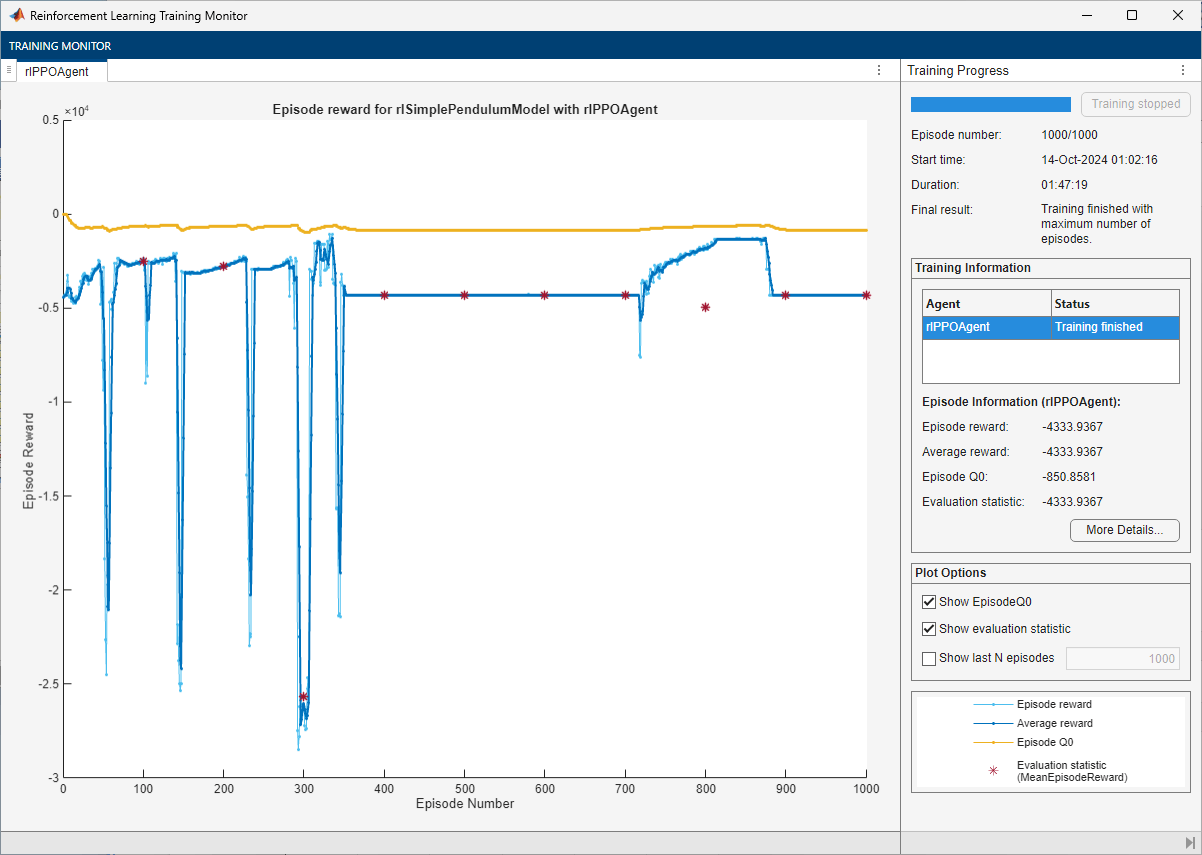

Create, Train, and Simulate a PPO Agent

The constructor functions initialize the agent networks randomly. Ensure reproducibility of the section by fixing the seed used for random number generation.

rng(0,"twister")

Create a default rlPPOAgent object using the environment specification objects.

ppoAgent = rlPPOAgent(obsInfo,actInfo);

To ensure that the RL Agent block in the environment executes every Ts seconds instead of the default setting of one second, set the SampleTime property of ppoAgent.

ppoAgent.AgentOptions.SampleTime = Ts;

Set a lower learning rate and a lower gradient threshold to promote a smoother (though possibly slower) training.

ppoAgent.AgentOptions.CriticOptimizerOptions.LearnRate = 1e-3; ppoAgent.AgentOptions.ActorOptimizerOptions.LearnRate = 1e-3; ppoAgent.AgentOptions.CriticOptimizerOptions.GradientThreshold = 1; ppoAgent.AgentOptions.ActorOptimizerOptions.GradientThreshold = 1;

Train the agent, passing the agent, the environment, and the previously defined training options and evaluator objects to train. Training is a computationally intensive process that takes several minutes to complete. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true.

doTraining = false;
if doTraining
    % Train the agent. Record the training time.
    tic
    ppoTngRes = train(ppoAgent,env,trainOpts,Evaluator=evl);
    ppoTngTime = toc;
    % Extract the number of training episodes and the number of total steps.
    ppoTngEps = ppoTngRes.EpisodeIndex(end);
    ppoTngSteps = sum(ppoTngRes.TotalAgentSteps);
    % Uncomment to save the trained agent and the training metrics.
    % save("dpsuBchPPOData.mat", ...
    %     "ppoAgent","ppoTngEps","ppoTngSteps","ppoTngTime")
else
    % Load the pretrained agent and results for the example.
    load("dpsuBchPPOData.mat", ...
        "ppoAgent","ppoTngEps","ppoTngSteps","ppoTngTime")
end

For the PPO agent, as for the PG and AC agents, the training does not converge to a solution after 1000 episodes. You can check the trained agent within the pendulum swing-up environment.

Ensure reproducibility of the simulation by fixing the seed used for random number generation.

rng(0,"twister")

Configure the agent to use a greedy policy (no exploration) in simulation.

ppoAgent.UseExplorationPolicy = false;

Simulate the environment with the trained agent for 500 steps and display the total reward. For more information on agent simulation, see sim.

experience = sim(env,ppoAgent,simOptions);

ppoTotalRwd = sum(experience.Reward)

ppoTotalRwd = -4.3339e+03

The trained PPO agent is not able to swing up the pendulum.

Create, Train, and Simulate a SAC Agent

The constructor functions initialize the agent networks randomly. Ensure reproducibility of the section by fixing the seed used for random number generation.

rng(0,"twister")

Create a default rlSACAgent object using the environment specification objects.

sacAgent = rlSACAgent(obsInfo,actInfo);

To ensure that the RL Agent block in the environment executes every Ts seconds instead of the default setting of one second, set the SampleTime property of sacAgent.

sacAgent.AgentOptions.SampleTime = Ts;

Set a lower learning rate and a lower gradient threshold to promote a smoother (though possibly slower) training.

sacAgent.AgentOptions.CriticOptimizerOptions(1).LearnRate = 1e-3; sacAgent.AgentOptions.CriticOptimizerOptions(2).LearnRate = 1e-3; sacAgent.AgentOptions.CriticOptimizerOptions(1).GradientThreshold = 1; sacAgent.AgentOptions.CriticOptimizerOptions(2).GradientThreshold = 1; sacAgent.AgentOptions.ActorOptimizerOptions.LearnRate = 1e-3; sacAgent.AgentOptions.ActorOptimizerOptions.GradientThreshold = 1;

Set the initial entropy weight and target entropy to increase exploration.

sacAgent.AgentOptions.EntropyWeightOptions.EntropyWeight = 5e-3; sacAgent.AgentOptions.EntropyWeightOptions.TargetEntropy = 5e-1;

Use a larger experience buffer to store more experiences, therefore decreasing the likelihood of catastrophic forgetting.

sacAgent.AgentOptions.ExperienceBufferLength = 1e6;

Train the agent, passing the agent, the environment, and the previously defined training options and evaluator objects to train. Training is a computationally intensive process that takes several minutes to complete. To save time while running this example, load a pretrained agent by setting doTraining to false. To train the agent yourself, set doTraining to true.

doTraining = false;
if doTraining
    % Train the agent. Record the training time.
    tic
    sacTngRes = train(sacAgent,env,trainOpts,Evaluator=evl);
    sacTngTime = toc;
    % Extract the number of training episodes and the number of total steps.
    sacTngEps = sacTngRes.EpisodeIndex(end);
    sacTngSteps = sum(sacTngRes.TotalAgentSteps);
    % Uncomment to save the trained agent and the training metrics.
    % save("dpsuBchSACData.mat", ...
    %     "sacAgent","sacTngEps","sacTngSteps","sacTngTime")
else
    % Load the pretrained agent and results for the example.
    load("dpsuBchSACData.mat", ...
        "sacAgent","sacTngEps","sacTngSteps","sacTngTime")
end

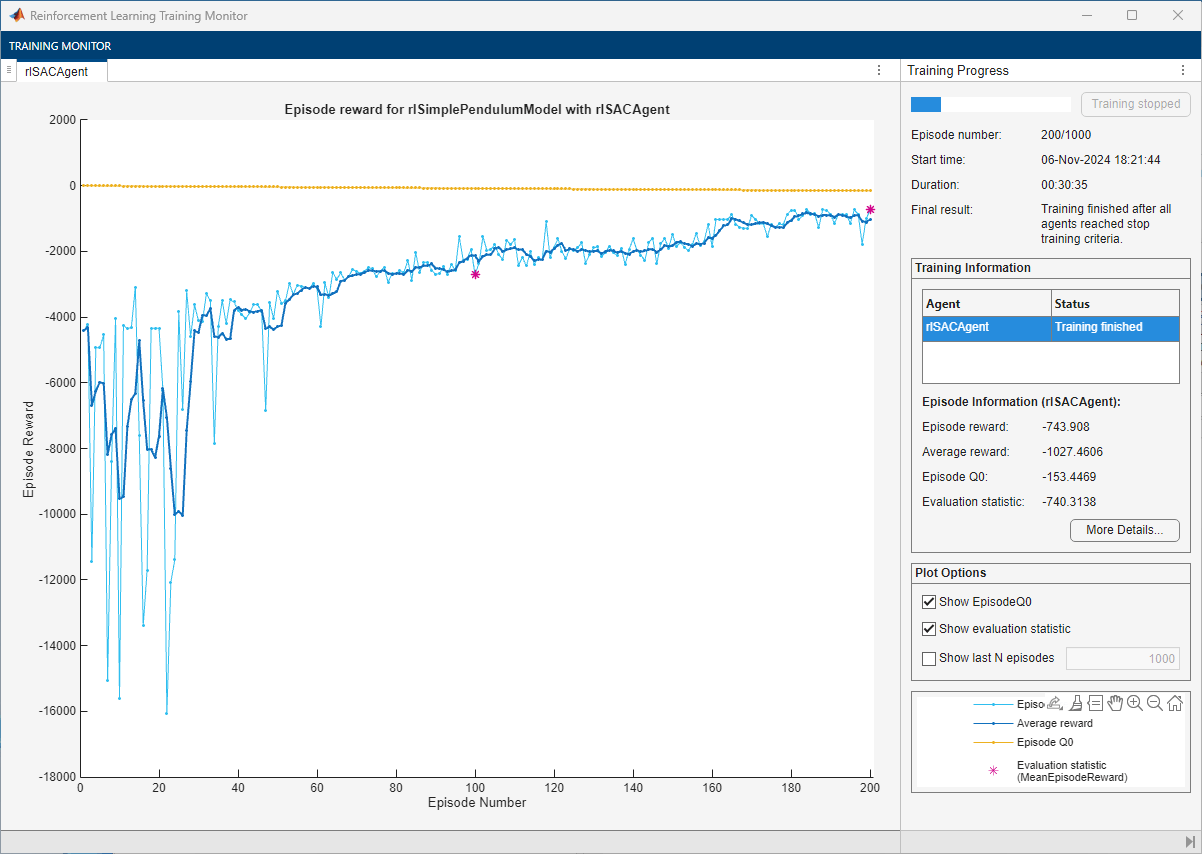

For the SAC agent, the training converges to a solution after 200 episodes. You can check the trained agent within the pendulum swing-up environment.

Ensure reproducibility of the simulation by fixing the seed used for random number generation.

rng(0,"twister")

Configure the agent to use a greedy policy (no exploration) in simulation.

sacAgent.UseExplorationPolicy = false;

Simulate the environment with the trained agent for 500 steps. For more information on agent simulation, see rlSimulationOptions and sim.

experience = sim(env,sacAgent,simOptions);

sacTotalRwd = sum(experience.Reward)

sacTotalRwd = -740.3138

The trained SAC agent is able to swing up and stabilize the pendulum upright.

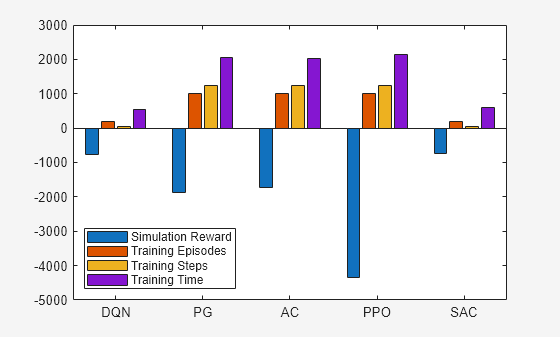

Plot Training and Simulation Metrics

For each agent, collect the total reward from the final simulation episode, the number of training episodes, the total number of agent steps, and the total training time as shown in the Reinforcement Learning Training Monitor.

simReward = [

dqnTotalRwd

pgTotalRwd

acTotalRwd

ppoTotalRwd

sacTotalRwd

];

tngEpisodes = [

dqnTngEps

pgTngEps

acTngEps

ppoTngEps

sacTngEps

];

tngSteps = [

dqnTngSteps

pgTngSteps

acTngSteps

ppoTngSteps

sacTngSteps

];

tngTime = [

dqnTngTime

pgTngTime

acTngTime

ppoTngTime

sacTngTime

];

Plot the simulation reward, the number of training episodes, the number of training steps (that is, the number of interactions between the agent and the environment), and the training time. Scale the data by the factor [1 1 2e5 3] for better visualization.

bar([simReward,tngEpisodes,tngSteps,tngTime]./[1 1 2e5 3])
xticklabels(["DQN" "PG" "AC" "PPO" "SAC"])
legend( ...
    ["Simulation Reward", ...
    "Training Episodes", ...
    "Training Steps", ...
    "Training Time"], ...
    "Location","southwest")
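The division by [1 1 2e5 3] relies on implicit expansion: dividing a matrix by a row vector divides each column by the matching vector element. The sketch below shows this on a small matrix. The numbers in metrics are placeholders chosen for easy arithmetic, not results from this example.

```matlab
% Placeholder metrics: one row per agent, one column per metric
% (reward, episodes, steps, time). These are not results from the example.
metrics = [ -800  200 1e5  600
           -1900 1000 5e5 1500];

% One scale factor per column.
scale = [1 1 2e5 3];

% Implicit expansion divides each column by its own scale factor.
scaled = metrics./scale
```

Scaling the step count and the training time this way brings all four metrics into a comparable range, so that no single group of bars dominates the plot.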

Only the trained DQN and SAC agents are able to stabilize the pendulum. With a different random seed, the initial agent networks would be different, and therefore, convergence results might be different. For more information on the relative strengths and weaknesses of each agent, see Reinforcement Learning Agents.

Save all the variables created in this example, including the training results, for later use.

% Uncomment to save all the workspace variables
% save dpsuAllVariables.mat

Restore the random number stream using the information stored in previousRngState.

rng(previousRngState);