Assess Requirements-Based Testing for ISO 26262

You can use the Model Testing Dashboard to assess the quality and completeness of your requirements-based testing activities in accordance with ISO 26262-6:2018. The dashboard facilitates this activity by monitoring the traceability between requirements, tests, and test results and by providing a summary of testing completeness and structural coverage. The dashboard analyzes the implementation and verification artifacts in a project and provides:

Completeness and quality metrics for the requirements-based tests in accordance with ISO 26262-6:2018, Clause 9.4.3

Completeness and quality metrics for the requirements-based test results in accordance with ISO 26262-6:2018, Clause 9.4.4

A list of artifacts in the project, organized by the units

To assess the completeness of your requirements-based testing activities, follow these automated and manual review steps using the Model Testing Dashboard. For information on how to enforce low complexity and size in your design artifacts, see Assess Model Size and Complexity for ISO 26262.

Open the Model Testing Dashboard and Collect Metric Results

To analyze testing artifacts using the Model Testing Dashboard:

Open a project that contains your models and testing artifacts. Alternatively, to load an example project for the dashboard, enter this code in the MATLAB® Command Window:

openExample("slcheck/ExploreTestingMetricDataInModelTestingDashboardExample"); openProject("cc_CruiseControl");

Open the dashboard. To open the Model Testing Dashboard, use one of these approaches:

On the Project tab, click Model Testing Dashboard.

At the MATLAB command line, enter:

modelTestingDashboard

In the Artifacts panel, the dashboard organizes artifacts such as requirements, tests, and test results under the units that they trace to. To view the metric results for the unit cc_DriverSwRequest in the example project, in the Project panel, click cc_DriverSwRequest. The dashboard collects metric results and populates the widgets with the metric data for the unit.

Note

If you do not specify the models that are considered units, then the Model Testing Dashboard considers a model to be a unit if it does not reference other models. You can control which models appear as units and components by labeling them in your project and configuring the Model Testing Dashboard to recognize the labels. For more information, see Specify Models as Components and Units.
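The default rule in the note can be illustrated with a small sketch. This is not the dashboard's implementation or API. It only applies the stated rule (a model is a unit if it does not reference other models) to a hypothetical model-reference map. Apart from cc_DriverSwRequest, the model names are invented for illustration.

```python
# Hypothetical model-reference map: model name -> models that it references.
# Only cc_DriverSwRequest comes from the example project; the other names
# are assumptions made for this sketch.
model_references = {
    "example_top_model": ["cc_DriverSwRequest", "example_other_unit"],
    "cc_DriverSwRequest": [],
    "example_other_unit": [],
}


def classify_models(references):
    """Apply the default rule: a model that references no other model is a unit."""
    units = sorted(name for name, refs in references.items() if not refs)
    non_units = sorted(name for name, refs in references.items() if refs)
    return units, non_units


units, non_units = classify_models(model_references)
print("Units:", units)
print("Not units by default:", non_units)
```

If you label units and components in your project, the labels take the place of this default rule.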

The dashboard widgets summarize the traceability and completeness measurements for the testing artifacts for each unit. The metric results displayed with the red Non-Compliant overlay icon indicate issues that you might need to address to complete requirements-based testing for the unit. Results are compliant if they show full traceability, test completion, or model coverage. To see the compliance thresholds for a metric, point to the overlay icon. To explore the data in more detail, click an individual metric widget. For the selected metric, a table displays the artifacts and the metric value for each artifact. The table provides hyperlinks to open the artifacts so that you can get detailed metric results and fix the artifacts that have issues. For more information about using the Model Testing Dashboard, see Explore Status and Quality of Testing Activities Using Model Testing Dashboard.

Test Review

To verify that a unit satisfies its requirements, you create tests for the unit based on the requirements. ISO 26262-6, Clause 9.4.3 requires that tests for a unit are derived from the requirements. When you create a test for a requirement, you add a traceability link between the test and the requirement, as described in Link Requirements to Tests (Requirements Toolbox) and in Establish Requirements Traceability for Testing (Simulink Test). Traceability allows you to track which requirements have been verified by your tests and identify requirements that the model does not satisfy. Clause 9.4.3 requires traceability between requirements and tests, and review of the correctness and completeness of the tests. To assess the correctness and completeness of the tests for a unit, use the metrics in the Test Analysis section of the Model Testing Dashboard.

The following is an example checklist provided to aid in reviewing test correctness and completeness with respect to ISO 26262-6. For each question, perform the review activity using the corresponding dashboard metric and apply the corresponding fix. This checklist is provided as an example and should be reviewed and modified to meet your application needs.

| Checklist Item | Review Activity | Dashboard Metric | Fix |
|---|---|---|---|
| 1 — Does each test trace to a requirement? | Check that 100% of the tests for the unit are linked to requirements by viewing the widgets in the Tests Linked to Requirements section. | Tests Linked to Requirements<br>Metric ID —<br>For more information, see Test Case with Requirement Distribution. | For each unlinked test, add a link to the requirement that the test verifies, as described in Fix Requirements-Based Testing Issues. |
| 2 — Does each test trace to the correct requirements? | For each test, manually verify that the requirement it is linked to is correct. Click the Tests with Requirements widget to view a table of the tests. To see the requirements that a test traces to, in the Artifacts column, click the arrow to the left of the test name. | Tests Linked to Requirements<br>Metric ID —<br>For more information, see Test Case with Requirement. | For each link to an incorrect requirement, remove the link. If the test is missing a link to the correct requirement, add the correct link. |
| 3 — Do the tests cover all requirements? | Check that 100% of the requirements for the unit are linked to tests by viewing the widgets in the Requirements Linked to Tests section. | Requirements Linked to Tests<br>Metric ID —<br>For more information, see Requirements with Tests Distribution. | For each unlinked requirement, add a link to the test that verifies it, as described in Fix Requirements-Based Testing Issues. |
| 4 — Do the test cases define the expected results including pass/fail criteria? | Manually review the test cases of each type. Click the widgets in the Tests by Type section to view a table of the test cases for each type: Simulation, Equivalence, and Baseline. Open each test case in the Test Manager by using the hyperlinks in the Artifact column. Baseline test cases must define baseline criteria. For simulation test cases, review that each test case defines pass/fail criteria by using assessments, as described in Assess Simulation and Compare Output Data (Simulink Test). | Tests by Type<br>Metric ID —<br>For more information, see Test Case Type. | For each test case that does not define expected results, click the hyperlink in the Artifact column to open the test case in the Test Manager, then add the expected test definition and pass/fail criteria. |
| 5 — Does each test properly test the requirement that it traces to? | Manually review the requirement links and content for each test. Click the Tests with Requirements widget to view a table of the tests. To see the requirements that a test traces to, in the Artifact column, click the arrow to the left of the test name. Use the hyperlinks to open the test and requirement and review that the test properly tests the requirement. | Tests Linked to Requirements<br>Metric ID —<br>For more information, see Test Case with Requirement. | For each test that does not properly test the requirement it traces to, click the hyperlink in the Artifact column to open the test in the Test Manager, then update the test. Alternatively, add tests that further test the requirement. |
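The two automated checks in this checklist (items 1 and 3) amount to simple set arithmetic over the traceability links. The following sketch illustrates that arithmetic on hypothetical data. It does not call the dashboard or read a project, and the test names and requirement IDs are invented for illustration.

```python
# Hypothetical traceability data for one unit: test name -> linked requirement IDs.
test_links = {
    "test_activate": ["REQ-1"],
    "test_cancel": ["REQ-2", "REQ-3"],
    "test_resume": [],  # unlinked test, flagged by checklist item 1
}
# Hypothetical requirements allocated to the unit.
unit_requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]


def traceability_summary(links, requirements):
    """Report the share of linked tests and linked requirements, plus the gaps."""
    unlinked_tests = sorted(test for test, reqs in links.items() if not reqs)
    linked_reqs = {req for reqs in links.values() for req in reqs}
    unlinked_reqs = sorted(req for req in requirements if req not in linked_reqs)

    def percent(part, whole):
        return 100.0 * part / whole if whole else 0.0

    return {
        "tests_linked_pct": percent(len(links) - len(unlinked_tests), len(links)),
        "requirements_linked_pct": percent(
            len(requirements) - len(unlinked_reqs), len(requirements)
        ),
        "unlinked_tests": unlinked_tests,
        "unlinked_requirements": unlinked_reqs,
    }


summary = traceability_summary(test_links, unit_requirements)
for key, value in summary.items():
    print(f"{key}: {value}")
```

Both percentages must reach 100% for items 1 and 3 to pass. Items 2, 4, and 5 still need manual review, because a link can exist and be wrong.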

Test Results Review

After you run tests on a unit, you must review the results to check that the tests executed, passed, and sufficiently tested the unit. Clause 9.4.4 in ISO 26262-6:2018 requires that you analyze the coverage of requirements for each unit. Check that each of the tests tested the intended model and passed. Additionally, measure the coverage of the unit by collecting model coverage results in the tests. To assess the testing coverage of the requirements for the unit, use the metrics in the Simulation Test Result Analysis section of the Model Testing Dashboard.

The following checklist is provided to facilitate test results analysis and review using the dashboard. For each question, perform the review activity using the corresponding dashboard metric and apply the corresponding fix. This checklist is provided as an example and should be reviewed and modified to meet your application needs.

| Checklist Item | Review Activity | Dashboard Metric | Fix |

|---|---|---|---|

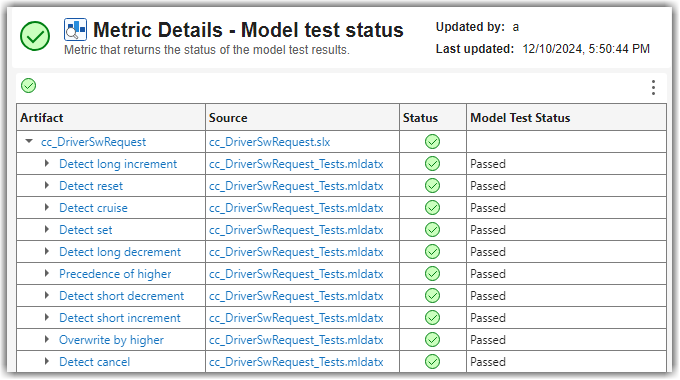

| 1 — Does each test result trace to a test? | Use only test results that appear in the dashboard. Test results that do not trace to a test do not appear in the dashboard. Click a widget in the Model Test Status section to view a table of the tests and the results that trace to them. | Model Test Status

Metric ID —

For more information, see Model Test Status Distribution. | Open the metric details and click the hyperlink in the Artifacts column to open the test in the Test Manager. Re-run the tests that the results should trace to and export the new results. |

| 2 — Does each test trace to a test result? | Check that zero tests are untested and zero tests are disabled. | Model Test Status

Metric ID —

For more information, see Model Test Status Distribution. | For each disabled or untested test, in the Test Manager, enable and run the test. |

| 3 — Have all tests been executed? | Check that zero tests are untested and zero tests are disabled. | Model Test Status

Metric ID —

For more information, see Model Test Status Distribution. | For each disabled or untested test, in the Test Manager, enable, and run the test. |

| 4 — Do all tests pass? | Check that 100% of the tests for the unit passed. | Model Test Status

Metric ID —

For more information, see Model Test Status Distribution. | For each test failure, review the failure in the Test Manager and fix the corresponding test or design element in the model. |

| 5 — Do all test results include coverage results? | Manually review each test result in the Test Manager to check that it includes coverage results. | Not applicable | For each test result that does not include coverage, open the test in the Test Manager, and then enable coverage collection. Run the test again. |

| 6 — Were the required structural coverage objectives achieved for each unit? | Check that the tests achieved 100% model coverage for the coverage types that your unit testing requires. To determine the required coverage types, consider the safety level of your software unit and use table 9 in clause 9.4.4 of ISO 26262-6:2018. | Model Coverage

Metric ID —

For more information, see Model Coverage Breakdown. | For each design element that is not covered, analyze to determine the cause of the missed coverage. Analysis can reveal shortcomings in tests, requirements, or implementation. If appropriate, add tests to cover the element. Alternatively, add a justification filter that justifies the missed coverage, as described in Create, Edit, and View Coverage Filter Rules (Simulink Coverage). |

| 7 — Does all of the overall achieved coverage come from requirements-based tests? | Check that 100% of the overall achieved coverage comes from requirements-based tests. | View the metric results in the Achieved Coverage Ratio subsection of the dashboard. Click the widgets under Requirements-Based Tests for information on the source of the overall achieved coverage for each coverage type.

For the associated metric IDs, see Requirements-Based Tests. | For any overall achieved coverage that does not come from requirements-based tests, add links to the requirements that the tests verify. |

| 8 — Does the overall achieved coverage come from tests that properly test the unit? | Manually review the content for each test. Check the percentage of the overall achieved coverage that comes from unit-boundary tests. | View the metric results in the Achieved Coverage Ratio subsection of the dashboard. Click the widgets under Unit-Boundary Tests for information on the source of the overall achieved coverage for each coverage type.

For the associated metric IDs, see Unit-Boundary Tests. | For any overall achieved coverage that does not come from unit-boundary tests, either add a test that tests the whole unit or reconsider the unit-model definition. |

| 9 — Have shortcomings been acceptably justified? | Manually review coverage justifications. Click a bar in the Model Coverage section to view a table of the results for the corresponding coverage type. To open a test result in the Test Manager for further review, click the hyperlink in the Artifacts column. | Model Coverage

Metric ID —

For more information, see Model Coverage Breakdown. | For each coverage gap that is not acceptably justified, update the justification of missing coverage. Alternatively, add tests to cover the gap. |
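Checklist items 2 through 4 reduce to counting tests by status: no untested tests, no disabled tests, and 100% passed. The sketch below shows that check on hypothetical status data. It is an illustration only, not the dashboard's metric implementation. The test names are invented, and the status labels simply mirror the wording used in the checklist.

```python
from collections import Counter

# Hypothetical status for each test of one unit.
test_status = {
    "test_activate": "passed",
    "test_cancel": "failed",
    "test_resume": "untested",
    "test_limits": "disabled",
}


def review_test_status(status_by_test):
    """Return the percentage of passed tests and the tests that block compliance."""
    counts = Counter(status_by_test.values())
    total = len(status_by_test)
    passed_pct = 100.0 * counts["passed"] / total if total else 0.0

    findings = []
    for status in ("untested", "disabled", "failed"):
        names = sorted(t for t, s in status_by_test.items() if s == status)
        if names:
            findings.append((status, names))
    return passed_pct, findings


passed_pct, findings = review_test_status(test_status)
print(f"Passed: {passed_pct:.1f}%")
for status, names in findings:
    print(f"{status}: {', '.join(names)}")
```

An empty findings list together with a 100% pass rate corresponds to a compliant result for items 2 through 4. The coverage items (5 through 9) still require the coverage results and the manual reviews described in the table.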

Unit Verification in Accordance with ISO 26262

The Model Testing Dashboard provides information about the quality and completeness of your unit requirements-based testing activities. To comply with ISO 26262-6:2018, you must also test your software at other architectural levels. ISO 26262-6:2018 describes compliance requirements for these testing levels:

Software unit testing in Table 7, method 1j

Software integration testing in Table 10, method 1a

Embedded software testing in Table 14, method 1a

The generic verification process detailed in ISO 26262-8:2018, clause 9 includes additional information on how you can systematically achieve testing for each of these levels by using planning, specification, execution, evaluation, and documentation of tests. This table shows how the Model Testing Dashboard applies to the requirements in ISO 26262-8:2018, clause 9 for the unit testing level, and lists the complementary activities that you must perform to show compliance.

| Requirement | Compliance Argument | Complementary Activities |
|---|---|---|
| 9.4.1 — Scope of verification activity | The Model Testing Dashboard applies to all safety-related and non-safety-related software units. | Not applicable |
| 9.4.2 — Verification methods | The Model Testing Dashboard provides a summary on the completion of requirements-based testing (Table 7, method 1j) including a view on test results. | Where applicable, apply one or more of these other verification methods: |
| 9.4.3 — Methods for deriving test cases | The Model Testing Dashboard provides several ways to traverse the software unit requirements and the relevant tests, which helps you to derive tests from the requirements. | You can also derive tests by using other tools, such as Simulink® Design Verifier™. |
| 9.4.4 — Requirement and structural coverage | The Model Testing Dashboard aids in showing: | The dashboard provides structural coverage only at the model level. You can use other tools to track the structural coverage at the code level. |
| 9.4.5 — Test environment | The Model Testing Dashboard aids in requirements-based testing at the model level. | Apply back-to-back comparison tests to verify that the behavior of the model is equivalent to the generated code. |

References:

ISO 26262-4:2018(en) Road vehicles — Functional safety — Part 4: Product development at the system level, International Organization for Standardization

ISO 26262-6:2018(en) Road vehicles — Functional safety — Part 6: Product development at the software level, International Organization for Standardization

ISO 26262-8:2018(en) Road vehicles — Functional safety — Part 8: Supporting processes, International Organization for Standardization