Embedded AI Integration with MATLAB and Simulink

Overview

This webinar is a guide to the essentials of incorporating AI models built for deployment to microcontrollers (MCUs) into products designed with MATLAB and Simulink. It is aimed at engineers integrating artificial intelligence into MCU-enabled products who want to streamline three tasks: importing AI models, testing and verifying networks within their existing models, and generating deployment-ready code.

Many resources cover individual aspects of AI integration, such as compression or deployment. This webinar instead presents a complete workflow: engineers directly import AI models already designed for MCUs, thoroughly test the integrated network, and generate the code needed for deployment onto embedded devices without manual coding.

This end-to-end approach shortens the development cycle and helps engineers deploy AI more reliably and efficiently.

Highlights

- Engineers will be able to incorporate AI into their Simulink models with confidence that it will function as intended when deployed in their products.

- Testing and verifying the robustness of the AI network within their models is simplified, allowing direct and efficient validation of the network's performance.

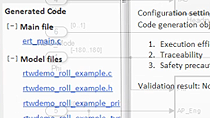

- Generation of deployment-ready code is automated, so engineers can move rapidly from model to MCU deployment without manually translating the model into code.

About the Presenter

Reed Axman is a Strategic Partner Specialist at MathWorks, where he focuses on AI deployment workflows to MCUs from providers such as STMicroelectronics, Texas Instruments, Infineon, and NXP. Reed works with MathWorks partners and internal MathWorks teams to enable customers looking to add embedded AI capabilities to their products. He holds a master's degree in Robotics and Artificial Intelligence from Arizona State University, where he researched medical soft robotics and AI control methods for soft robots.

Recorded: 26 Sep 2024