Optimization of Mixed-Signal ICs with MATLAB

Overview

As analog mixed-signal integrated circuits (ICs) become increasingly complex, gaining deep insights and exploring innovative ideas are crucial for success. We will demonstrate how to combine MATLAB and Cadence Virtuoso to improve the design workflow and optimize the performance of your IC.

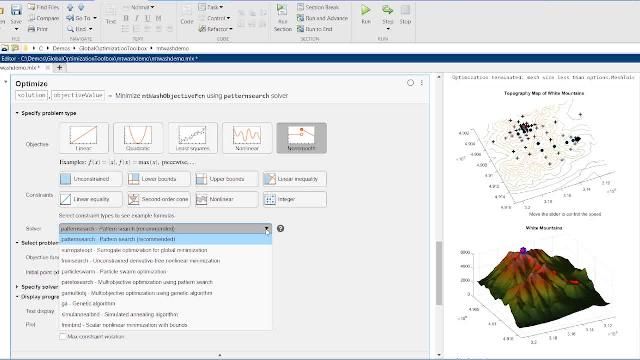

Using practical examples, we will show how to take control of design variables and parameters in Cadence Virtuoso directly from MATLAB. Run simulations programmatically, analyze the design space, and use surrogate optimization methods to improve the performance of transistor-level IC designs.

You will learn how to import waveforms and metrics from Cadence Virtuoso directly into MATLAB and leverage advanced analysis functions. Discover how to measure phase noise, identify poles and zeros, fit curves with various algorithms, and even apply your favorite MATLAB functions for in-depth analysis. Automation will be a key focus: we will show how to speed up recurring tasks, facilitate design space exploration, and generate comprehensive reports for collaboration with your colleagues.

Finally, we will export models from Simulink to behavioral languages such as SystemVerilog and Verilog-A for IC design and verification. Use MATLAB to rapidly develop digital signal processing algorithms that control and calibrate the impairments of your IC implementation and optimize the performance of your mixed-signal system.

Highlights

- Explore the IC design space and control Cadence Virtuoso simulations from MATLAB

- Import waveforms and metrics from Cadence Virtuoso

- Analyze trends and generate reports from IC simulation results

- Optimize transistor-level design using surrogate optimization methods

- Export SystemVerilog and Verilog-A models from Simulink for IC integration and verification

About the Presenter

Giorgia Zucchelli is the product manager for RF and mixed-signal at MathWorks. Before moving to this role in 2012, she was an application engineer focusing on signal processing and communications systems and specializing in analog simulation. Before joining MathWorks in 2009, Giorgia worked at NXP Semiconductors on mixed-signal verification methodologies and at Philips Research developing system-level models for innovative telecommunication systems. Giorgia has a master’s degree in electronic engineering and a doctorate in electronics for telecommunications from the University of Bologna. Her thesis dealt with modeling high-frequency RF devices.

Recorded: 17 Apr 2024