Classify Images on FPGA by Using Quantized GoogLeNet Network

This example shows how to use the Deep Learning HDL Toolbox™ to deploy a quantized GoogLeNet network to classify an image. The example uses the pretrained GoogLeNet network to demonstrate transfer learning, quantization, and deployment of the quantized network. Quantization reduces the memory requirement of a deep neural network by converting the weights, biases, and activations of the network layers to 8-bit scaled integer data types. Use MATLAB® to retrieve the prediction results.
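As a rough illustration of what an 8-bit scaled integer representation means, the following sketch quantizes a few made-up weight values by using a power-of-two scale factor. The values and the scale selection rule are assumptions chosen for illustration; the scheme that dlquantizer applies internally may differ.

```matlab
% Illustrative sketch only: quantize values to int8 with a power-of-two scale.
% The weights below are made-up numbers, not GoogLeNet parameters.
w = single([-0.82 0.05 0.31 1.27]);

% Pick the smallest power-of-two scale that keeps max|w| within 127 steps.
scaleExp = ceil(log2(max(abs(w)) / 127));
scale = 2^scaleExp;

% Quantize: divide by the scale, round, and store as int8.
q = int8(round(w / scale));

% Dequantize to see the approximation that the integer values represent.
wHat = single(q) * scale;
maxErr = max(abs(w - wHat));
fprintf('scale = 2^%d, max reconstruction error = %g\n', scaleExp, maxErr);
```

Because none of the values saturate, the reconstruction error stays within half of one scale step. Each value occupies one byte instead of the four bytes of a single-precision value.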

Deploy the quantized GoogLeNet network by creating a dlhdl.Workflow object. Use the dlhdl.Workflow object to:

Generate a list of instructions, weights, and biases by using the compile method.

Generate a programming file for the FPGA by using the deploy method.

Retrieve the network prediction results and performance by using the predict method.

GoogLeNet has been trained on over a million images and can classify images into 1000 object categories (such as keyboard, coffee mug, pencil, and many animals). The network has learned rich feature representations for a wide range of images. The network takes an image as input, and then outputs a label for the object in the image together with the probabilities for each of the object categories.

Prerequisites

Intel® Arria10 SoC development kit

Transfer Learning Using GoogLeNet

To perform classification on a new set of images, you fine-tune a pretrained GoogLeNet convolutional neural network by transfer learning. In transfer learning, you can take a pretrained network and use it as a starting point to learn a new task. Fine-tuning a network with transfer learning is usually much faster and easier than training a network with randomly initialized weights from scratch. You can quickly transfer learned features to a new task using a smaller number of training images.

Load Pretrained DAG Network

Load the pretrained dlnetwork, GoogLeNet.

net = imagePretrainedNetwork('googlenet');

Use the analyzeNetwork function to obtain information about the network layers.

analyzeNetwork(net);

The first layer, the image input layer, requires input images of size 224-by-224-by-3, where 3 is the number of color channels.

inputSize = net.Layers(1).InputSize

inputSize = 1×3

224 224 3

Define Training and Validation Data Sets

This example uses the MathWorks® MerchData data set. This is a small data set containing 75 images of MathWorks merchandise, belonging to five different classes (cap, cube, playing cards, screwdriver, and torch).

unzip('MerchData.zip');
imds = imageDatastore('MerchData', ...
    'IncludeSubfolders',true, ...
    'LabelSource','foldernames');

Divide the data into training and validation data sets. Use 70% of the images for training and 30% for validation. The splitEachLabel function splits the image datastore into two new datastores.

[imdsTrain,imdsValidation] = splitEachLabel(imds,0.7,'randomized');

This data set now contains 55 training images and 20 validation images. Display some sample images.

numTrainImages = numel(imdsTrain.Labels);
idx = randperm(numTrainImages,16);
figure
for i = 1:16
    subplot(4,4,i)
    I = readimage(imdsTrain,idx(i));
    imshow(I)
end
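To check the split sizes quoted above, one option is to count the images per class in each datastore. This is a minimal sketch that assumes the imdsTrain and imdsValidation variables created by the splitEachLabel call are in the workspace.

```matlab
% Assumes imdsTrain and imdsValidation exist from the splitEachLabel call.
% countEachLabel returns a table with one row per class label.
trainCounts = countEachLabel(imdsTrain)
valCounts = countEachLabel(imdsValidation)

% Total number of images in each split.
numTrain = sum(trainCounts.Count)
numVal = sum(valCounts.Count)
```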

Replace Final Layers

The fully connected layer of the pretrained network net is configured for 1000 classes. This layer, loss3-classifier in GoogLeNet, contains information on how to combine the features that the network extracts into class probabilities. To retrain a pretrained network to classify new images, replace this layer with a new layer adapted to the data set.

Replace the fully connected layer with a new fully connected layer whose number of outputs equals the number of classes. To make learning faster in the new layer than in the transferred layers, increase the WeightLearnRateFactor and BiasLearnRateFactor values of the fully connected layer.

numClasses = numel(categories(imdsTrain.Labels))

numClasses = 5

Remove 'loss3-classifier' from the network and replace it with a new fully connected layer.

newLearnableLayer = fullyConnectedLayer(numClasses, ...
    'WeightLearnRateFactor',20, ...
    'BiasLearnRateFactor',20, ...
    'Name','newFC');
net = replaceLayer(net,'loss3-classifier',newLearnableLayer);

Train Network

The network requires input images of size 224-by-224-by-3, but the images in the image datastores have different sizes. Use an augmented image datastore to automatically resize the training images. Specify additional augmentation operations to perform on the training images: randomly flip the training images along the vertical axis, and randomly translate them up to 30 pixels horizontally and vertically. Data augmentation helps prevent the network from overfitting and memorizing the exact details of the training images.

pixelRange = [-30 30];
imageAugmenter = imageDataAugmenter( ...
    'RandXReflection',true, ...
    'RandXTranslation',pixelRange, ...
    'RandYTranslation',pixelRange);
augimdsTrain = augmentedImageDatastore(inputSize(1:2),imdsTrain, ...
    'DataAugmentation',imageAugmenter);

To automatically resize the validation images without performing further data augmentation, use an augmented image datastore without specifying any additional preprocessing operations.

augimdsValidation = augmentedImageDatastore(inputSize(1:2),imdsValidation);

Specify the training options. For transfer learning, keep the features from the early layers of the pretrained network (the transferred layer weights). To slow down learning in the transferred layers, set the initial learning rate to a small value. In the previous step, the learning rate factors were increased for the fully connected layer to speed up learning in the new final layers. This combination of learning rate settings results in fast learning only in the new layers and slower learning in the other layers. When performing transfer learning, you do not need to train for as many epochs. An epoch is a full training cycle on the entire training data set. Specify the mini-batch size to be 11. The software validates the network every ValidationFrequency iterations during training.

options = trainingOptions('sgdm', ...
    'MiniBatchSize',11, ...
    'MaxEpochs',5, ...
    'InitialLearnRate',2e-4, ...
    'Shuffle','every-epoch', ...
    'ValidationData',augimdsValidation, ...
    'ValidationFrequency',3, ...
    'Verbose',false, ...
    'Plots','training-progress');
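The arithmetic behind these settings can be sketched as follows. The numbers come from the trainingOptions call and the newFC layer definition above. The learn rate factor of 1 for the transferred layers is an assumption; the pretrained layers may carry other factors.

```matlab
% Values taken from the training options and the newFC layer in this example.
initialLearnRate = 2e-4;
newLayerFactor = 20;      % WeightLearnRateFactor and BiasLearnRateFactor of newFC
assumedBaseFactor = 1;    % assumption: transferred layers use a factor of 1

% The effective learning rate of a layer is the global rate times its factor.
transferredRate = initialLearnRate * assumedBaseFactor;
newLayerRate = initialLearnRate * newLayerFactor;

% Iterations implied by the data set size and mini-batch size.
numTrainImages = 55;
miniBatchSize = 11;
maxEpochs = 5;
iterationsPerEpoch = floor(numTrainImages / miniBatchSize);
totalIterations = iterationsPerEpoch * maxEpochs;

fprintf('Transferred layers: %g, new layer: %g\n', transferredRate, newLayerRate);
fprintf('%d iterations per epoch, %d iterations in total\n', ...
    iterationsPerEpoch, totalIterations);
```

Under these assumptions, the new layer learns 20 times faster than the transferred layers, and training runs for 25 iterations.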

Train the network that consists of the transferred and new layers. By default, trainnet uses a GPU if one is available (requires Parallel Computing Toolbox™ and a supported GPU device). Otherwise, the network uses a CPU (requires MATLAB Coder™ Interface for Deep Learning Libraries™). You can also specify the execution environment by using the 'ExecutionEnvironment' name-value argument of trainingOptions.

netTransfer = trainnet(augimdsTrain,net,'crossentropy',options);
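Before quantizing, it can be useful to record the floating-point accuracy on the validation set as a baseline. The following is a minimal sketch, not part of the original workflow, that assumes the netTransfer, augimdsValidation, imdsValidation, and imdsTrain variables from the previous steps are in the workspace.

```matlab
% Assumes netTransfer, augimdsValidation, imdsValidation, and imdsTrain exist.
classNames = categories(imdsTrain.Labels);

% Compute class scores for the resized validation images.
scores = minibatchpredict(netTransfer,augimdsValidation);

% Convert scores to labels and compare them against the true labels.
YPred = scores2label(scores,classNames);
accuracy = mean(YPred == imdsValidation.Labels)
```

With only 20 validation images, each misclassified image changes the accuracy by five percentage points, so treat the result as a coarse check.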

Create dlquantizer Object

Create a quantized network by using the dlquantizer object. Set the target execution environment to FPGA.

dlQuantObj = dlquantizer(netTransfer,'ExecutionEnvironment','FPGA');

Calibrate Quantized Network

Use the calibrate function to exercise the network by using sample inputs to collect the range information. The calibrate function exercises the network and collects the dynamic ranges for the learnable parameters of the convolution and fully connected layers of the network.

For best quantization results, the calibration data must be representative of the actual inputs that the network will predict on.

dlQuantObj.calibrate(augimdsTrain);
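To inspect the collected ranges, the same call can return its results. This sketch assumes that dlQuantObj and augimdsTrain exist in the workspace and that calibrate returns a table of per-layer range statistics; check the table contents in your release before relying on specific column names.

```matlab
% Assumes dlQuantObj and augimdsTrain exist from the previous steps.
% Capture the calibration statistics instead of discarding them.
calResults = calibrate(dlQuantObj,augimdsTrain);

% Show the first few rows of the collected range information.
head(calResults)
```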

Set Up Intel Quartus Prime Standard

Set the synthesis tool path to point to an installed Intel Quartus® Prime Standard Edition 20.1 executable file. You must have already installed Altera® Quartus II.

% hdlsetuptoolpath('ToolName','Altera Quartus II','ToolPath','C:\intel\20.1\quartus\bin\quartus.exe');

Create Target Object

Create a target object with a custom name for your target device and an interface to connect your target device to the host computer. Interface options are JTAG and Ethernet.

hTarget = dlhdl.Target('Intel','Interface','JTAG');
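The deployment log in this example reports an Ethernet connection to the board. A target object for that interface might look like the following sketch. The IP address is a hypothetical placeholder, not a value from this example; substitute the address of your board.

```matlab
% Alternative target definition that uses Ethernet instead of JTAG.
% '192.168.1.101' is a placeholder address chosen for illustration only.
hTarget = dlhdl.Target('Intel', ...
    'Interface','Ethernet', ...
    'IPAddress','192.168.1.101');
```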

Generate Bitstream to Run Network

The GoogLeNet network contains multiple cross channel normalization layers. To support these layers on hardware, turn on the 'LRNBlockGeneration' property of the conv module in the bitstream used for FPGA inference. The shipping arria10soc_int8 bitstream does not have the 'LRNBlockGeneration' property turned on. Generate a new bitstream by using the following lines of code, then use the generated bitstream with a workflow object for inference.

Update the processor configuration with 'LRNBlockGeneration' property turned on and 'SegmentationBlockGeneration' property turned off. Turn off 'SegmentationBlockGeneration' to fit the Deep Learning IP on the FPGA and avoid overutilization of resources.

% hPC = dlhdl.ProcessorConfig('Bitstream', 'arria10soc_int8');
% hPC.setModuleProperty('conv', 'LRNBlockGeneration', 'on');
% hPC.setModuleProperty('conv', 'SegmentationBlockGeneration', 'off');
% dlhdl.buildProcessor(hPC)

To learn how to use the generated bitstream file, see Generate Custom Bitstream.

Create Workflow Object

Create an object of the dlhdl.Workflow class. Specify dlQuantObj as the network. Make sure to use the generated bitstream, which enables processing of cross channel normalization layers on the FPGA. In this example, the target FPGA board is the Intel Arria10 SoC board and the generated bitstream uses the int8 data type.

hW = dlhdl.Workflow('network', dlQuantObj, 'Bitstream', 'dlprocessor.sof','Target',hTarget);

Compile Workflow Object

To compile the GoogLeNet network, run the compile function of the dlhdl.Workflow object.

dn = hW.compile

### Compiling network for Deep Learning FPGA prototyping ...

### Targeting FPGA bitstream /home/bamorin/Documents/MATLAB/ExampleManager/bamorin.InvestigateFailures/deeplearning_shared-ex40925327/dlprocessor.sof.

### An output layer called 'Output1_prob' of type 'nnet.cnn.layer.RegressionOutputLayer' has been added to the provided network. This layer performs no operation during prediction and thus does not affect the output of the network.

### The network includes the following layers:

### Notice: The layer 'data' with type 'nnet.cnn.layer.ImageInputLayer' is implemented in software.

### Notice: The layer 'prob' with type 'nnet.cnn.layer.SoftmaxLayer' is implemented in software.

### Notice: The layer 'Output1_prob' with type 'nnet.cnn.layer.RegressionOutputLayer' is implemented in software.

### Compiling layer group: conv1-7x7_s2>>pool2-3x3_s2 ...

### Compiling layer group: conv1-7x7_s2>>pool2-3x3_s2 ... complete.

### Compiling layer group: inception_3a-1x1>>inception_3a-relu_1x1 ...

### Compiling layer group: inception_3a-1x1>>inception_3a-relu_1x1 ... complete.

### Compiling layer group: inception_3a-3x3_reduce>>inception_3a-relu_3x3 ...

### Compiling layer group: inception_3a-3x3_reduce>>inception_3a-relu_3x3 ... complete.

### Compiling layer group: inception_3a-5x5_reduce>>inception_3a-relu_5x5 ...

### Compiling layer group: inception_3a-5x5_reduce>>inception_3a-relu_5x5 ... complete.

### Compiling layer group: inception_3a-pool>>inception_3a-relu_pool_proj ...

### Compiling layer group: inception_3a-pool>>inception_3a-relu_pool_proj ... complete.

### Compiling layer group: inception_3b-1x1>>inception_3b-relu_1x1 ...

### Compiling layer group: inception_3b-1x1>>inception_3b-relu_1x1 ... complete.

### Compiling layer group: inception_3b-3x3_reduce>>inception_3b-relu_3x3 ...

### Compiling layer group: inception_3b-3x3_reduce>>inception_3b-relu_3x3 ... complete.

### Compiling layer group: inception_3b-5x5_reduce>>inception_3b-relu_5x5 ...

### Compiling layer group: inception_3b-5x5_reduce>>inception_3b-relu_5x5 ... complete.

### Compiling layer group: inception_3b-pool>>inception_3b-relu_pool_proj ...

### Compiling layer group: inception_3b-pool>>inception_3b-relu_pool_proj ... complete.

### Compiling layer group: pool3-3x3_s2 ...

### Compiling layer group: pool3-3x3_s2 ... complete.

### Compiling layer group: inception_4a-1x1>>inception_4a-relu_1x1 ...

### Compiling layer group: inception_4a-1x1>>inception_4a-relu_1x1 ... complete.

### Compiling layer group: inception_4a-3x3_reduce>>inception_4a-relu_3x3 ...

### Compiling layer group: inception_4a-3x3_reduce>>inception_4a-relu_3x3 ... complete.

### Compiling layer group: inception_4a-5x5_reduce>>inception_4a-relu_5x5 ...

### Compiling layer group: inception_4a-5x5_reduce>>inception_4a-relu_5x5 ... complete.

### Compiling layer group: inception_4a-pool>>inception_4a-relu_pool_proj ...

### Compiling layer group: inception_4a-pool>>inception_4a-relu_pool_proj ... complete.

### Compiling layer group: inception_4b-1x1>>inception_4b-relu_1x1 ...

### Compiling layer group: inception_4b-1x1>>inception_4b-relu_1x1 ... complete.

### Compiling layer group: inception_4b-3x3_reduce>>inception_4b-relu_3x3 ...

### Compiling layer group: inception_4b-3x3_reduce>>inception_4b-relu_3x3 ... complete.

### Compiling layer group: inception_4b-5x5_reduce>>inception_4b-relu_5x5 ...

### Compiling layer group: inception_4b-5x5_reduce>>inception_4b-relu_5x5 ... complete.

### Compiling layer group: inception_4b-pool>>inception_4b-relu_pool_proj ...

### Compiling layer group: inception_4b-pool>>inception_4b-relu_pool_proj ... complete.

### Compiling layer group: inception_4c-1x1>>inception_4c-relu_1x1 ...

### Compiling layer group: inception_4c-1x1>>inception_4c-relu_1x1 ... complete.

### Compiling layer group: inception_4c-3x3_reduce>>inception_4c-relu_3x3 ...

### Compiling layer group: inception_4c-3x3_reduce>>inception_4c-relu_3x3 ... complete.

### Compiling layer group: inception_4c-5x5_reduce>>inception_4c-relu_5x5 ...

### Compiling layer group: inception_4c-5x5_reduce>>inception_4c-relu_5x5 ... complete.

### Compiling layer group: inception_4c-pool>>inception_4c-relu_pool_proj ...

### Compiling layer group: inception_4c-pool>>inception_4c-relu_pool_proj ... complete.

### Compiling layer group: inception_4d-1x1>>inception_4d-relu_1x1 ...

### Compiling layer group: inception_4d-1x1>>inception_4d-relu_1x1 ... complete.

### Compiling layer group: inception_4d-3x3_reduce>>inception_4d-relu_3x3 ...

### Compiling layer group: inception_4d-3x3_reduce>>inception_4d-relu_3x3 ... complete.

### Compiling layer group: inception_4d-5x5_reduce>>inception_4d-relu_5x5 ...

### Compiling layer group: inception_4d-5x5_reduce>>inception_4d-relu_5x5 ... complete.

### Compiling layer group: inception_4d-pool>>inception_4d-relu_pool_proj ...

### Compiling layer group: inception_4d-pool>>inception_4d-relu_pool_proj ... complete.

### Compiling layer group: inception_4e-1x1>>inception_4e-relu_1x1 ...

### Compiling layer group: inception_4e-1x1>>inception_4e-relu_1x1 ... complete.

### Compiling layer group: inception_4e-3x3_reduce>>inception_4e-relu_3x3 ...

### Compiling layer group: inception_4e-3x3_reduce>>inception_4e-relu_3x3 ... complete.

### Compiling layer group: inception_4e-5x5_reduce>>inception_4e-relu_5x5 ...

### Compiling layer group: inception_4e-5x5_reduce>>inception_4e-relu_5x5 ... complete.

### Compiling layer group: inception_4e-pool>>inception_4e-relu_pool_proj ...

### Compiling layer group: inception_4e-pool>>inception_4e-relu_pool_proj ... complete.

### Compiling layer group: pool4-3x3_s2 ...

### Compiling layer group: pool4-3x3_s2 ... complete.

### Compiling layer group: inception_5a-1x1>>inception_5a-relu_1x1 ...

### Compiling layer group: inception_5a-1x1>>inception_5a-relu_1x1 ... complete.

### Compiling layer group: inception_5a-3x3_reduce>>inception_5a-relu_3x3 ...

### Compiling layer group: inception_5a-3x3_reduce>>inception_5a-relu_3x3 ... complete.

### Compiling layer group: inception_5a-5x5_reduce>>inception_5a-relu_5x5 ...

### Compiling layer group: inception_5a-5x5_reduce>>inception_5a-relu_5x5 ... complete.

### Compiling layer group: inception_5a-pool>>inception_5a-relu_pool_proj ...

### Compiling layer group: inception_5a-pool>>inception_5a-relu_pool_proj ... complete.

### Compiling layer group: inception_5b-1x1>>inception_5b-relu_1x1 ...

### Compiling layer group: inception_5b-1x1>>inception_5b-relu_1x1 ... complete.

### Compiling layer group: inception_5b-3x3_reduce>>inception_5b-relu_3x3 ...

### Compiling layer group: inception_5b-3x3_reduce>>inception_5b-relu_3x3 ... complete.

### Compiling layer group: inception_5b-5x5_reduce>>inception_5b-relu_5x5 ...

### Compiling layer group: inception_5b-5x5_reduce>>inception_5b-relu_5x5 ... complete.

### Compiling layer group: inception_5b-pool>>inception_5b-relu_pool_proj ...

### Compiling layer group: inception_5b-pool>>inception_5b-relu_pool_proj ... complete.

### Compiling layer group: pool5-7x7_s1 ...

### Compiling layer group: pool5-7x7_s1 ... complete.

### Compiling layer group: newFC ...

### Compiling layer group: newFC ... complete.

### Allocating external memory buffers:

          offset_name          offset_address     allocated_space
    _______________________    ______________    ________________

    "InputDataOffset"           "0x00000000"     "11.5 MB"
    "OutputResultOffset"        "0x00b7c000"     "4.0 kB"
    "SchedulerDataOffset"       "0x00b7d000"     "656.0 kB"
    "SystemBufferOffset"        "0x00c21000"     "1.6 MB"
    "InstructionDataOffset"     "0x00dae000"     "5.1 MB"
    "ConvWeightDataOffset"      "0x012ca000"     "26.3 MB"
    "FCWeightDataOffset"        "0x02d12000"     "20.0 kB"
    "EndOffset"                 "0x02d17000"     "Total: 45.1 MB"

### Network compilation complete.

dn = struct with fields:

weights: [1×1 struct]

instructions: [1×1 struct]

registers: [1×1 struct]

syncInstructions: [1×1 struct]

constantData: {}

ddrInfo: [1×1 struct]

resourceTable: [6×2 table]
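The allocated sizes in the memory buffer table follow directly from the differences between consecutive offset addresses. The following sketch reproduces that arithmetic from the addresses printed in the compiler output above; the variable names are illustrative.

```matlab
% Offset addresses copied from the compiler output, in the order printed.
names = {'InputData','OutputResult','SchedulerData','SystemBuffer', ...
    'InstructionData','ConvWeightData','FCWeightData'};
offsets = hex2dec({'00000000','00b7c000','00b7d000','00c21000', ...
    '00dae000','012ca000','02d12000','02d17000'});

% Each buffer extends from its own offset to the next offset.
sizesBytes = diff(offsets);
totalMB = offsets(end) / 2^20;

for k = 1:numel(names)
    fprintf('%-16s %10.1f kB\n', names{k}, sizesBytes(k) / 2^10);
end
fprintf('Total: %.1f MB\n', totalMB);
```

The convolution weights account for more than half of the 45.1 MB total, which shows where the 8-bit representation saves the most memory.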

Program Bitstream onto FPGA and Download Network Weights

To deploy the network on the Intel Arria10 SoC hardware, run the deploy function of the dlhdl.Workflow object. This function uses the output of the compile function to program the FPGA board by using the programming file. The function also downloads the network weights and biases. The deploy function starts programming the FPGA device and displays progress messages and the time it takes to deploy the network.

hW.deploy

### Programming FPGA Bitstream using Ethernet...
### Attempting to connect to the hardware board at 172.21.89.235...
### Connection successful
### Programming FPGA device on Intel SoC hardware board at 172.21.89.235...
### Attempting to connect to the hardware board at 172.21.89.235...
### Connection successful
### Copying FPGA programming files to SD card...
### Setting FPGA bitstream and devicetree for boot...
WARNING: Uboot script u-boot.scr detected, this may override boot settings
# Copying Bitstream dlprocessor.core.rbf to /mnt/hdlcoder_rd
# Set Bitstream to hdlcoder_rd/dlprocessor.core.rbf
# Copying Devicetree devicetree_dlhdl.dtb to /mnt/hdlcoder_rd
# Set Devicetree to hdlcoder_rd/devicetree_dlhdl.dtb
# Set up boot for Reference Design: 'LIBIIO CNN system with 3 AXI4 Master'
### Rebooting Intel SoC at 172.21.89.235...
### Reboot may take several seconds...
### Attempting to connect to the hardware board at 172.21.89.235...
### Connection successful
### Programming the FPGA bitstream has been completed successfully.
### Loading weights to Conv Processor.
### Conv Weights loaded. Current time is 30-Aug-2024 17:20:42
### Loading weights to FC Processor.
### FC Weights loaded. Current time is 30-Aug-2024 17:20:42

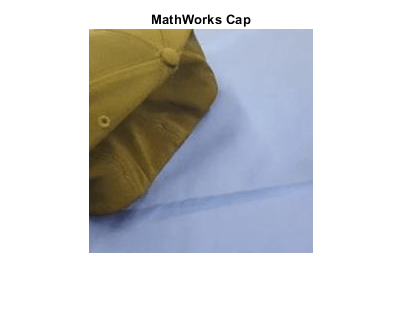

Load Example Image

I = imresize(readimage(imdsValidation,1),[224 224]);
figure
imshow(I)

Retrieve Image Prediction

Execute the predict function of the dlhdl.Workflow object and display the prediction results.

I = dlarray(single(I), 'SSCB');
[prediction, ~] = hW.predict(I,'Profile','off');

### Finished writing input activations.
### Running single input activation.

label = scores2label(prediction,categories(imdsTrain.Labels))

label = categorical

MathWorks Cap

title(string(label));

Retrieve Deployed Network Performance

View the performance of the deployed network by using the predict method with the Profile argument set to on.

[~, speed] = hW.predict(I,'Profile','on')

### Finished writing input activations.

### Running single input activation.

Deep Learning Processor Profiler Performance Results

LastFrameLatency(cycles) LastFrameLatency(seconds) FramesNum Total Latency Frames/s

------------- ------------- --------- --------- ---------

Network 15759503 0.10506 1 15768622 9.5

conv1-7x7_s2 1140266 0.00760

pool1-3x3_s2 226586 0.00151

pool1-norm1 311786 0.00208

conv2-3x3_reduce 278554 0.00186

conv2-3x3 826869 0.00551

conv2-norm2 951786 0.00635

pool2-3x3_s2 208085 0.00139

inception_3a-1x1 198671 0.00132

inception_3a-3x3_reduce 282091 0.00188

inception_3a-3x3 196937 0.00131

inception_3a-5x5_reduce 74040 0.00049

inception_3a-5x5 35333 0.00024

inception_3a-pool 94565 0.00063

inception_3a-pool_proj 115441 0.00077

inception_3b-1x1 653551 0.00436

inception_3b-3x3_reduce 654292 0.00436

inception_3b-3x3 368280 0.00246

inception_3b-5x5_reduce 217445 0.00145

inception_3b-5x5 179163 0.00119

inception_3b-pool 179780 0.00120

inception_3b-pool_proj 362604 0.00242

pool3-3x3_s2 196154 0.00131

inception_4a-1x1 336707 0.00224

inception_4a-3x3_reduce 184070 0.00123

inception_4a-3x3 84818 0.00057

inception_4a-5x5_reduce 56369 0.00038

inception_4a-5x5 14416 0.00010

inception_4a-pool 77393 0.00052

inception_4a-pool_proj 132997 0.00089

inception_4b-1x1 303833 0.00203

inception_4b-3x3_reduce 222853 0.00149

inception_4b-3x3 103657 0.00069

inception_4b-5x5_reduce 73094 0.00049

inception_4b-5x5 25836 0.00017

inception_4b-pool 82589 0.00055

inception_4b-pool_proj 141626 0.00094

inception_4c-1x1 249712 0.00166

inception_4c-3x3_reduce 249732 0.00166

inception_4c-3x3 130976 0.00087

inception_4c-5x5_reduce 73554 0.00049

inception_4c-5x5 26077 0.00017

inception_4c-pool 82597 0.00055

inception_4c-pool_proj 140968 0.00094

inception_4d-1x1 223088 0.00149

inception_4d-3x3_reduce 277099 0.00185

inception_4d-3x3 161609 0.00108

inception_4d-5x5_reduce 86784 0.00058

inception_4d-5x5 32811 0.00022

inception_4d-pool 83197 0.00055

inception_4d-pool_proj 141023 0.00094

inception_4e-1x1 480969 0.00321

inception_4e-3x3_reduce 313328 0.00209

inception_4e-3x3 195874 0.00131

inception_4e-5x5_reduce 90182 0.00060

inception_4e-5x5 63293 0.00042

inception_4e-pool 85193 0.00057

inception_4e-pool_proj 256940 0.00171

pool4-3x3_s2 82528 0.00055

inception_5a-1x1 397644 0.00265

inception_5a-3x3_reduce 257289 0.00172

inception_5a-3x3 102006 0.00068

inception_5a-5x5_reduce 70980 0.00047

inception_5a-5x5 33279 0.00022

inception_5a-pool 54257 0.00036

inception_5a-pool_proj 210584 0.00140

inception_5b-1x1 583897 0.00389

inception_5b-3x3_reduce 303907 0.00203

inception_5b-3x3 143676 0.00096

inception_5b-5x5_reduce 94133 0.00063

inception_5b-5x5 47694 0.00032

inception_5b-pool 54081 0.00036

inception_5b-pool_proj 211324 0.00141

pool5-7x7_s1 72201 0.00048

newFC 1894 0.00001

* The clock frequency of the DL processor is: 150MHz

speed=75×5 table

Latency(cycles) Latency(seconds) NumFrames Total Latency(cycles) Frame/s

_______________ ________________ _________ _____________________ ________

Network 1.576e+07 0.10506 "1" "15768622" "9.5126"

____conv1-7x7_s2 1.1403e+06 0.0076018 "" "" ""

____pool1-3x3_s2 2.2659e+05 0.0015106 "" "" ""

____pool1-norm1 3.1179e+05 0.0020786 "" "" ""

____conv2-3x3_reduce 2.7855e+05 0.001857 "" "" ""

____conv2-3x3 8.2687e+05 0.0055125 "" "" ""

____conv2-norm2 9.5179e+05 0.0063452 "" "" ""

____pool2-3x3_s2 2.0808e+05 0.0013872 "" "" ""

____inception_3a-1x1 1.9867e+05 0.0013245 "" "" ""

____inception_3a-3x3_reduce 2.8209e+05 0.0018806 "" "" ""

____inception_3a-3x3 1.9694e+05 0.0013129 "" "" ""

____inception_3a-5x5_reduce 74040 0.0004936 "" "" ""

____inception_3a-5x5 35333 0.00023555 "" "" ""

____inception_3a-pool 94565 0.00063043 "" "" ""

____inception_3a-pool_proj 1.1544e+05 0.00076961 "" "" ""

____inception_3b-1x1 6.5355e+05 0.004357 "" "" ""

⋮

The speed table contains the latency information for every layer, total network latency, and the overall network performance in frames per second (FPS). For more information, see Profile Inference Run.
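The seconds and frames-per-second figures in the profiler output can be reproduced from the cycle counts and the reported 150 MHz clock. The following sketch uses numbers copied from the output above; the variable names are illustrative.

```matlab
% Numbers copied from the profiler output in this example.
clockHz = 150e6;                 % reported DL processor clock frequency
lastFrameCycles = 15759503;      % LastFrameLatency(cycles) for Network
totalCycles = 15768622;          % Total Latency for one frame
conv1Cycles = 1140266;           % latency of conv1-7x7_s2

% Convert cycles to seconds and derive the throughput.
lastFrameSeconds = lastFrameCycles / clockHz;
framesPerSecond = clockHz / totalCycles;

% Share of the network latency spent in the first convolution layer.
conv1Share = conv1Cycles / lastFrameCycles;

fprintf('Latency: %.5f s, throughput: %.4f frames/s\n', ...
    lastFrameSeconds, framesPerSecond);
fprintf('conv1-7x7_s2 share of latency: %.1f%%\n', 100 * conv1Share);
```

The same conversion applies to any row of the table, which makes it straightforward to rank layers by their share of the total latency.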

See Also

dlhdl.Workflow | dlhdl.Target | compile | deploy | predict | dlquantizer | dlquantizationOptions | calibrate | validate