yolov4ObjectDetectorMonoCamera

Detect objects in monocular camera using YOLO v4 deep learning detector

Since R2022a

Description

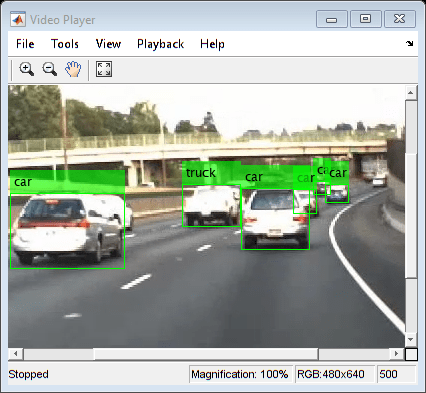

The yolov4ObjectDetectorMonoCamera object contains information about a you only look once version 4 (YOLO v4) object detector that is configured for use with a monocular camera sensor. To detect objects in an image captured by the camera, pass the detector to the detect object function.

When using the detect object function with a yolov4ObjectDetectorMonoCamera object, a CUDA®-enabled NVIDIA® GPU is highly recommended because the GPU significantly reduces computation time. Using the GPU requires Parallel Computing Toolbox™. For information about the supported compute capabilities, see GPU Computing Requirements (Parallel Computing Toolbox).

Creation

1. Create a yolov4ObjectDetector object by calling the trainYOLOv4ObjectDetector function with training data (requires Deep Learning Toolbox™).

    detector = trainYOLOv4ObjectDetector(trainingData,____);

2. Create a monoCamera object to model the monocular camera sensor.

    sensor = monoCamera(____);

3. Create a yolov4ObjectDetectorMonoCamera object by passing the detector and sensor as inputs to the configureDetectorMonoCamera function. The configured detector inherits property values from the original detector.

    configuredDetector = configureDetectorMonoCamera(detector,sensor,____);
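The three creation steps can be sketched end to end as follows. This is a minimal sketch under stated assumptions, not an official example: instead of training a detector with trainYOLOv4ObjectDetector, it loads the COCO-pretrained "csp-darknet53-coco" YOLO v4 network, which requires the Computer Vision Toolbox Model for YOLO v4 Object Detection support package. The camera intrinsics, mounting height, pitch, object width range, and the blank placeholder frame are illustrative values chosen only for this sketch.

```matlab
% Sketch: configure a YOLO v4 detector for a monocular camera and run it.
% Assumptions (illustrative, not from this page): the pretrained network
% name, all camera parameters, the object width range, and the blank frame.

% Step 1: obtain a yolov4ObjectDetector. A COCO-pretrained network is
% loaded here instead of training one with trainYOLOv4ObjectDetector.
detector = yolov4ObjectDetector("csp-darknet53-coco");

% Step 2: model the monocular camera sensor (assumed example values).
focalLength    = [800 800];   % [fx fy] in pixels
principalPoint = [320 240];   % [cx cy] in pixels
imageSize      = [480 640];   % [mrows ncols]
intrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize);
mountingHeight = 1.5;         % camera height above the ground, in meters
sensor = monoCamera(intrinsics,mountingHeight,"Pitch",1);  % pitch in degrees

% Step 3: configure the detector for the sensor. objectSize is the
% [minWidth maxWidth] range of object widths in world units (meters here).
objectSize = [1.5 2.5];
configuredDetector = configureDetectorMonoCamera(detector,sensor,objectSize);

% Detect objects in a frame whose size matches the sensor image size.
% A blank placeholder frame keeps the sketch runnable; replace it with a
% real image captured by the camera.
I = zeros(imageSize(1),imageSize(2),3,"uint8");
[bboxes,scores,labels] = detect(configuredDetector,I);
```

Choose the mounting height, pitch, and object width range to match your own vehicle setup and the objects you want to detect.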

Properties

Object Functions

detect | Detect objects using a YOLO v4 object detector configured for a monocular camera

Examples

Version History

Introduced in R2022a