Synchronize GPS, Camera, and Actor Track Data for Scenario Generation

This example shows how to synchronize multiple types of sensor data, such as GPS data, camera data, and actor track data, in preparation for using that data for scenario generation.

The Scenario Builder for Automated Driving Toolbox™ support package enables you to generate RoadRunner scenes or scenarios from multiple sources of recorded sensor data. For more information on how to generate a RoadRunner scene and scenario, see the Generate RoadRunner Scene Using Processed Camera Data and GPS Data example and the Generate RoadRunner Scenario from Recorded Sensor Data example, respectively. Data collected from multiple sensors is often recorded at varying times and with nonuniform sampling frequencies. To fuse such data for scenario generation, you must perform data preprocessing such as cropping and synchronization.

In this example, you:

Normalize the timestamps of the GPS data, camera data, and actor track data relative to a common reference time.

Crop the normalized sensor data objects to a common time interval.

Synchronize the normalized and cropped sensor data objects by resampling them to a common sampling frequency.

This example requires the Scenario Builder for Automated Driving Toolbox™ support package. Check whether the support package is installed and, if it is not, install it by using the Add-On Explorer. For more information on installing support packages, see Get and Manage Add-Ons.

checkIfScenarioBuilderIsInstalled

Load Sensor Data

Download a ZIP file containing a subset of sensor data from the PandaSet data set [1], and then unzip the file. The file contains 400 samples each of GPS data, actor track list data, and camera data.

dataFolder = tempdir;
dataFilename = "PandasetSeq90_94.zip";
url = "https://ssd.mathworks.com/supportfiles/driving/data/" + dataFilename;
filePath = fullfile(dataFolder,dataFilename);
if ~isfile(filePath)
    websave(filePath,url);
end
unzip(filePath,dataFolder)
dataset = fullfile(dataFolder,"PandasetSeq90_94");
data = load(fullfile(dataset,"sensorData.mat"))

data = struct with fields:

ActorTracks: [400×5 table]

Camera: [400×2 table]

CameraHeight: 1.6600

GPS: [400×4 table]

Intrinsics: [1×1 struct]

To demonstrate the selection of overlapping timestamps and synchronization, make the GPS data misaligned with the other sensor data. Use the helperAddOffsetAndSamplesToGPS helper function to add a fixed time offset of 10 seconds to the GPS timestamps and to resample the GPS data from 400 to 4000 samples, which increases its sampling rate. As a result, the GPS data only partially overlaps in time with the camera and actor track data and is not synchronized with them. Observe that the GPS data has 4000 samples after resampling.

timeOffset = 10;
newGPSSamples = 4000;
data.GPS = helperAddOffsetAndSamplesToGPS(data.GPS,timeOffset,newGPSSamples)

data = struct with fields:

ActorTracks: [400×5 table]

Camera: [400×2 table]

CameraHeight: 1.6600

GPS: [4000×4 table]

Intrinsics: [1×1 struct]
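The helperAddOffsetAndSamplesToGPS helper function is not listed in this example. To illustrate the kind of transformation it applies, here is a minimal sketch that assumes the helper shifts every timestamp by a constant offset and linearly interpolates latitude, longitude, and altitude onto a denser, uniformly spaced time grid. The synthetic signals t, lat, lon, and alt and the output table gpsSketch are hypothetical stand-ins, not the PandaSet values or the helper's actual code.

```matlab
% Hypothetical sketch: offset and upsample a GPS track (not the helper's actual code)
t   = 1.5576e9 + (0:399)'/10;          % synthetic POSIX timestamps, 10 Hz
lat = 37.374 + 1e-5*(0:399)';          % synthetic latitude, degrees
lon = -122.06 + 1e-5*(0:399)';         % synthetic longitude, degrees
alt = 42.858 - 1e-4*(0:399)';          % synthetic altitude, meters

timeOffset    = 10;                    % seconds added to every GPS timestamp
newGPSSamples = 4000;                  % target number of GPS samples

% Uniform time grid spanning the original recording, with more samples
tNew = linspace(t(1), t(end), newGPSSamples)';

% Linearly interpolate each coordinate onto the denser grid
latNew = interp1(t, lat, tNew);
lonNew = interp1(t, lon, tNew);
altNew = interp1(t, alt, tNew);

% Shift the timestamps so that the GPS data starts later than the other sensors
tNew = tNew + timeOffset;

gpsSketch = table(tNew, latNew, lonNew, altNew, ...
    'VariableNames', {'timeStamp','latitude','longitude','altitude'});
```

Linear interpolation adds samples without adding information, so the denser track follows the original path exactly between the original fixes.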

Load the resampled GPS data into the workspace. The loaded gpsData is a table with these columns:

timeStamp: Time, in seconds, at which the GPS data was collected.

latitude: Latitude coordinate value of the ego vehicle. Units are in degrees.

longitude: Longitude coordinate value of the ego vehicle. Units are in degrees.

altitude: Altitude coordinate value of the ego vehicle. Units are in meters.

gpsData = data.GPS

gpsData=4000×4 table

timeStamp latitude longitude altitude

__________ ________ _________ ________

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.858

1.5576e+09 37.374 -122.06 42.857

1.5576e+09 37.374 -122.06 42.857

1.5576e+09 37.374 -122.06 42.856

1.5576e+09 37.374 -122.06 42.856

⋮

Create a GPSData object by using the recordedSensorData function to store the GPS data.

gps = recordedSensorData("gps",gpsData.timeStamp,gpsData.latitude,gpsData.longitude,gpsData.altitude)

gps =

GPSData with properties:

Name: ''

NumSamples: 4000

Duration: 39.8997

SampleRate: 100.2513

SampleTime: 0.0100

Timestamps: [4000×1 double]

Latitude: [4000×1 double]

Longitude: [4000×1 double]

Altitude: [4000×1 double]

Attributes: []

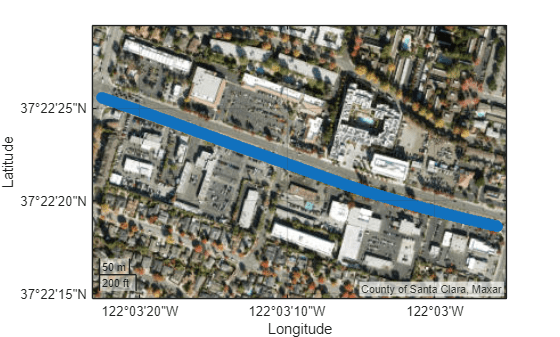

Plot the GPS data on a satellite map.

plot(gps,Basemap="satellite")

Load the camera data recorded from a forward-facing monocular camera mounted on the ego vehicle.

The camera data is a table with two columns:

timeStamp: Time, in seconds, at which the image data was captured.

fileName: Filenames of the images in the data set.

cameraData = data.Camera

cameraData=400×2 table

timeStamp fileName

__________ ____________

1.5576e+09 {'0001.jpg'}

1.5576e+09 {'0002.jpg'}

1.5576e+09 {'0003.jpg'}

1.5576e+09 {'0004.jpg'}

1.5576e+09 {'0005.jpg'}

1.5576e+09 {'0006.jpg'}

1.5576e+09 {'0007.jpg'}

1.5576e+09 {'0008.jpg'}

1.5576e+09 {'0009.jpg'}

1.5576e+09 {'0010.jpg'}

1.5576e+09 {'0011.jpg'}

1.5576e+09 {'0012.jpg'}

1.5576e+09 {'0013.jpg'}

1.5576e+09 {'0014.jpg'}

1.5576e+09 {'0015.jpg'}

1.5576e+09 {'0016.jpg'}

⋮

Create a CameraData object by using the recordedSensorData function to store the camera data. Specify the path to the folder that contains the images. In this example, the images are stored in the Camera folder of the data set directory.

imageFolder = fullfile(dataset,"Camera");
cam = recordedSensorData("camera",cameraData.timeStamp,imageFolder)

cam =

CameraData with properties:

Name: ''

NumSamples: 400

Duration: 39.8997

SampleRate: 10.0251

SampleTime: 0.1000

Timestamps: [400×1 double]

Frames: {400×1 cell}

SensorParameters: []

Attributes: []
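The GPSData and CameraData displays report the same Duration but different SampleRate and SampleTime values. The arithmetic below checks one plausible reading of these properties, inferred only from the displayed numbers rather than from the function documentation: Duration is the time from the first to the last sample, SampleRate is NumSamples divided by Duration, and SampleTime is the mean spacing between samples, Duration/(NumSamples - 1).

```matlab
% Check an inferred relationship between the displayed properties (not documented)
durationSec = 39.8997;                 % Duration shown for both objects, seconds

numGPS = 4000;                         % NumSamples of the GPSData object
numCam = 400;                          % NumSamples of the CameraData object

gpsRate = numGPS / durationSec;        % compare with SampleRate 100.2513
camRate = numCam / durationSec;        % compare with SampleRate 10.0251

gpsStep = durationSec / (numGPS - 1);  % compare with SampleTime 0.0100
camStep = durationSec / (numCam - 1);  % compare with SampleTime 0.1000

fprintf('GPS:    rate %.4f Hz, step %.4f s\n', gpsRate, gpsStep);
fprintf('Camera: rate %.4f Hz, step %.4f s\n', camRate, camStep);
```

A difference in the last displayed digit, such as 100.2514 versus 100.2513, is expected because the displayed Duration is itself rounded.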

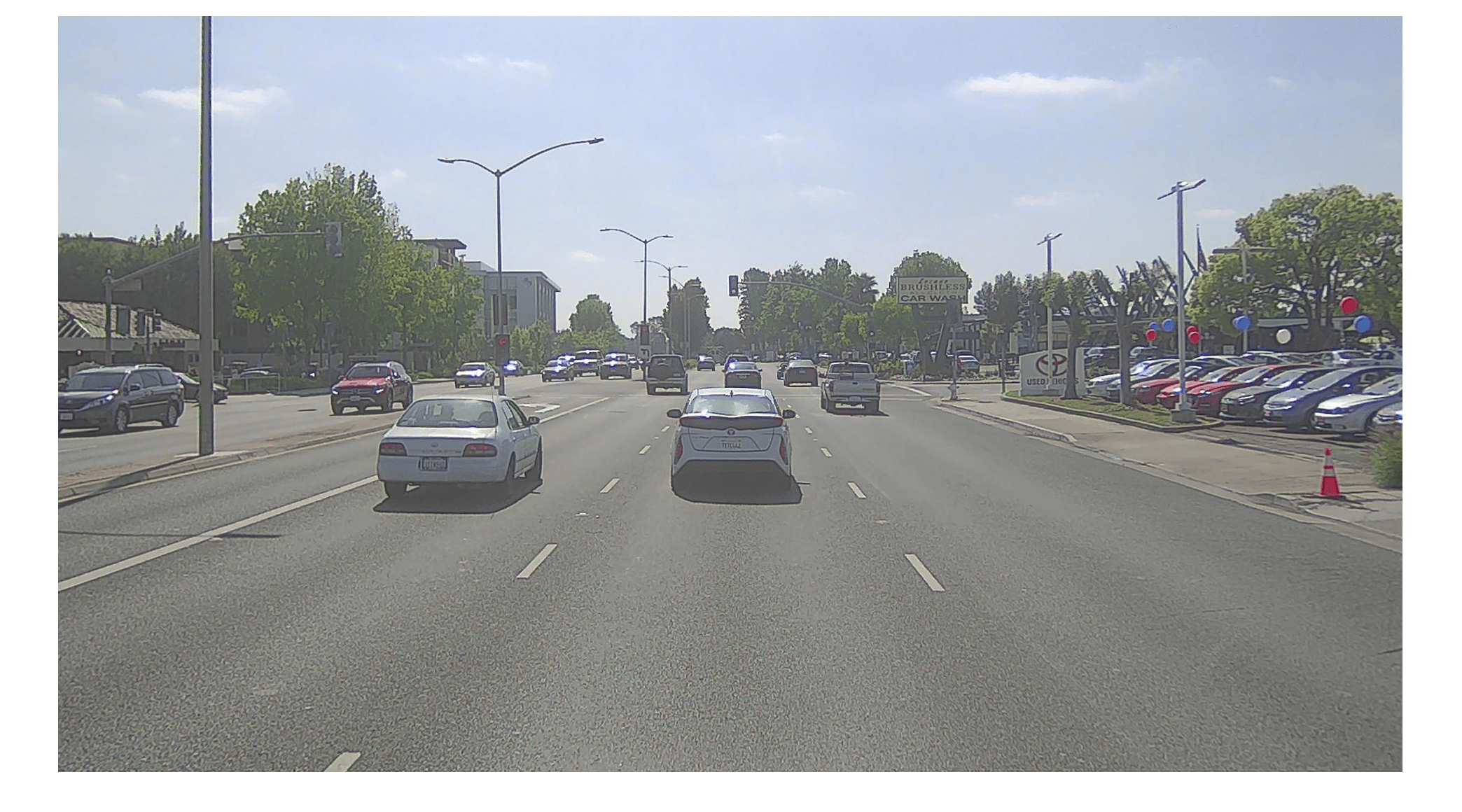

Visualize the camera sequence.

play(cam,WaitTime=1)

Load the recorded actor track data. The actor track data is a table with these columns:

timeStamp: Time, in seconds, at which the actor track data was recorded.

TrackIDs: Track IDs of the actors detected at each timestamp.

Positions: Positions of the actors at each timestamp, represented in the form [x y z] as described in Coordinate Systems in Automated Driving Toolbox. Units are in meters.

Orientation: Orientations of the actors at each timestamp, represented in the form [yaw pitch roll] as described in Coordinate Systems in Automated Driving Toolbox. Units are in degrees.

Dimension: Dimensions of the actors at each timestamp, represented in the form [length width height]. Units are in meters.
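Each row of the actor track table can hold a different number of actors, so the per-timestamp columns are cell arrays whose contents must agree: one track ID per actor, and one [x y z], [yaw pitch roll], and [length width height] row per actor. This minimal sketch builds a two-row table with the same column layout, assuming the layout shown in the actorListData display, and checks that consistency. All values are synthetic.

```matlab
% Synthetic two-row actor track table with the same column layout (illustrative values)
timeStamp   = [0; 0.1];                                      % seconds
TrackIDs    = {["2" "3" "4"]; ["3" "4"]};                    % string array of IDs per row
Positions   = {[10 2 0; 25 -3 0; 40 0 0]; [24 -3 0; 41 0 0]}; % [x y z], meters
Orientation = {zeros(3,3); zeros(2,3)};                      % [yaw pitch roll], degrees
Dimension   = {repmat([4.7 1.8 1.4],3,1); repmat([4.7 1.8 1.4],2,1)}; % [length width height], meters

tracks = table(timeStamp, TrackIDs, Positions, Orientation, Dimension);

% Each track ID must pair with exactly one row of Positions, Orientation, and Dimension
for k = 1:height(tracks)
    n = numel(tracks.TrackIDs{k});
    assert(size(tracks.Positions{k},1) == n);
    assert(size(tracks.Orientation{k},1) == n);
    assert(size(tracks.Dimension{k},1) == n);
end
```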

actorListData = data.ActorTracks

actorListData=400×5 table

timeStamp TrackIDs Positions Orientation Dimension

__________ __________________________________________ ____________ ____________ ____________

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["2" "3" "4" "5" "6" "7"]} {6×3 double} {6×3 double} {6×3 double}

1.5576e+09 {["3" "5" "6" "7" ]} {4×3 double} {4×3 double} {4×3 double}

1.5576e+09 {["3" "5" "6" "7" ]} {4×3 double} {4×3 double} {4×3 double}

1.5576e+09 {["3" "5" "6" "7" ]} {4×3 double} {4×3 double} {4×3 double}

1.5576e+09 {["3" "5" "6" "7" ]} {4×3 double} {4×3 double} {4×3 double}

1.5576e+09 {["3" "5" "6" "7" ]} {4×3 double} {4×3 double} {4×3 double}

1.5576e+09 {["3" "5" "6" "7" ]} {4×3 double} {4×3 double} {4×3 double}

⋮

Create an ActorTrackData object by using the recordedSensorData function to store the actor track data.

actorList = recordedSensorData("actorTrack",actorListData.timeStamp, ...
    actorListData.TrackIDs,actorListData.Positions, ...
    Dimension=actorListData.Dimension,Orientation=actorListData.Orientation)

actorList =

ActorTrackData with properties:

Name: ''

NumSamples: 400

Duration: 39.8997

SampleRate: 10.0251

SampleTime: 0.1000

Timestamps: [400×1 double]

TrackID: {400×1 cell}

Category: []

Position: {400×1 cell}

Dimension: {400×1 cell}

Orientation: {400×1 cell}

Velocity: []

Speed: []

Age: []

Attributes: []

UniqueTrackIDs: [20×1 string]

UniqueCategories: []

Visualize the actor track data.

play(actorList,XLim=[0 90],YLim=[-30 30])

Normalize Recorded Sensor Data

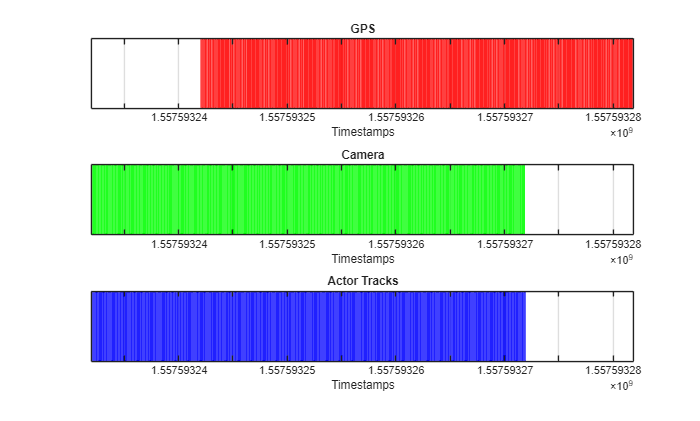

Visualize the timestamps of the GPS data, camera data, and actor track data by using the helperViewMultiSensorTimes helper function.

The plot indicates that:

The GPS data begins and ends later than the camera and actor track data, so some GPS samples have timestamps later than any camera or actor track sample.

Compared to the camera and actor track data, the GPS data has more samples.

All of the GPS, camera, and actor track data samples have timestamps in the POSIX® format.

helperViewMultiSensorTimes(gps.Timestamps,cam.Timestamps,actorList.Timestamps)
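POSIX timestamps count seconds since 00:00:00 UTC on January 1, 1970, which is why the raw values are about 1.5576e+09. This short sketch converts such a value to a datetime for inspection and shows that offsets between sensors are plain differences of POSIX seconds. The value t0 is the rounded number shown in the tables, and the 10-second GPS start offset mirrors the offset added earlier; both are used here only for illustration.

```matlab
% Interpret a POSIX timestamp (seconds since 1970-01-01 00:00:00 UTC) as a date
t0 = 1.5576e9;                                   % rounded value, as displayed in the tables
dt = datetime(t0, 'ConvertFrom', 'posixtime', 'TimeZone', 'UTC');
disp(dt)

% Offsets between sensors are plain differences of POSIX seconds
camStart = t0;                                   % illustrative camera start time
gpsStart = t0 + 10;                              % GPS shifted by the 10-second offset
startGap = gpsStart - camStart;                  % seconds
```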

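Normalize the camera timestamps by using the normalizeTimestamps object function, which uses the camera start time as the reference time and returns it as timeRef. Then normalize the GPS data and actor track data with respect to the same reference time, so that all timestamps are expressed in seconds relative to the camera start time. This makes the time differences between the sensors easier to see.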

timeRef = normalizeTimestamps(cam);
normalizeTimestamps(gps,timeRef);
normalizeTimestamps(actorList,timeRef);
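Normalization amounts to subtracting a common reference time from every timestamp. The following minimal sketch reproduces that idea on synthetic timestamps shaped like this example's data: 400 camera and actor track samples at 10 Hz, and 4000 GPS samples at 100 Hz starting 10 seconds later. It assumes that the reference is the first camera timestamp; the variable tRef is hypothetical and is not the timeRef value returned by normalizeTimestamps.

```matlab
% Minimal sketch of timestamp normalization against a shared reference (synthetic data)
camT   = 1.5576e9 + (0:399)'/10;            % camera: 400 samples at 10 Hz
actorT = camT;                              % actor tracks share the camera timestamps here
gpsT   = camT(1) + 10 + (0:3999)'/100;      % GPS: 4000 samples at 100 Hz, starting 10 s later

tRef = camT(1);                             % hypothetical reference: camera start time

camN   = camT   - tRef;                     % normalized timestamps, seconds
actorN = actorT - tRef;
gpsN   = gpsT   - tRef;

fprintf('Camera: %.2f to %.2f s\n', camN(1), camN(end));
fprintf('GPS:    %.2f to %.2f s\n', gpsN(1), gpsN(end));
```

Because every sensor is shifted by the same tRef, the relative offsets between sensors are preserved; only the large common POSIX value is removed.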

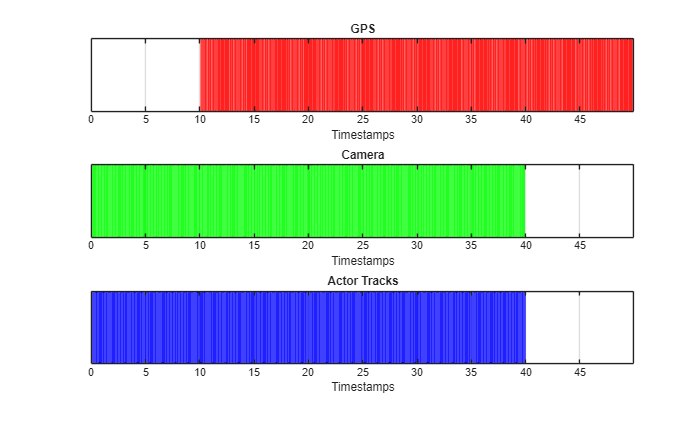

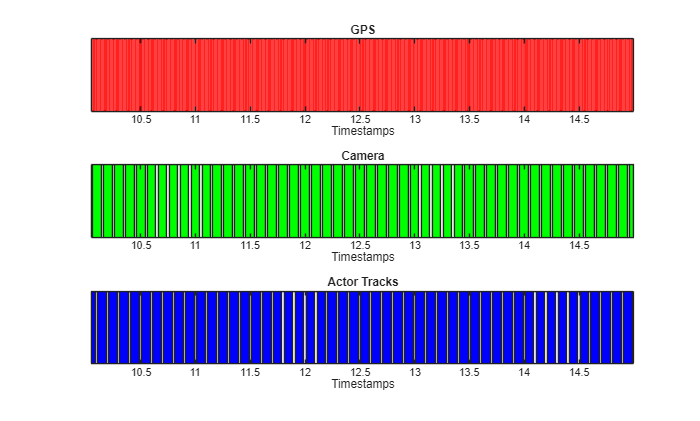

Visualize the normalized timestamps of the GPS data, camera data, and actor track data by using the helperViewMultiSensorTimes helper function. The figure indicates that the GPS data has a 10-second time offset compared to the camera and actor track data.

helperViewMultiSensorTimes(gps.Timestamps,cam.Timestamps,actorList.Timestamps)

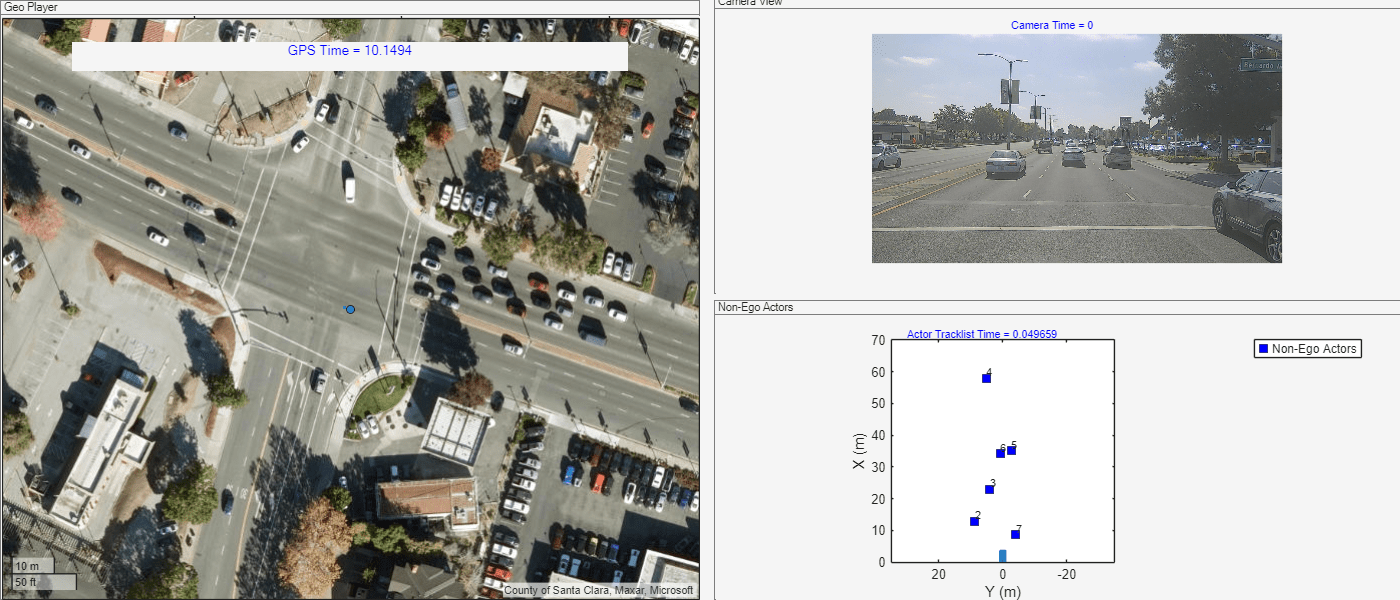

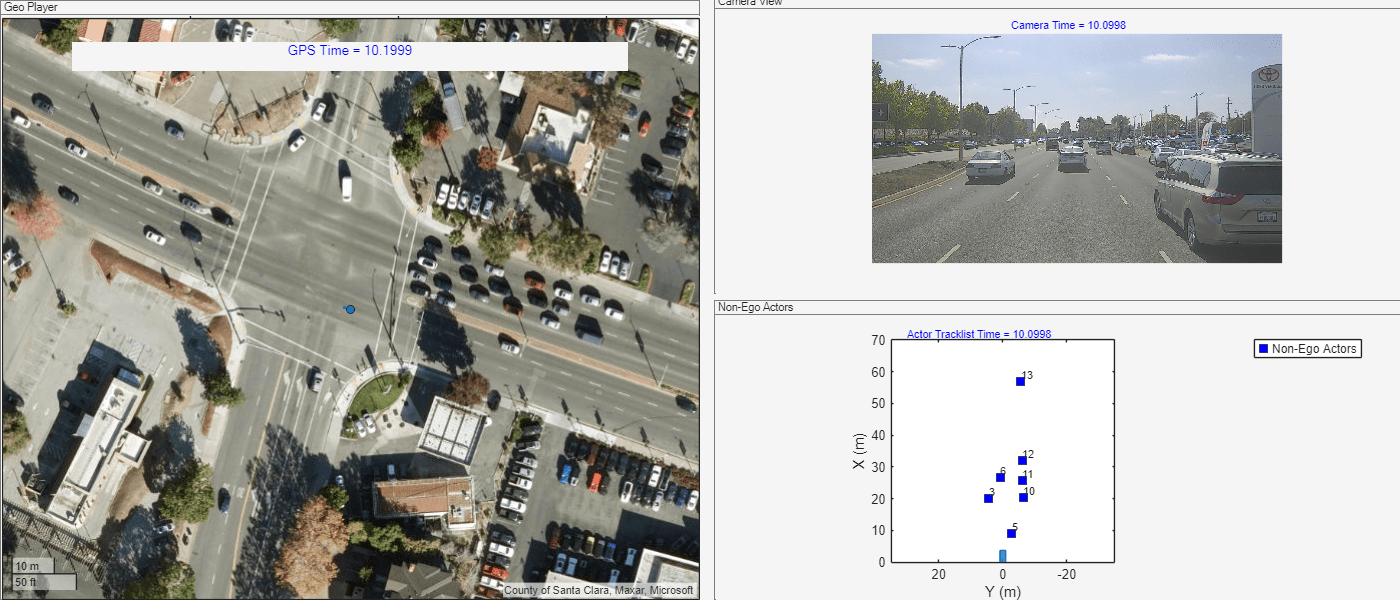

View Sensor Data in a Single Frame

Display all the sensor data in a single frame to examine the time offsets between the sensors. These offsets indicate how you must crop and synchronize the sensor data.

View the timestamp-normalized GPS data, camera data, and actor track data by using the helperViewMultiSensorData helper function. Observe that the camera data and actor track data match at each timestamp, while the GPS data has a time offset.

fh = figure(Visible="on",Position=[0 0 1400 600]);
% First plot: Geo Player
geoPlot = uipanel(fh,Position=[0 0 0.5 1],Title="Geo Player");
geoPlayer = geoplayer(gps.Latitude(1),gps.Longitude(1),19, ...
    Parent=geoPlot,HistoryDepth=Inf,Basemap="satellite");
gpsTimeLabel = uicontrol(Style="text",Parent=geoPlot, ...
    Units="normalized",Position=[0.1 0.9 0.8 0.05], ...
    FontSize=10,HorizontalAlignment="center", ...
    String="GPS Time = ",ForegroundColor=[0 0 1]);
gpsPlotData = {gps,geoPlayer,gpsTimeLabel};
% Second plot: Camera View
camPlot = axes(uipanel(fh,Position=[0.51 0.51 0.5 0.5],Title="Camera View"));
camPlotData = {cam,camPlot};
% Third plot: Non-Ego Actors
hPlot = axes(uipanel(fh,Position=[0.51 0 0.5 0.5],Title="Non-Ego Actors"));
bep = birdsEyePlot(XLim=[0 70],YLim=[-35 35],Parent=hPlot);
actorListPlotData = {actorList,bep};
skipFrames = 10;
helperViewMultiSensorData(gpsPlotData,camPlotData,actorListPlotData,skipFrames)
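The helperViewMultiSensorData helper function is not listed in this example, so how it uses skipFrames is an assumption here. A minimal sketch, assuming the helper draws every skipFrames-th camera frame, shows which frames are drawn and how far each one is, in time, from the nearest GPS sample before cropping and synchronization. The timestamps are synthetic normalized values with the same 10-second GPS offset.

```matlab
% Hypothetical playback indexing, assuming the helper steps through camera frames
camN = (0:399)'/10;                        % normalized camera timestamps, seconds
gpsN = 10 + (0:3999)'/100;                 % normalized GPS timestamps, seconds (10 s offset)
skipFrames = 10;

frames = 1:skipFrames:numel(camN);                         % 1, 11, 21, ..., 391
gaps = arrayfun(@(tc) min(abs(gpsN - tc)), camN(frames));  % seconds to nearest GPS sample

fprintf('%d frames drawn; first-frame gap to GPS: %.1f s\n', numel(frames), gaps(1));
```

Early camera frames have no GPS sample within seconds of them, which is the mismatch that cropping and synchronization remove.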

Crop Normalized Sensor Data

To generate scenes and scenarios using Scenario Builder for Automated Driving Toolbox™ support package workflows, all sensor data must share a common range of timestamps.

Crop the GPS data, camera data, and actor track data into a common range of timestamps by using the crop object function.

Visualize the timestamps of the cropped GPS data, camera data, and actor track data by using the helperViewMultiSensorTimes helper function. Observe that the timestamps of all the cropped sensor data lie in the range of 10 to 15 seconds. However, the GPS data still has a higher sampling rate than the camera and actor track data.

cropRange = [10 15];
crop(cam,cropRange(1),cropRange(2))
crop(gps,cropRange(1),cropRange(2))
crop(actorList,cropRange(1),cropRange(2))
helperViewMultiSensorTimes(gps.Timestamps,cam.Timestamps,actorList.Timestamps)
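Cropping keeps only the samples whose timestamps fall inside the requested interval, and that interval must lie within the range where all sensors have data. This minimal sketch applies logical masks to synthetic normalized timestamps shaped like this example's data. It assumes inclusive bounds on both ends, which might differ from the boundary handling of the crop object function.

```matlab
% Minimal cropping sketch on normalized timestamps (synthetic data, inclusive bounds assumed)
camN   = (0:399)'/10;                      % camera, 10 Hz, 0 to 39.9 s
actorN = camN;                             % actor tracks, same timestamps
gpsN   = 10 + (0:3999)'/100;               % GPS, 100 Hz, 10 to 49.99 s

% Common interval in which all sensors have data
overlap = [max([camN(1) actorN(1) gpsN(1)]), min([camN(end) actorN(end) gpsN(end)])];

cropRange = [10 15];                       % must lie inside the overlap
assert(cropRange(1) >= overlap(1) && cropRange(2) <= overlap(2))

keepCam   = camN   >= cropRange(1) & camN   <= cropRange(2);
keepActor = actorN >= cropRange(1) & actorN <= cropRange(2);
keepGPS   = gpsN   >= cropRange(1) & gpsN   <= cropRange(2);

fprintf('Overlap: %.2f to %.2f s\n', overlap);
fprintf('Samples kept: camera %d, actor %d, GPS %d\n', nnz(keepCam), nnz(keepActor), nnz(keepGPS));
```

With these synthetic timestamps, the sketch keeps 51 camera samples and 501 GPS samples, so cropping alone aligns the time range but not the sampling rate.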


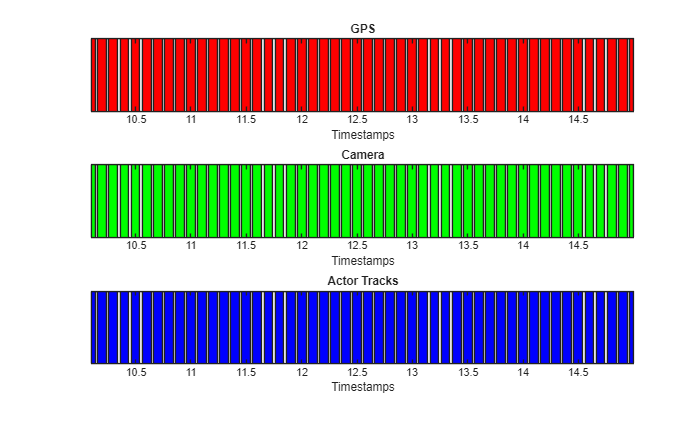

Synchronize Cropped Sensor Data

To generate scenes and scenarios using Scenario Builder for Automated Driving Toolbox™ support package workflows, all sensor data must be time synchronized.

Synchronize the GPS data and actor track data with reference to the camera data by using the synchronize object function.

Visualize the timestamps of the synchronized GPS data, camera data, and actor track data by using the helperViewMultiSensorTimes helper function. Observe that the GPS data, camera data, and actor track data all have the same number of samples.

synchronize(gps,cam)
synchronize(actorList,cam)
helperViewMultiSensorTimes(gps.Timestamps,cam.Timestamps,actorList.Timestamps)
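The synchronize object function resamples the GPS data and actor track data at the camera timestamps. The exact resampling method is not described in this example. The sketch below assumes linear interpolation for the continuous GPS coordinates and nearest-sample selection for the actor track lists, whose track IDs cannot be interpolated. All timestamps and values are synthetic, and the variable names are hypothetical.

```matlab
% Minimal synchronization sketch on cropped, normalized data (synthetic values)
camN   = (100:150)'/10;                    % 51 camera timestamps, 10 to 15 s
gpsN   = (1000:1500)'/100;                 % 501 GPS timestamps, 10 to 15 s
gpsLat = 37.374 + 1e-6*(gpsN - 10);        % synthetic latitude, varies linearly in time

% GPS (assumed method): linearly interpolate coordinates at the camera timestamps
latSync = interp1(gpsN, gpsLat, camN, 'linear');

% Actor tracks (assumed method): track IDs cannot be interpolated, so take the
% actor sample nearest in time to each camera timestamp
actorN = camN + 0.02;                      % synthetic: actor tracks lag the camera by 20 ms
idx = interp1(actorN, (1:numel(actorN))', camN, 'nearest', 'extrap');

fprintf('Camera %d, GPS %d -> %d, actor tracks %d -> %d samples\n', ...
    numel(camN), numel(gpsN), numel(latSync), numel(actorN), numel(idx));
```

Nearest-sample selection keeps each track list intact at the cost of up to half a sample period of timing error; interpolating positions per track ID is an alternative, but it requires matching IDs across samples.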

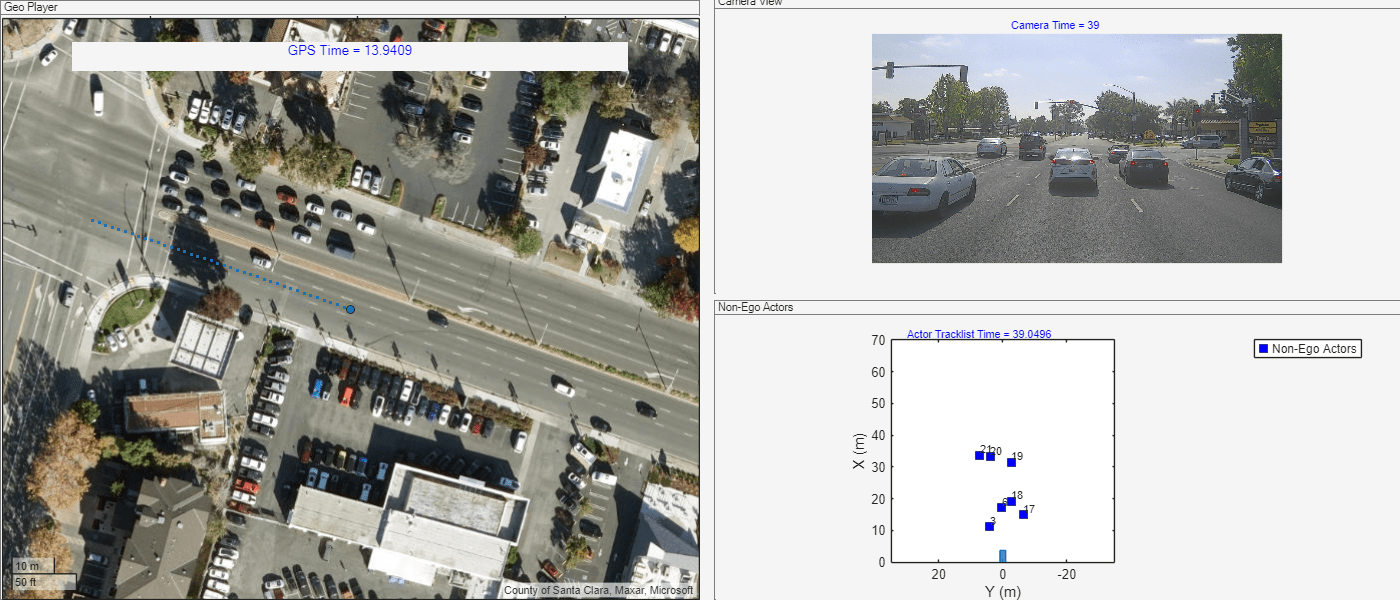

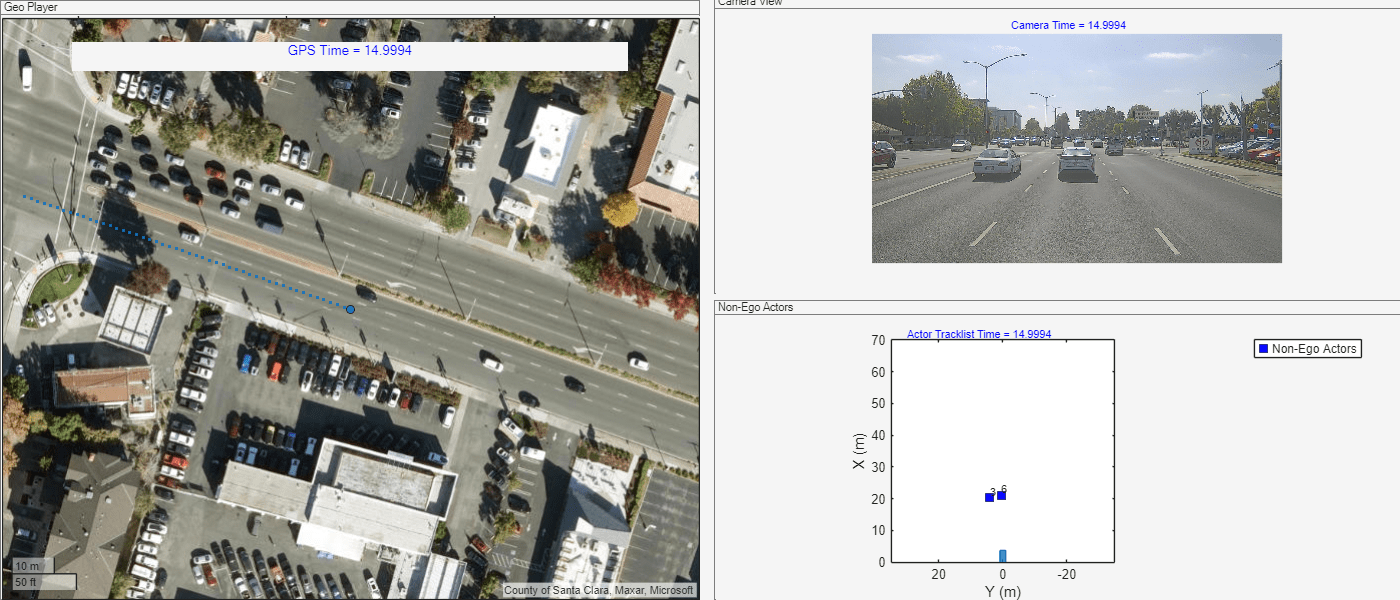

Visualize the timestamp-normalized, cropped, and synchronized GPS data, camera data, and actor track data by using the helperViewMultiSensorData helper function. Now, the GPS data, camera data, and actor track data match at each timestamp.

fh = figure(Visible="on",Position=[0 0 1400 600]);
% First plot: Geo Player
geoPlot = uipanel(fh,Position=[0 0 0.5 1],Title="Geo Player");
geoPlayer = geoplayer(gps.Latitude(1),gps.Longitude(1),19, ...
    Parent=geoPlot,HistoryDepth=Inf,Basemap="satellite");
gpsTimeLabel = uicontrol(Style="text",Parent=geoPlot, ...
    Units="normalized",Position=[0.1 0.9 0.8 0.05], ...
    FontSize=10,HorizontalAlignment="center", ...
    String="GPS Time = ",ForegroundColor=[0 0 1]);
gpsPlotData = {gps,geoPlayer,gpsTimeLabel};
% Second plot: Camera View
camPlot = axes(uipanel(fh,Position=[0.51 0.51 0.5 0.5],Title="Camera View"));
camPlotData = {cam,camPlot};
% Third plot: Non-Ego Actors
hPlot = axes(uipanel(fh,Position=[0.51 0 0.5 0.5],Title="Non-Ego Actors"));
bep = birdsEyePlot(XLim=[0 70],YLim=[-35 35],Parent=hPlot);
actorListPlotData = {actorList,bep};
skipFrames = 1;
helperViewMultiSensorData(gpsPlotData,camPlotData,actorListPlotData,skipFrames)

Further Exploration

In this example, you synchronized multiple types of sensor data in preparation for scenario generation. You can apply the same timestamp normalization, cropping, and synchronization steps to other sensor data, such as lidar data and trajectory data. Note that, to generate scenes and scenarios using Scenario Builder for Automated Driving Toolbox™ support package workflows, all sensor data must share a common range of timestamps and must be time synchronized.

References

[1] Hesai and Scale. PandaSet. Accessed September 18, 2025. https://pandaset.org/. The PandaSet data set is provided under the CC-BY-4.0 license.

See Also

Functions

crop | synchronize | normalizeTimestamps | GPSData | CameraData | ActorTrackData | recordedSensorData