Test Planning and Strategies

You can use Simulink® Test™ to functionally test models and code. Before you create a test, consider the topics in the following sections.

Identify the Test Goals

Before you author your test, understand your goals. You might have one or more of these goals:

Simulation Testing

If your test only needs to confirm that a model simulates without errors, or if you are performing regression testing, you can run a simulation test. Simulation tests are useful if your model is still in development, or if you have an existing test model that contains inputs and assessments and logs relevant data. For an example, see Test a Simulation for Run-Time Errors.

Requirements Verification

If you have Requirements Toolbox™ installed, you can assess whether a model behaves according to requirements by linking one or more test cases to requirements authored in Requirements Toolbox. You can verify whether the model meets requirements by:

Authoring verify statements or custom criteria scripts in the model or test harness.

Including Model Verification Blocks in the model or test harness.

Capturing simulation output in the test case, and comparing simulation output to baseline data.

For an example that uses verify statements, see Test Downshift Points of a Transmission Controller.

Data Comparison

You can compare simulation results to baseline data or to another simulation. You can also compare results between different MATLAB® releases.

In a baseline test, you first establish the baseline data, which are the expected outputs. You can define baseline data manually, import baseline data from an Excel® or MAT file, or capture baseline data from a simulation. For more information, see Baseline Testing. To compare the test results of multiple models, you can compare the results of each model test to the same baseline data.

In an equivalence test, you compare two simulations to see whether they are equivalent. For example, you can compare results from two solvers, or compare results from normal mode simulation to generated code in software-in-the-loop (SIL), processor-in-the-loop (PIL), or real-time hardware-in-the-loop (HIL) mode. You can explore the impact of different parameter values or calibration data sets by running equivalence tests. For an equivalence testing example, see Back-to-Back Equivalence Testing. You cannot compare more than two models in a single equivalence test case.

In a multiple release test, you run tests in more than one installed MATLAB version. Use multiple release testing to verify that tests pass and produce the same results in different versions.

SIL and PIL Testing

You can verify the output of generated code by running back-to-back simulations in normal and SIL or PIL modes. Running back-to-back tests in normal and SIL or PIL mode is a form of equivalence testing. The same back-to-back test can run multiple test scenarios by iterating over different test vectors defined in a MAT or Excel file. You can apply tolerances to your results to allow for technically acceptable differences in value and timing between the model and generated code. Tolerances can also apply to code running on hardware in real time. You cannot use SIL or PIL mode in Subsystem models.
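As a conceptual illustration of how a value tolerance lets a back-to-back comparison pass despite small numerical differences, the following Python sketch compares two sampled signals point by point. It is not Simulink Test code. The function name `within_tolerance`, the sample data, and the pass rule (a difference passes when it is no larger than the greater of the absolute tolerance and the relative tolerance scaled by the reference value) are assumptions made for illustration only.

```python
def within_tolerance(reference, actual, abs_tol=0.0, rel_tol=0.0):
    """Return True if every sample of `actual` is within tolerance of `reference`.

    Assumed rule (illustrative only): a sample passes when
    |actual - reference| <= max(abs_tol, rel_tol * |reference|).
    """
    if len(reference) != len(actual):
        raise ValueError("signals must have the same number of samples")
    for ref, act in zip(reference, actual):
        allowed = max(abs_tol, rel_tol * abs(ref))
        if abs(act - ref) > allowed:
            return False
    return True


# Hypothetical outputs from a normal mode run and a SIL run of the same model.
normal_mode = [0.0, 1.0, 2.0, 4.0]
sil_mode = [0.0, 1.001, 1.998, 4.003]

# With no tolerance, any numerical difference fails the comparison.
print(within_tolerance(normal_mode, sil_mode))
# With a small absolute tolerance, the same data can pass.
print(within_tolerance(normal_mode, sil_mode, abs_tol=0.005))
```

In this sketch, the first comparison fails and the second passes, which mirrors the reason for setting tolerances in a back-to-back test: small differences between model and generated code that are technically acceptable should not cause the test to fail.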

Real-Time Testing

With Simulink Real-Time™, you can include the effects of physical plants, signals, and embedded hardware by executing tests in HIL mode on a real-time target computer. By running a baseline test in real time, you can compare results against known good data. You can also run a back-to-back test between a model, SIL, or PIL simulation and a real-time simulation.

Coverage

With Simulink Coverage™, you can collect coverage data to help quantify the extent to which your model or code is tested. When you set up coverage collection for your test file, the test results include coverage for the system under test and, optionally, referenced models. You can specify the coverage metrics to return. Coverage is supported for Model Reference blocks, atomic Subsystem blocks, and top-level models if they are configured for SIL or PIL mode. Coverage is not supported for SIL or PIL S-Function blocks.

If your results show incomplete coverage, you can increase coverage by:

Adding test cases manually to the test file.

Generating test cases with Simulink Design Verifier™ to increase coverage.

In either case, you can link the new test cases to requirements, which is required for certain certifications.

Test a Whole Model or Specific Components

You can test a whole model or a model component. One way to perform testing is to first unit test a component and then increase the number of components being tested to perform integration and system-level testing. By using this testing scheme, you can more easily identify and debug test case and model design issues. If you want to obtain aggregated coverage, test your whole model.

Use a Test Harness

A test harness contains a copy of the component being tested or, for testing a whole model, a reference to the model. Harnesses also contain the inputs and outputs used for testing. Using a test harness isolates the model or component being tested from the main model, which keeps the main model clean and uncluttered.

You can save the harness internally with your model file, or in an external file separate from the model. Saving the harness internally simplifies sharing the model and tests because there are fewer artifacts, and allows you to more easily debug testing issues. Saving the harness externally lets you reuse and share just the harness, and clearly separate your design from your testing artifacts. The test harness works the same whether it is internal or external to the model. For more information about test harnesses, see Test Harness and Model Relationship and Create a Test Harness.

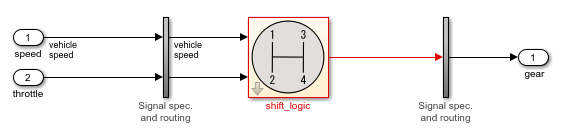

The figure shows an example of a test harness. The component under test is the shift_logic block, which is copied from the main model during harness creation. The copy of the shift_logic block is linked to the main model. The inputs are Inport blocks and the output is an Outport block. The vertical subsystems contain signal specification blocks and routing that connects the component interface to the inputs and outputs.

Determine the Inputs and Outputs

Consider which input signals and signal tolerances to use to test your model and where to obtain them. You can add a Signal Editor block to your test harness to define the input signals. Alternatively, you can define and use inputs from a Microsoft® Excel or MAT file, or a Test Sequence block. If you have Simulink Design Verifier installed, you can generate test inputs automatically.

For the output from your test, consider whether you want the test details and test results data saved to a report for archiving or distribution. You can also use outputs as inputs to Test Assessment blocks to assess and verify the test results. For baseline tests, use outputs to compare the actual results to the expected results.

Optimize Test Execution Time

You can optimize testing time by using iterations, running tests in parallel, and running a subset of test cases.

When you design your test, decide whether you want multiple tests or iterations of the same test. An iteration is a variation of a test case. Use iterations for different sets of input data, parameter sets, configuration sets, test scenarios, baseline data, and simulation modes. One advantage of using iterations is that you can run your tests using fast restart, which avoids recompiling the model for each iteration and thus reduces simulation time.

For example, if you have multiple sets of input data, you can set up one test case iteration for each external input file. To override parameters, you can also use scripted parameter sweeps, which enable you to iterate through many values. Use separate test cases if you need independent configuration control or if each test relates to a different requirement. For more information on iterations, see Test Iterations.
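The idea behind a scripted parameter sweep can be sketched in Python as expanding a set of candidate values into one set of overrides per iteration. This is a conceptual sketch, not Simulink Test code. The function name `build_iterations`, the parameter names `gain` and `delay`, and the choice to sweep every combination of values are assumptions made for illustration.

```python
from itertools import product


def build_iterations(parameter_values):
    """Expand {name: [values]} into one dict of overrides per iteration."""
    names = list(parameter_values)
    combos = product(*(parameter_values[name] for name in names))
    return [dict(zip(names, combo)) for combo in combos]


# Hypothetical parameters and the values to sweep for each one.
sweep = {"gain": [0.5, 1.0, 2.0], "delay": [0.01, 0.02]}
iterations = build_iterations(sweep)

# Each entry represents the parameter overrides for one iteration.
for index, overrides in enumerate(iterations, start=1):
    print(f"Iteration {index}: {overrides}")
```

Here, three gain values and two delay values produce six iterations of a single test case. Because the iterations share one test case, they are candidates for the fast restart benefit described above, whereas six separate test cases would each be configured independently.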

If you have Parallel Computing Toolbox™, you can run tests in parallel on your local machine or cluster. If you have MATLAB Parallel Server™, you can run tests in parallel on a remote cluster. Testing in parallel is useful when you have a large number of test cases or test cases that take a long time to run. See Run Tests Using Parallel Execution.

Another way to optimize testing time is to run a subset of test cases by tagging the tests to run.
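Selecting a subset by tag amounts to filtering test cases on their labels. The following Python sketch illustrates the concept only and is not Simulink Test code. The function name `select_by_tags`, the test case names, the tag names, and the rule that a test case is selected when it carries at least one of the requested tags are all assumptions.

```python
def select_by_tags(test_cases, wanted_tags):
    """Return names of test cases that carry at least one of the wanted tags."""
    wanted = set(wanted_tags)
    return [name for name, tags in test_cases.items() if wanted & set(tags)]


# Hypothetical test cases and their tags.
test_cases = {
    "shift_up_baseline": ["regression", "fast"],
    "shift_down_sil": ["sil", "slow"],
    "startup_smoke": ["fast"],
}

# Run only the quick tests, for example on every commit.
print(select_by_tags(test_cases, ["fast"]))
```

A tagging scheme like this one lets you run quick tests frequently and reserve long-running tests, such as those tagged `slow` in the sketch, for less frequent runs.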

For more information on improving and optimizing model simulation, see the Optimize Performance section under Simulation in the Simulink documentation.

Use the Programmatic or GUI Interface

To create and run tests, Simulink Test provides the Test Manager, which is an interactive tool, and API commands and functions that you can use in test scripts and at the command line. With the Test Manager, you can manage numerous tests and capture and manage results systematically. If you need to define new test assessments, use the Test Manager because this functionality is not available in the API. If you are working in a continuous integration environment, use the API. Otherwise, you can use whichever option you prefer.