Explainability in the Age of Regulation

From the series: Using MATLAB in Finance 2020

Big data and AI represent an opportunity to improve the bottom line of organizations. By building advanced analytics and machine learning models on top of large repositories of data, business decisions can be vastly improved. Some tasks that stand to gain the most from such improvements include predicting fraud, determining trading opportunities, improving the customer experience/journey, and even forecasting which employees are at risk for switching companies. However, implementation at scale and with compliance in mind is daunting to most financial institutions at all levels of AUM.

In this session, we will provide insight on how to build AI models quickly, keeping transparency, scalability, and model risk management at the heart of the discussion.

Published: 17 Nov 2020

Hi, everyone, and welcome to this MathWorks event on explainability in the age of regulation. I'd like to introduce Marshall Alphonso, a senior application engineer who has a wealth of financial experience, having worked in the finance industry as well as with MathWorks. Marshall, take it away.

Thanks, Bob. So what we're going to talk about today is artificial intelligence in the age of model explainability. That's a very, very fascinating topic. Just to save some bandwidth, I'm going to turn off my video for the rest of the presentation.

And during this presentation we're really going to get into the concept of model explainability. That's a very, very difficult concept right now. In the years I've been working with the Tier 1 banks and a variety of financial institutions, I've seen that people interpret the term model explainability in different ways.

The context for this presentation is twofold. One is how do you communicate with your executives about what's inside the black box, as opposed to using financial data science jargon. That would be one big theme of this presentation. And the other is how do you think about it within the context of governance, SR 11-7, BCBS 239, and how do you get it through production?

The basic agenda is going to go through what is artificial intelligence. I'm going to look at the landscape of artificial intelligence from the 1950s all the way through today. We're then going to touch on how we explain AI models in the traditional sense, with standard machine learning explainability, whether it's model agnostic or model specific, and why it is important. A lot of us, when we start doing work on deep learning or machine learning explainability, forget the why: why it's important to articulate artificial intelligence and what's inside the black box.

I'm going to touch on a couple of key metrics. The one I particularly like and have used quite heavily is Shapley. It's had a lot of success, and I think a lot of different groups are looking at Shapley as a way to explain things. It's a little more expensive than the traditional LIME, but that's an area we will touch on as we go through the presentation.

To deepen our explainability concepts, one area of work that we've been doing at MathWorks with our Tier 1 bank partners is building custom metrics: leveraging things like automatic differentiation, really getting into gradient changes within these very complex deep learning networks, adding a preamble to it, adding a postamble to it, and being able to explain things in a traditional sense, and then coupling that with Shapley.

And then finally we'll touch on, quickly at the end, explainability in practice. Typically what happens when you start thinking about production is you think about governance teams. SR 11-7 is one of the popular governance standards out there, and we are trying to figure out how to not just build a model but build a model with governance in mind, so we can communicate with our governance professionals about what's going on inside the black boxes and prove to them that we really understand that this model is not just going to work in sample but out of sample as well.

So when we start off with AI, machine learning, and deep learning, there are many areas of finance, or even just engineering and science in general, that benefit from AI, machine learning, and deep learning. And as you can see here, this really started in the 1950s where-- I'm going to turn on my ZoomIt here. So in the 1950s you can see that people started talking about reasoning, perception, knowledge representation, the philosophical ideas behind how we could potentially apply AI.

And you can think of AI as a broad bucket term that's used for any kind of algorithm out there. The way I like to think about it is I think of machine learning and NLP, which came out in the 1970s and even earlier in some cases, as a subset of practical algorithms that do things that customers or clients need.

For example, fraud detection, text to speech, sentiment analysis, document classification, trading, et cetera. Within that there's a further subset called deep learning, which leverages specialized hardware such as GPUs, and you're going to see a little glimpse of that today. That's used in places like self-driving cars, et cetera.

There's an extra area that's really coming to the forefront, especially in finance, and that's reinforcement learning. So I like to bucket most machine learning and AI algorithms into either unsupervised or supervised, and I'm going to throw in this extra category right here, which gained a lot of popularity with AlphaGo. So if anyone's interested in that area, just reach out and I'm sure we can give you some interesting caveats or even show you some demos around that space that we're working on.

So let's start with the first question here: how do we explain AI models and why is it important? Before getting into it I want to set the stage. The way I think about explainability is the same way that people thought about explaining their quant models in the 1970s, when Black-Scholes came out, and when you had a variety of mean-variance optimizers and other quantitative models coming out, copulas, things like that, that people would leverage to explain the correlation in our markets, or to capture the dynamics of state transitions due to regulatory impacts.

So when we were trying to explain those, we translated them into explainability metrics like Sharpe ratios and a variety of other key metrics. But most people didn't get it at that time, so we had to explain it in a language an executive could understand, or in a way that the governance teams that approve our trades could understand. In a similar vein, with explainability for AI we're trying to find the right metrics and the right language for communicating with our governance teams.

So let me start with a very fundamental question here before diving into it, what is the modeling efficiency of data science teams? And I think this is going to set the context for the entire presentation. So according to one of our Tier 1 banks, 95% of the models coming out of the data science team do not end up in production. So I'm going to let that sink in for a second.

So based on work I've been doing with a Tier 1 bank, it's a real challenge for a lot of data science teams. They pump out a lot of AI models, and the question then comes down to how do we get those into production? How do we get those through the governance teams? How do we actually use that to have a business impact? And a lot of data science teams don't worry about the business impact it's going to have. That's where MathWorks has spent a lot of time, trying to figure out how do we empower our data science teams, and how do we empower executives to leverage artificial intelligence with business impact in mind.

So the efficiency, what we found, is so low primarily because they're not able to explain the data. There are a lot of challenges with in sample versus out of sample. We've seen a lot of challenges around compliance, the governance, the second line of defense at the banks. They're not able to approve the black box model.

A great example is in the credit risk space. Most of us are comfortable with approving logistic regression as the baseline model for credit risk, but as soon as we start talking about support vector machines or deep learning, things fall apart. It's very, very hard to communicate that. So at MathWorks one of the things we're working on is trying to figure out how do we tie the mathematics of the traditional logistic regression all the way through the chain, to empower our credit teams to communicate with our compliance group.

Explaining models to executives and governance groups is a big challenge. When you talk about artificial intelligence models, they appear like black boxes. But what we're going to see, hopefully, through this presentation is there's a lot we can do to unwrap that black box, look inside, and basically break it down into subcomponents and say, here's why the model is doing what it's doing. Or, here, 10% of my explainability is attributed to this subcomponent of my black box, which means the remaining 80%, 90%, et cetera can be attributed to standard factor models.

Another big challenge around efficiency is maintainability. When you build an AI model, you'll see I'm going to use a specialized piece of hardware. But it turns out, the way MathWorks is building our deep learning infrastructure, the developers at MathWorks, because we're working from home, are actually testing out the algorithms on CPUs, on our standard hardware. So from a scalability and maintenance standpoint, it's becoming much, much easier to scale out your models.

And then finally, the last piece on efficiency challenges is not just maintaining these AI models from a governance standpoint and a monitoring standpoint, but how do you scale up to the cloud? We also have a lot of functionality that makes it easier and more seamless to push your models out into production environments that are not cloud based.

All right, the focus of this is really finding those metrics around explainability and maybe starting the conversation around developing the language of explainability so that you can communicate with your executives. So starting at the top, let's look at some of the key metrics right here. There are two big buckets of explanation methods that are popular, and we're going to see those in MATLAB in a second. There are model-specific ones, such as explainable neural networks, a variety of model-specific ones around deep learning, model-specific ones around support vector machines, et cetera. And then some that are agnostic to the model definition are things like predictor importance, partial dependence, LIME, Shapley, et cetera.

And so at a high level these are the key explainability metrics, but I'm actually going to take it a step lower in my first example and say you don't even need some of the more sophisticated explainability. We can start with the basic confusion matrix. We can start with a ROC curve. We can start with ways of just looking at our data and using visual tools to drive insight into why our model is doing what it's doing.

So let's switch over, and I'm going to start with a very simple example to illustrate this concept of explainability so that we're all on the same page. What you're seeing here is I've just loaded some data into MATLAB. You can see here I have working capital to total assets, I have retained earnings to total assets, a column of that. I have some other key metrics around these different bonds. Every single row represents a different bond, and I have a corresponding rating of that bond. So for example, these metrics right here correspond to a BB rating on the bond.

The first question I might try to answer from a retail banking perspective is can I create my own classification? Sometimes these bonds are not provided with classifications or some of us don't trust rating agencies or are required by the mandate of our firm to actually do our own internal validation of these, coming up with our own rating itself. So with that in mind, we would try to build a model that maps these variables, these numbers to try and predict the rating.

It turns out The MathWorks has been in the business of building explainability for a very long time. That's sort of our bread and butter. We want to try and make our mathematics as transparent as possible. And so with that in mind, we've built a variety of point and click tools but still giving you the flexibility to generate code from there.

So there's a variety of popular tools, Classification Learner, you'll see the Deep Network Designer, for providing some sort of insight around visually designing your networks as opposed to a lot of the code that ends up happening at a lot of these data science teams. And you're going to see that you have the ability then to be able to generate code and push that out to production.

Well, let's start with a simple one, let's start with classification. One of the requirements for using Classification Learner is that the output response has to be a discrete variable. In my case, I check that box because with a BB or an A, there's nothing in between these that would give us a continuous spectrum. It's either a AAA, a AA, an A, et cetera.

Let's open up our Classification Learner app. And here is our Classification Learner app. And the way this application works is the easiest way to kind of learn how to use our applications is just move from left to right on the tabs and that's a very easy way for, even for myself when I'm learning a new app, I like to kind of move from left to right kind of like reading a book and starting my process right here.

So the first thing I do is I create a new session and choose my data set. In this case there's only one variable in my workspace, which is the variable I showed you. I'm going to pick which is my target variable, my response variable. And in this case, the rating, the AAAs, the AAs, the BBs, that's my response variable. And the predictors, which you're seeing down here, are the ones that I believe can help me predict what rating I'm dealing with.

The second step is the validation. When you're setting up validation right here, we have cross-validation, holdout validation, and no validation. So there's a variety of different validation techniques available for us to leverage, whether it's cross-validation, which is a more robust way of protecting against overfitting, or, if you have your own validation techniques to ensure out-of-sample performance of your AI model, then you can pick no validation.
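As a rough illustration of what the cross-validation option is doing, here is a minimal Python sketch. It is not the Classification Learner implementation; the function names `k_fold_indices` and `cross_validate`, the majority-class "model," and the toy labels are all assumptions made up for this example.

```python
def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds of near-equal size.

    In practice the rows are usually shuffled first; that step is omitted
    here to keep the sketch deterministic.
    """
    folds = []
    start = 0
    for i in range(k):
        size = n_samples // k + (1 if i < n_samples % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validate(n_samples, k, fit, score):
    """Average the validation score over k folds.

    fit(train_idx) returns a model; score(model, test_idx) returns a float.
    """
    folds = k_fold_indices(n_samples, k)
    scores = []
    for i, test_idx in enumerate(folds):
        # Train on every fold except the one held out for validation.
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        model = fit(train_idx)
        scores.append(score(model, test_idx))
    return sum(scores) / k


# Hypothetical toy labels and a trivial "predict the majority class" model.
labels = ["A", "A", "B", "A", "B", "A", "A", "B", "A", "A"]


def fit_majority(train_idx):
    counts = {}
    for i in train_idx:
        counts[labels[i]] = counts.get(labels[i], 0) + 1
    return max(counts, key=counts.get)


def accuracy(model, test_idx):
    return sum(labels[i] == model for i in test_idx) / len(test_idx)


folds = k_fold_indices(len(labels), 3)
cv_accuracy = cross_validate(len(labels), 3, fit_majority, accuracy)
print(f"cross-validated accuracy: {cv_accuracy:.3f}")
```

The point of the design is that every row is scored exactly once by a model that never saw it during training, which is what makes the reported accuracy an out-of-sample estimate.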

I'm just going to choose cross-validation and click on start. And automatically the Classification Learner opens up; MATLAB is already building explainability and visualization into our capabilities. So this was a very powerful thing. When I was working at a fund here in New York, I was able to quickly take this up to the chief risk officer or the chief investment officer and show them a scatter plot. You can see here, without looking at much detail, I can say that I'm plotting my working capital to total assets against retained earnings to total assets, and anything below 0 on the retained earnings to total assets and below 0 on the working capital to total assets appears to have a CCC rating. So I don't need any AI; this is just an indicator right here. Similarly the AAAs seem to really like to sit up here, above a certain threshold.

And this gets me some sort of intuition on the data itself, right. So you can actually hover above the data points and get a feel for things. You can actually click on things, remove them, put them back in, you can remove the center area and really kind of like figure out what those classification steps are. And this little visual tool is unbelievably powerful just to start generating insight around it.

The next big step in explaining my models is saying, let's first build a model. So I'm going to look at a variety of different models right here. Sometimes when I'm trying to explain these to the executives I may have to dig through a lot of online documentation, but what we've done is, if I'm using linear discriminant for example, a starting point for explanation is to hover over the model itself: "fast, easy to interpret discriminant classifier, creates linear boundaries between classes."

OK easy to understand, easy to communicate. It gives you language that you can leverage. And that's one of the big things about these tools for me personally when I've been using them is they really empower me to be able to quickly articulate the actual classification separations within my discrete classifications here.

But say I didn't want to pick an algorithm; I just want to get a quick feel for whether there is predictability in my data set itself. Because sometimes you might pick a random set of predictors, and I can overfit to the noise, so out of sample we don't do a great job. So one of the options here is All Quick-To-Train, and I'm going to click on Train. I'm already automatically using parallel computing, so you'll notice that since I have four cores on my laptop, it's automatically training four at a time.

So without any additional work I can immediately see that my medium tree is about a 73% accuracy right here. But there's a deeper insight to be gained than just saying, OK, my medium tree was 73%. Most data scientists are like oh, but you don't have control over sort of the features that are available. Well you do.

So if I wanted to create a template right here, I could choose advanced tuning and choose parameters. I can say maximum number of splits, let's increase that to-- actually, let's switch the split criterion to Twoing rule, and it creates a template right here with the split criterion adjusted to Twoing rule. I click on train and it's going through the training process. And you can see here this new model with the Twoing rule actually beat out all the other models.

That's great. But one of the keys here in explainability is you have to understand the business problem we're trying to solve. My goal is not just to target improving accuracy; my goal is really to identify, from a mandate perspective, which bonds I am able to trade. And so there are certain mandates, for example for investment grade bonds, that I may want to take a look at. That's where some of these other validation techniques start becoming very, very useful.

So one of the most popular ones is the confusion matrix. I can click on confusion matrix, and for investment grade bonds I can immediately get a sense of the percentage rating right here. I can turn on true positive rate and see that 93% of my AAA bonds were correctly classified as AAA, 81% were correctly classified as AA, and 63% were correctly classified as single A. So you immediately get a sense of the spread around the diagonal itself. And that's a good way of getting a feel for how robust your algorithm is to noise, to random data. So maybe you need to do a better job preprocessing your data.
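To make the true positive rate view concrete, here is a small Python sketch of how a per-class rate falls out of a confusion matrix. The ratings and predictions below are invented for illustration (they are not the bond data from the demo), and the helper names are assumptions.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t]][index[p]] += 1
    return matrix


def true_positive_rates(matrix, classes):
    """Fraction of each true class that landed on the diagonal."""
    rates = {}
    for i, c in enumerate(classes):
        row_total = sum(matrix[i])
        rates[c] = matrix[i][i] / row_total if row_total else float("nan")
    return rates


# Hypothetical ratings, made up for this sketch.
classes = ["AAA", "AA", "A"]
true = ["AAA", "AAA", "AAA", "AAA", "AA", "AA", "AA", "A", "A", "A"]
pred = ["AAA", "AAA", "AAA", "AA", "AA", "AA", "A", "A", "A", "AA"]

matrix = confusion_matrix(true, pred, classes)
rates = true_positive_rates(matrix, classes)
for c in classes:
    print(f"{c}: {rates[c]:.0%} correctly classified")
```

Off-diagonal counts close to the diagonal (an AAA predicted as AA, say) are the "spread around the diagonal" mentioned above; counts far from the diagonal would be a more serious warning sign.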

One other thing that's important is once you've built your model, you have the ability to export the model or generate a function. So I'm just going to show you that last piece. From a transparency and auditability standpoint, using a tool is great, but we're all doing the same thing. I've noticed that a lot of data scientists copy and paste code all over the place, and that just creates a lot of model risk and a lot of model integration risk, because if one person makes a mistake you're talking about all of Wall Street making a similar mistake. And we saw that happen with the assumptions around the copula and the subprime mortgage crisis.

So one of the keys here is that for a lot of our models we're adding explainability directly into our documentation. You can see here that, without doing much, it's auto-generated code. I didn't write any of this code, and it's automatically telling me what this code is actually doing. It's saying, all right, it's performing cross-validation in this section right here. It's computing validation predictions and finally computing a validation accuracy.

So you can see here you have complete control, including over the parameter choices that you're making. I'm going to save this. And now, if you have some new data, you can use this. I'm going to create a new model right here, train classifier. This is the auto-generated code; I'm going to feed in my data and generate my model. And I'm going to look at the methods available on this model. So you can see here there is a classification tree, and we've noticed that everyone kind of does the same thing even in terms of standard follow-up methods: once you build the model, then what's the next step in the workflow?

Well, one thing I'd like to do is maybe view the model. So I might try that. I take my model itself, set the mode to graph, and hit enter. And without much effort I'm already able to fit this into a PowerPoint slide and really communicate that the factor really driving the black box model is the market value of equity to book value of total debt.

I can see that-- now there was a really famous quant who said that when you look at the traditional factor models everything is baked into the epsilon term at the end but in this case right now with the decision trees, one of the powerful capabilities is it does not make assumptions on the interrelationship between terms. And so you can actually take your-- you can take a look at these decision tree leaves and you can see that it's naturally mixing market value of equity and retained earnings. So that is a relationship that may not be apparent if you just look at straight betas on a traditional linear model. And we know that factors have a tremendous amount of interrelationship and mixing that do occur naturally and so this is a nice way to kind of communicate a decision tree as sort of the next step up from what you're trying to do.

The other thing you may want to also look at is predictor importance. Predictor importance is all about communicating what is important. Let's see, the predictor importance on the model itself. Oops, I've misspelled it. Yep, there you go. And so you can see here on the predictor importance that the second factor is important and the fourth factor is extremely important.

So I like to go back and forth between the apps. If it told me the fourth factor was important, I could come in here, one, two, three, four, so the market value of equity to book value of total debt, as we saw in the decision tree, was the most important. So I might be interested in removing that and understanding how much of a loss in information there is by removing a factor.

And you can see, without having to program this, I can easily remove a single factor, click on train, and see my accuracy dropped by 13.4%. Then I can take a look at the buckets and see which bucket is being affected most by just comparing and clicking on the two of them. You can see there's a pretty massive drop in performance in the AA section right here, or even in the A section. So you go from 80% to half of that on the AA.

So by communicating to compliance groups that you're really diagnosing what's happening in sample versus out of sample, you're starting to communicate a very powerful story that becomes very hard to refute. And it shows that you're thinking about the data, you're really thinking about the challenges of working with these models. If you have any questions, feel free to post them in the chat and I can follow up at the end.

All right, so we got a little glimpse into the basic explainability, but let's ask a simple question: why is explainability so important, and how do we frame the question of explainability? So here's a really interesting case, and this is a very common situation in the industry. There was a credit card that was put out by Apple that got some regulatory scrutiny, and it showed that there was bias against women in the actual showcasing of this credit card. And as you can imagine, this came up because the data was really heavily biasing the algorithm. The data seemed to be skewed very heavily toward a particular type of bias.

It becomes less obvious and less transparent when things are not as black and white in terms of where the bias might be coming from. In this case it was due to gender. In the cases of the Tier 1 banks I'm working with, it's actually coming from mislabeling.

So let's talk now a little bit more about what many of you have come to the session for. Many of you may be familiar with our tools, but I want to dig a little deeper and show you there is a lot of depth to explainability. So let's take this simple example right here and ask a very simple question. We saw a AAA rating, a AA rating; now let's look at a loan, a retail loan right here. And it doesn't really matter what the loans are or what the AI problem is; these metrics are very powerful and applicable to a lot of different situations. So if I can switch over, switch out of this.

All right, let's take a look at this algorithm right here. I put a little explainer application on the front end; I built this in MATLAB just to be able to communicate the problem. I'm going to expand this out and load in some data. In this case, you can see I have different customers. I have the customer age, I have the time at address, residential status (homeowner, tenant, et cetera). I have employment status (employed, unknown, et cetera). I have the customer income, time with the bank, and I have a corresponding status.

So we have a pre-built model. So as opposed to a model that we're training like I did in the last example, I'm actually going to just load in a pre-built model. And so the pre-built model basically produces the prediction so like a score. So many of you might be familiar with like a FICO score, and if I looked at this individual right here depending on which FICO or depending on which score you're talking about, he has a corresponding rating of 700.

So now the question is, why did he get a score of 700? In the credit card example that I showed you with Apple, I couldn't explain to you why there was a bias towards the female gender. And in this case, the question really comes down to: can I explain it in terms of all the other bias, all the other factors that are drivers in this prediction?

So there are several different metrics that we can run sensitivities on, and one of the popular sensitivity metrics that we can use is ICE. I'm actually going to run all three and then we'll get that out of the way. And I'm going to choose the predictors as all predictors. So the conditional expectation on the predictor itself is going to be a way of getting a sensitivity to these individual predictors. Let me run this. I have parallel computing turned on, so it's going to take a few seconds. We can run some of the Monte Carlo simulations to be able to estimate LIME and then Shapley.

There you go. All right, so this example right here, this is what individual conditional expectations look like. Essentially you have the predictor on the x-axis. And you have the response variable, in this case the actual score, on the y-axis. So you can see that for this particular AI model customer income seems to have sort of that, it's a non-linear effect. As your customer income goes up, so does your score.

But you can see it's not a linear one; there's actually a curvature that's been captured by the model itself. Even though this is a highly nonlinear model, you're able to explain what the sensitivity of this model is relative to these different predictors. You can see time with the bank is kind of the opposite of customer income; it's almost like an exponential curve right here. The score apparently goes down with increasing account balance, et cetera. There are a couple of questions that came up and we'll start dealing with them during the breaks.

All right, so let's look at some of the other metrics. So we looked at ICE. Related to that-- let me pull it up here visually-- is the partial dependence plot, which gives you a way of looking at the partial dependence on individual predictors. And this is the one I showed you just now, individual conditional expectation. It's the same idea as PDP, but it draws a separate line for each data point rather than just the average. And that's the one where we saw the sensitivities of the score relative to the predictor itself.
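The relationship between ICE and PDP can be sketched in a few lines of Python. This is an illustration only: `toy_score` is a hypothetical stand-in for the pre-built scoring model, and the customers and grid values are made up.

```python
def toy_score(row):
    """Hypothetical scoring model: row = (income, years_with_bank)."""
    income, years = row
    return 500 + 40 * income ** 0.5 + 10 * years


def ice_curves(model, rows, feature_index, grid):
    """One curve per row: vary a single feature, hold the others fixed."""
    curves = []
    for row in rows:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature_index] = value
            curve.append(model(tuple(modified)))
        curves.append(curve)
    return curves


def partial_dependence(curves):
    """The PDP is the point-by-point average of the ICE curves."""
    n = len(curves)
    return [sum(column) / n for column in zip(*curves)]


# Three made-up customers and a coarse grid of income values.
rows = [(25.0, 1.0), (64.0, 5.0), (100.0, 10.0)]
grid = [0.0, 25.0, 100.0]

curves = ice_curves(toy_score, rows, feature_index=0, grid=grid)
pdp = partial_dependence(curves)
for row, curve in zip(rows, curves):
    print(row, curve)
print("PDP:", pdp)
```

Each ICE curve shows how one customer's score would move if only their income changed, so curvature or crossing lines that an averaged PDP would hide remain visible.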

Another very popular metric-- I'm going to start with a visual-- is LIME. This is the one you might hear about when people talk about interpretability and explainability. People start using LIME right away, and it's very popular, especially in image processing examples. I'm going to show you why, and I think that's the easiest way to articulate it. It's basically trying to understand, if you have a set of factors, how much each factor contributes to the overall explanation of your model itself.

So how local interpretable model-agnostic explanations work is it subdivides your input into components, looks at each one in a localized fashion, and tries to understand how each of those corresponds to the overall value. How much does it contribute to the overall value of explaining, in this case, why if I saw the eye right here or that big section over here, I can say maybe this is a frog? Our brains are able to interpret that as possibly being a frog without seeing anything else. And that's how local interpretable model-agnostic explanations approach it.

So we saw the PDP and ICE; let's pull up LIME right here. You can see LIME tries to say what's positively contributing, so you can see here is the 0 line, what's positively contributing to the explanation of this individual score. So this is not choosing multiple scores; this is choosing a single score and basically trying to explain why the model is producing what it's producing. You can see here time at the bank seems to be an important factor, residential status seems to be important, other credit cards, customer income seems to be important. Less important is employment status, apparently, according to this.

The problem-- and this is something that's very important to look at as a metric of how good the explainability is-- is the difference between the actual score, in this case 700, and what was actually produced, what was explained by our LIME model. And you can see here there's actually a discrepancy. So this is not a very good way of explaining the score, at least for this particular customer.
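The discrepancy described here can be reproduced with a simplified LIME-style local surrogate in Python. This is a sketch under stated assumptions, not the published LIME algorithm or MATLAB's implementation: it perturbs the query point with Gaussian noise, weights samples with a Gaussian proximity kernel, and fits a weighted linear model. All names and parameter defaults (`lime_explain`, `scale`, `kernel_width`) are hypothetical.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def lime_explain(model, point, n_samples=500, scale=1.0, kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around `point`.

    Returns (coefficients, surrogate prediction at the point, fidelity gap),
    where the gap is the black-box score minus the surrogate prediction.
    """
    rng = random.Random(seed)
    d = len(point)
    # Accumulate the weighted normal equations (X'WX) beta = X'Wy.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [p + o for p, o in zip(point, offsets)]
        weight = math.exp(-sum(o * o for o in offsets) / kernel_width ** 2)
        # Features are centered on the query point, so the fitted intercept
        # is the surrogate's prediction at the point itself.
        features = [1.0] + offsets
        y = model(sample)
        for i in range(d + 1):
            xtwy[i] += weight * features[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * features[i] * features[j]
    beta = solve_linear_system(xtwx, xtwy)
    intercept, coefs = beta[0], beta[1:]
    return coefs, intercept, model(list(point)) - intercept


# A linear black box is explained almost exactly; a curved one is not.
linear_coefs, _, linear_gap = lime_explain(lambda x: 3 + 2 * x[0] - x[1], [1.0, 2.0])
_, _, curved_gap = lime_explain(lambda x: x[0] ** 2, [1.0])
print("linear model gap:", linear_gap)
print("curved model gap:", curved_gap)
```

When the black box is curved near the customer being explained, a straight-line surrogate cannot match it, and the gap between the actual score and the surrogate's score is one simple way to quantify how much to trust the explanation.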

One of the other very popular metrics, which will look very similar to this, is Shapley, and I'm going to come back to that with a visual in a second. Shapley is a very famous way of looking at it from a game theory perspective, trying to explain which of the factors are really driving this model. Time with the bank, so relationships, seems to be a very big factor in this model. And if you look at the score versus the expected score, you can see that the discrepancy is much, much less.

So this is actually a good thing for helping articulate to regulators why I'm getting a score of 700. According to this model, I'm seeing that the time at the bank is very important and the residential status is important. It reinforces what was said before: employment status is not important.

Keep in mind this is localized. When I showed you the original explanation at the beginning, right here, and I'll just pull that up for clarity, the local explanations that you're seeing over here, colored in yellow, are the LIME and Shapley type calculations that we were just showing you. Some of the more global ones are predictor importance and partial dependence plots.

All right, so LIME is pretty popular like I said. It's actually very popular in NLP, and I am going to show you a glimpse into NLP because NLP is a little bit more difficult to articulate, and I'm going to use that as a way to wrap up the presentation as well. Now let's take a step back from explainability metrics. I really like this example. It's a very, very powerful example that reminds me about explainability of the data itself. A lot of data science teams don't think about this. They're looking for more and more data, but they're not thinking about the business impact or the business intuition behind how the data is gathered.

So in this example, there was a scientist, I believe, or a mathematician in the UK. His job was to try and figure out where to put armor on this airplane. And these were the bullet holes that actually hit the airplane. Most of us might say, without really knowing statistics, there seems to be a concentration around the wings, and maybe there's a concentration around this area right here. Maybe we need to really reinforce those areas, and maybe add some reinforcement right here.

The problem, and this is what he identified, is that the place you put the armor is around the areas with no bullet holes, not around the places where there are bullet holes. The rationale for that is these are the planes that actually came back. What we're looking at is data that has been gathered from planes that survived. So if the bullets hit over here, that means the plane survived. They called this the graveyard of data, and that's the mindset we're really putting ourselves into: how do we articulate to our executives about the missing data as opposed to the data that we've seen?
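The survivorship effect in this story can be reproduced with a small simulation. The sketch below uses made-up lethality numbers per section of the plane (purely hypothetical, chosen only to illustrate the point) and compares where hits land across all planes with where hits are observed on the planes that came back.

```python
import random

# Hypothetical probability that a single hit in each section downs the plane.
LETHALITY = {"engine": 0.8, "cockpit": 0.6, "fuselage": 0.1, "wings": 0.1}

def simulate(n_planes=10000, seed=1):
    rng = random.Random(seed)
    sections = list(LETHALITY)
    all_hits = dict.fromkeys(sections, 0)
    survivor_hits = dict.fromkeys(sections, 0)
    for _ in range(n_planes):
        # Each plane takes 1 to 4 hits, spread uniformly over the sections.
        hits = [rng.choice(sections) for _ in range(rng.randint(1, 4))]
        survived = all(rng.random() > LETHALITY[s] for s in hits)
        for s in hits:
            all_hits[s] += 1
            if survived:
                survivor_hits[s] += 1  # only these hits are ever observed
    return all_hits, survivor_hits

def share(counts):
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()}

all_hits, survivor_hits = simulate()
true_share = share(all_hits)
observed_share = share(survivor_hits)

for section in LETHALITY:
    print(f"{section:9s} true share {true_share[section]:.2f}   "
          f"observed on survivors {observed_share[section]:.2f}")
```

Hits are spread evenly across sections in truth, but the returning planes show few engine and cockpit hits. The sections that look safest in the observed data are the ones where a hit was most likely to remove the plane from the sample.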

So we talked about Shapley, and I wanted to touch on this just a little bit. With Shapley, this is a very famous equation right here. The original author won a Nobel Prize for his work in game theory, showing how you can explain an outcome by creating coalitions of factors and trying to understand what happens when you withhold different factors from your model. So it's approaching it from a game theory perspective, trying to see how each factor is actually impacting the overall value.
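The slide itself is not reproduced in this transcript. For reference, the standard textbook form of the Shapley value is shown below, where $N$ is the set of all factors, $S$ is a coalition that excludes factor $i$, and $v(S)$ is the value obtained when only the factors in $S$ are included. The notation is the conventional one and is an assumption about what the slide showed.

```latex
\phi_i(v) \;=\; \sum_{S \subseteq N \setminus \{i\}}
  \frac{|S|!\,\bigl(|N| - |S| - 1\bigr)!}{|N|!}\,
  \Bigl( v\bigl(S \cup \{i\}\bigr) - v(S) \Bigr),
\qquad
\sum_{i \in N} \phi_i(v) \;=\; v(N) - v(\varnothing).
```

The second identity, usually called efficiency, says the per-factor contributions add up exactly to the difference between the full-model value and the value with every factor withheld.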

And so what you're seeing on the left-hand side right here is the value function that we're attributing to individual factors. We're saying, what's the value given a single factor: customer income, time at the bank, et cetera. When we start to look at this and communicate with our governance teams, we have a lot of formulations that have already been articulated for us. So my recommendation, when you're trying to understand a method, is don't just look at the code.
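As a rough illustration of coalitions and withheld factors, the sketch below computes exact Shapley values for a toy three-factor score by enumerating every coalition. The scoring function, the customer, and the baseline are hypothetical. Treating a withheld factor as "set to its baseline value" is one common convention, assumed here for simplicity.

```python
from itertools import combinations
from math import factorial

# Hypothetical baseline (reference customer) and the customer being explained.
BASELINE = {"time_at_bank": 2.0, "income": 30.0, "other_cards": 1.0}
CUSTOMER = {"time_at_bank": 12.0, "income": 55.0, "other_cards": 0.0}

def score(x):
    # Hypothetical scoring model with one interaction term.
    return (550 + 8 * x["time_at_bank"] + 1.5 * x["income"]
            - 20 * x["other_cards"] + 0.1 * x["time_at_bank"] * x["income"])

def value(coalition):
    # Factors in the coalition take the customer's values; withheld factors
    # fall back to the baseline.
    x = {k: (CUSTOMER[k] if k in coalition else BASELINE[k]) for k in CUSTOMER}
    return score(x)

def shapley_values():
    names = list(CUSTOMER)
    n = len(names)
    phi = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi

phi = shapley_values()
for name, contribution in phi.items():
    print(f"{name:13s} {contribution:+.2f}")
print(f"sum of contributions: {sum(phi.values()):.2f}")
print(f"score - baseline:     {score(CUSTOMER) - score(BASELINE):.2f}")
```

The contributions sum exactly to the customer's score minus the baseline score. Exact enumeration grows exponentially with the number of factors, so practical tools approximate it.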

MathWorks does a really great job of communicating the actual mathematics itself. So I'm going to pull up the documentation for a second. This is based on my experiences with governance teams. What we do is go into an algorithm. I'm going to pick an algorithm right here. Let's go to Statistics and Machine Learning. You can see there's a whole range of algorithms available. We'll just pick a simple one, regression.

Let's say I was trying to use, I don't know, generalized regression. Let's choose a generalized linear regression right here. I can take a look at some of the actual models that have been built, I can look at the topic areas, and I can start looking at the analysis and the mathematics behind the explanation. MathWorks spends a lot of time on this. We have a phenomenal doc team that puts together a lot of articulation around why the models are doing what they're doing, as well as giving you some of the intuition behind the model itself. In addition, what becomes very useful for articulation is that we provide a lot of pre-built examples that you can run straight from the documentation. That may help you communicate with your governance teams. And so let me pull this up.

All right, so let's take a step further here and actually challenge the concepts of explainability. This is what we've been struggling with and working hand in glove with the Tier 1 banks on. Sometime in March of last year, one of the Tier 1 banks came to us and said they were working on a machine learning AI problem. Their data science team had produced an explainability analysis. It took about three months to produce, and the accuracy they came up with was 70%. They asked MathWorks, could we help them do better, but with explainability at the forefront?

The executive team and the data science team had a very big problem communicating with each other. And so you can imagine, we had to try and figure out how to help bridge the gap between what the data science teams were doing and what we were doing in terms of our pre-built algorithms.

It turned out we were able to replicate the data science team's result in about two weeks with a 72 to 73% accuracy. And then we were able to pump it up to about an 87% accuracy in a couple of months, or a couple of weeks actually, with explainability around what features are really driving it. And I'm going to show you an example coming from the bank, or from several banks actually, around this explainability question.

So to start with, I'm going to introduce the concept of deep learning, because it's going to be a basis for a lot of what we're doing here. When it comes to deep learning, there is a lot of different functionality available to use. Let me pull this guy up here. And if I construct a network, let me just create a GoogLeNet right here. So this is just a pre-built network.

So one of the first things you may want to do is take a look at our Deep Network Designer. This is a great way to get started. I used to write a lot of code when I started working on artificial intelligence many, many years ago, and I would write and build a lot of the neural networks by hand.

In this case, this is a really great tool to help get started on building your neural network. You can see here I just used one of the pre-built models, GoogLeNet, in Deep Network Designer. And you can see there are a lot of models that are pretty common in the industry, like SqueezeNet, ResNet-50, GoogLeNet like I mentioned, AlexNet, et cetera. There's a variety of models available. You can just open one up and get started that way. Or you can say, from the workspace, import the model that you have. In this case, I have a 144-layer GoogLeNet network. It takes a few seconds.

Yep. And so what we have here is a network with a complex structure to it. This is completely movable. You can modify the parameters in this network, and you can construct new networks out of it. That's very common in the image processing space. They take these complex networks in a transfer learning situation, where they then have the opportunity to take this network and apply it to different situations.

With Deep Network Designer you have the ability to export and generate the code, exactly like we did before, and it writes out all the code for you, so you don't have to start from scratch. If you want to do the handwriting yourself, that's great, but visually this is a very powerful way for me to start my designs for some of the more complex networks. For some of the simpler networks, I still start with the layering of the networks in this sort of fashion right here. You can see here the layers are laid out and all tied together to produce my layer graph. And then finally, I can plot and visualize this. So this is all code that constructs a 144-layer network.

In the interest of time, I'm going to switch over now to explainability, and I'm going to pull up an example. I'm just using weather data for now, but it really doesn't matter. So let's start with a weather report. I'm just going to pull that up. The basic description of the problem is this: given a piece of text, in this case "heat indices of 110 degrees or greater or higher," can you predict the actual label? This is a supervised learning problem, so the actual label is given to us. You can see here "excessive heat" is given to us.

One of the questions I noticed was, how do you explain things in terms of bias? What's really driving the bias of your network? And that's exactly one of the big challenges in your labeling process. The place where we see a tremendous amount of bias being introduced is the labeling itself, which could have mistakes in it.

And so what we did for this bank, and what's being leveraged in some of the other banks as well, is first build a classifier. In this case, I built a neural network classifier. But then you have the ability to really dive down and say, OK, why is there a discrepancy on some of these? So I can compare the model's prediction with the actual label for this description right here: "snowfall amounts of 15 to 24 inches were reported across Stafford County." The actual was winter storm. My classification was winter weather.
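A simple way to start that kind of dive is to list the records where the prediction and the given label disagree, then count which label pairs get confused most often. The sketch below does that over a handful of records. Apart from the snowfall example quoted above, the texts, labels, and predictions are illustrative placeholders rather than output from the classifier in the demo.

```python
from collections import Counter

# (description, actual label, predicted label); mostly illustrative placeholders.
records = [
    ("Snowfall amounts of 15 to 24 inches were reported across Stafford County",
     "Winter Storm", "Winter Weather"),
    ("Heat indices of 110 degrees or higher", "Excessive Heat", "Excessive Heat"),
    ("Up to a foot of snow fell overnight", "Winter Storm", "Winter Weather"),
    ("Light snow and freezing drizzle", "Winter Weather", "Winter Weather"),
    ("Heat index values near 105 degrees", "Heat", "Excessive Heat"),
]

# Keep only the records where the model disagrees with the given label.
mismatches = [(text, actual, predicted)
              for text, actual, predicted in records
              if actual != predicted]

# Count how often each (actual, predicted) pair is confused.
confusions = Counter((actual, predicted) for _, actual, predicted in mismatches)

for (actual, predicted), count in confusions.most_common():
    print(f"{count} x actual={actual!r} predicted={predicted!r}")
```

Pairs that are confused repeatedly are worth reviewing with the people who own the labels. They can point to overlapping label definitions or labeling mistakes rather than a modeling problem.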

So even just at the top level, my classifier is producing something very similar, but maybe there are too many labels. What we did was take that a step further and really help explain why my model is choosing a specific classification. Now if anyone's interested in getting into the details of it, because this gets into the actual deep learning capabilities that we have, I'm happy to really get into it.

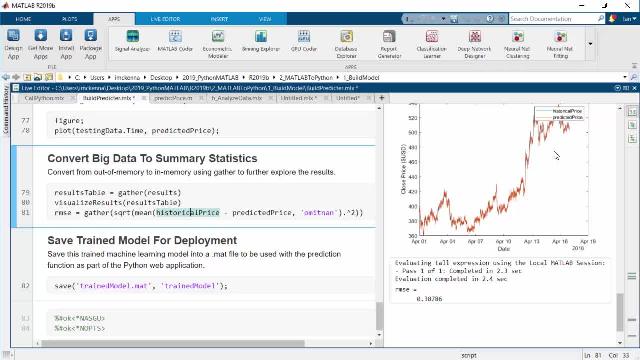

But I'm actually going to pull up one example and use that to give you a glimpse into how I start explaining deep networks. In this example right here I have a lot of actual financial data. A lot of the names have been scrubbed, and the data has also been scrubbed, so that way we can really communicate. Oops, let me pull up the news here. Yeah, perfect.

So you can see here there is some news around this fund X. I've intentionally mixed a lot of data in here, so there's no way to reverse engineer this. In this case there are 144,000 records, and the data has been constructed, but you can see that it's based on real data. The target we're trying to figure out is, is this a high-risk articulation or not? Is it a positive claim, or is there a risk issue associated with the communication in the text itself?

And so to solve that problem I built a deep network, and I'm just going to run it. I have a GPU connected over here, and it's going to go through and build a model that maps the training data to the target variable. In a second I'm going to pull up a visual. I believe it's running, I just want to double check. Oh, I apologize. It's not running because I've already run this before, so I'm going to have to delete my network. Try that again. Hopefully that deletes it. Stop this. OK, so I deleted it, and I'm going to rerun it. It was just loading the prior network that I ran this morning before this session.

And so what you're seeing here now is the deep network that we're training on the GPU. Here's the validation accuracy, and that's the starting point for validating out of sample. You'll see I've set the validation frequency to 50, so every 50 iterations you're going to revalidate out of sample. You can see in this particular example the accuracy is extremely high. There's a rationale for that: I just wanted to make sure I could give you a quick run-through live. In actuality this works pretty well, producing around an 89% accuracy using the deep networks.
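The idea of a validation frequency can be sketched independently of any deep learning toolbox. The toy loop below trains a one-parameter model with mini-batch gradient descent and evaluates it on held-out data every 50 iterations. The data, learning rate, and batch size are made up for illustration, and it tracks validation loss rather than the validation accuracy shown in the demo.

```python
import random

rng = random.Random(0)

def make_data(n):
    # Hypothetical data: y = 3x plus a little noise.
    xs = [rng.random() for _ in range(n)]
    return [(x, 3.0 * x + rng.gauss(0, 0.1)) for x in xs]

train, validation = make_data(1000), make_data(200)

def mean_squared_error(w, data):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

VALIDATION_FREQUENCY = 50  # re-check held-out data every 50 iterations
LEARNING_RATE = 0.05
BATCH_SIZE = 8

w = 0.0
history = []  # (iteration, validation loss)
for iteration in range(1, 301):
    batch = rng.sample(train, BATCH_SIZE)
    gradient = sum(2 * (w * x - y) * x for x, y in batch) / BATCH_SIZE
    w -= LEARNING_RATE * gradient
    if iteration % VALIDATION_FREQUENCY == 0:
        # Out-of-sample check on data the model never trains on.
        history.append((iteration, mean_squared_error(w, validation)))

for iteration, loss in history:
    print(f"iteration {iteration:3d}  validation loss {loss:.4f}")
```

Validating more often gives a finer view of out-of-sample behavior at the cost of extra computation during training.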

So there's a lot more to say about communicating around deep networks. But in the interest of time, I'm going to switch over to the final piece right here and wrap up with this last thought. When you start talking about explainability, don't forget to think about the governance team, and don't forget to think about the executives.

The language is extremely important. We work very, very closely with our governance teams. We study SR 11-7, for example, along with the other governance standards, BCBS 239 and a variety of others, to try and really communicate with them. There's a lot that comes into play when we start thinking about explainability and governance. And so with that, I think there's a tremendous amount of opportunity.

So my recommendation is start thinking about how you want to use interpretability as part of your governance processes, how do you want to think about interpretability and key metrics to communicate with your executives. With that I'm going to jump over to a few questions.