Deep Learning Overview for Images and Video | Deep Learning Webinars 2020, Part 1

From the series: Deep Learning Webinars 2020

Learn how to use MATLAB® to exploit disruptive technologies like deep learning. You will see:

- Where deep learning is being applied in engineering and science and how it is driving the development of MATLAB

- How you can research, develop, and deploy your own deep learning application

- What MathWorks engineers can do to support your success with deep learning

You will also see demonstrations of technology such as:

- Automating ground truth image labeling

- Training and evaluating an object detector

- Generating optimized native embedded code

Published: 20 Oct 2020

So the aim of this session is to provide a clear view of where deep learning is being applied in engineering and science and how it's driving MathWorks development efforts, how you can research, develop, and deploy your own deep learning solution, and what MathWorks engineers can do to help you achieve success with deep learning.

Deep learning is a key technology driving the current artificial intelligence megatrends. AI's origins date back to the dawn of computing, and the field evolved to include machine learning, of which deep learning is now a subset. Popular consumer applications include speech recognition in smart speakers, face detection in phone cameras, and autonomous self-driving systems in vehicles.

You may have also heard of some other mainstream applications of deep learning. But how many of them would you consider applicable to engineering and science? One such example is deep learning object detection, where a trained deep learning detector scans an image or a video frame for certain objects. It not only determines whether an object is in the image but also draws a bounding box around it.

Shell uses this same technique to locate tags that identify different machinery on site. Automating this process allows Shell to perform maintenance on its equipment more efficiently.

At MathWorks, we are seeing applications developed with our software across multiple industries: in aerospace, where Airbus performs automatic defect detection with a model deployed on an embedded GPU; in automotive, where Denso models, simulates, and deploys models for complex engine control units (ECUs); and in energy production, where Shell, once again, has developed another model, this time for earthquake detection using signal processing techniques.

In research, published papers show MATLAB being used to automatically predict cancer from complex medical images and to convert the brainwaves of ALS patients into words and phrases.

To help you understand how we got to where we are today, let's take a look at the evolution of deep learning support at MathWorks. Does anyone know when MATLAB first had the ability to train neural networks? The answer is the mid '90s, with the initial release of Neural Network Toolbox.

When did deep learning first come to MATLAB? A popular framework, MatConvNet, from the Visual Geometry Group at Oxford, came out in 2014, but MathWorks first officially supported deep learning in 2016. Since then, we've been releasing a stream of major updates. Some of these updates relate to the broader area of deep learning, others, like our apps, focus on productivity, and some focus on deployment.

Many ask what's coming next in MATLAB. The answer is that the field is constantly evolving, and in general its path is difficult to predict. However, you may see that each year the number of updates in MATLAB has increased over the year prior. MathWorks is committed to tackling this growing, ever-evolving field through increased development efforts.

One item I'd especially like to point out is that we now have over 200 examples across multiple domains, which can serve as a great starting point for users looking to apply deep learning in their area of expertise. Apologies if the video quality is a bit jittery, but here are a few examples of these capabilities in action, beginning with deep learning object detection using YOLO v2, and semantic segmentation on video and images.

Next, let's take a look at applications of deep learning to signal processing, where signals are classified based on their time-series content and recognized from their frequency-domain content.

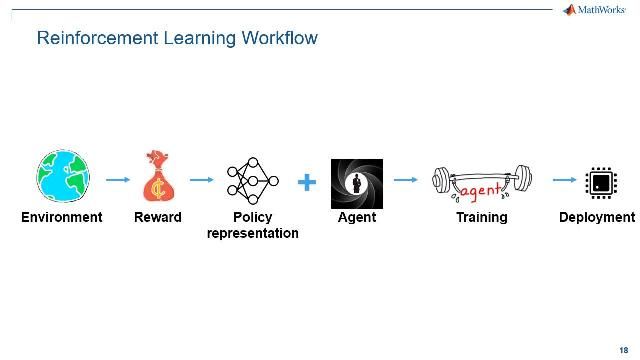

An emerging area is reinforcement learning, a type of machine learning that is often combined with deep learning. It has been shown to solve some really hard control problems, such as teaching a self-driving car to navigate traffic or training a quadruped robot to walk. Those of you who clicked on the registration link may have seen that there is a reinforcement learning webinar coming up. If you are interested in knowing more about reinforcement learning, I encourage you to register for that event.

So far, I've touched on how MATLAB is focused on engineering and science workflows. In addition, there are four more reasons to use MATLAB for deep learning. It enables users to improve productivity with interactive apps that expedite analysis and generate reusable code; it allows models to be deployed anywhere, from embedded to cloud systems; it offers interoperability with open source frameworks such as TensorFlow and PyTorch; and it gives users access to support from experienced MathWorks engineers in development, training, and consulting.

Throughout the remainder of the talk, I'll dive into the details of these key points through several live examples. Many MATLAB users will echo these five key reasons, and the independent global research firm Gartner recognized this as well when it named MathWorks a leader among data science and machine learning platforms for 2020.

What sets us apart is that we understand that success demands more than just an effective model or algorithm. Many of us focus mostly on developing the algorithm, but ultimately algorithms are used to deliver a product or a service to the market. To deliver it successfully, you need to incorporate deep learning across your entire system design workflow. It's our understanding of that necessity and, even more importantly, our family of software tools spanning the entire workflow that make the difference.

Just a quick check: someone has mentioned that they can't hear the presentation. Could someone please confirm whether they can hear me OK?

Can hear you just fine.

Thank you very much. It might be just one person's speaker. Let's get into the specifics of what I'm talking about. A simplified view of a deep learning workflow has four main stages: data prep, algorithms and modeling, simulation and testing, and deployment. Through live demonstrations, I'll go through all four parts of the workflow, talk about some of the challenges within each stage, and point out how MATLAB brings it all together efficiently and effectively to deliver value to you.

And now moving on to the live demo piece, where we'll develop a deep learning solution in MATLAB. Today, we will be developing a deep learning algorithm that can detect objects in image and video data. As an example, we will make use of a prominent algorithm called YOLO v2, which stands for You Only Look Once, version 2.

This is a particularly good deep network for this task for a few reasons. It can detect objects in real time, which makes it versatile for tasks such as autonomous driving and traffic monitoring, and it's faster than some earlier deep learning algorithms, such as R-CNN (Regions with CNN features). We'll step through the workflow stages and build an application from the ground up that can automatically recognize objects. We have a few slides to build up to it before we jump in.

Data preparation and management turns out to be one of the most critical ingredients of success, and it's really, really hard. Today, data comes from multiple sensors and databases; it's structured or unstructured, spans time at different intervals, is noisy, comes from different domains, and so forth. The data needs to be prepared for the next stage, algorithms and modeling, and that's time consuming. Andrej Karpathy gave a great illustration of this in 2018.

While doing his PhD work at Stanford, he did not need to spend much time on the data. He was doing research and focused almost exclusively on creating new AI algorithms. But as director of AI at Tesla, he spends three quarters of his time on the data, because now he's building a car, not a better-performing predictor.

You may find that you end up in the same situation, spending an enormous amount of time on the data. MATLAB and Simulink can make that time more effective and more productive, and the help goes beyond data manipulation. Data labeling is required for any kind of supervised learning algorithm, and it can be time consuming and error prone.

For deep learning, MATLAB provides data labeling apps, like the Image Labeler app, to help with techniques such as semantic segmentation, where regions of an image are classified; that classification can then be applied automatically across the frames of a video. We have other labeling apps too, all of which can generate code for reuse.

Let's now move into the data preparation demo. We're now looking at my MATLAB session, release R2020a. In it, we have a folder of vehicle images: approximately 300 images that we're going to train on. In typical scenarios there may be more, thousands if not millions, but we're going to start with 300.

Moving into the demo, I'm first going to configure my current project to make sure all my paths are set up correctly. Then, rather than loading the data, we're going to point to the data we'd like to label. We'll create pointers to the images in that folder using what's called an image datastore (imageDatastore), which references the files within the folder without reading them into memory. Then I'm going to launch the Image Labeler app to do some ground truth labeling.
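
For readers outside MATLAB, the idea behind a datastore can be sketched in Python: keep only the file paths in memory and read one file at a time on demand. The class `FileDatastore` and its methods are hypothetical, loosely modeled on the read/hasdata/reset pattern of MATLAB datastores; this is not MathWorks' implementation, and decoding the bytes into pixels (which would need an image library) is left out.

```python
from pathlib import Path
from typing import List, Sequence


class FileDatastore:
    """Hypothetical minimal datastore: stores file paths, reads contents lazily."""

    def __init__(self, folder: str, extensions: Sequence[str] = (".jpg", ".png")):
        exts = {e.lower() for e in extensions}
        # Only the paths are kept in memory; no file is opened here.
        self.files: List[Path] = sorted(
            p for p in Path(folder).iterdir()
            if p.is_file() and p.suffix.lower() in exts
        )
        self._cursor = 0

    def __len__(self) -> int:
        return len(self.files)

    def has_data(self) -> bool:
        return self._cursor < len(self.files)

    def read(self) -> bytes:
        """Return the raw bytes of the next file and advance the cursor."""
        if not self.has_data():
            raise IndexError("datastore exhausted; call reset()")
        data = self.files[self._cursor].read_bytes()
        self._cursor += 1
        return data

    def reset(self) -> None:
        """Rewind so the next read() returns the first file again."""
        self._cursor = 0
```

Because only paths are stored, the same object works whether the folder holds 300 images or millions; memory use grows with the number of file names, not with image size.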

You can launch it from the command window by typing imageLabeler, or you can go to the Apps tab at the top. I'm quite fortunate that I have a number of apps available to me for deep learning. We're going to go to the Image Processing and Computer Vision section, and you can see Image Labeler there.

A nice shortcut for finding out which labelers are available is to do a quick search on "labeler", which lists the other labelers. So let's go ahead and launch the Image Labeler with the images from that folder.

It's just loading up, and you can see some of the image frames here. The first thing I'm going to do with this app is start labeling some vehicles. So I'm going to define a label called Vehicle and select OK. If I wanted to, I could go through each of the image frames and draw a box around each vehicle, like so.

This is a nice app that enables me to do this quite quickly. But I want to go even quicker. So I'd like some of this labeling to be even further automated for me. So I'm going to select all of the images that I've loaded in, like so. And then I'm going to go and select one of the algorithms that's built in. You can see here that I have an ACF Vehicle Detector. So I'm going to select this. There's also a People Detector. Or you could add your own algorithm. But I'm going to go ahead and select the vehicle detector.

I'll then select Automate. Automate uses the built-in, pre-trained vehicle detector to run through all of the images I've loaded, detect the vehicles, and draw bounding boxes around them. You may find that it gets most of them but misses a couple, perhaps because it hasn't been trained as much as we would like. Still, it's doing a pretty good job of finding and detecting a lot of those cars.
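
Conceptually, the Automate step runs a pre-trained detector over every selected image and keeps its boxes as draft labels for a person to review. A minimal Python sketch of that loop follows; `detector` is a hypothetical callable returning (box, score) pairs, and the 0.5 score threshold is an arbitrary illustrative choice, not the ACF detector's actual setting.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def auto_label(images: Sequence[str],
               detector: Callable[[str], List[Tuple[Box, float]]],
               min_score: float = 0.5) -> Dict[str, List[Box]]:
    """Run `detector` on each image and keep boxes scoring at least min_score.

    The result is a draft ground truth; images with no confident detection
    get an empty list.
    """
    labels: Dict[str, List[Box]] = {}
    for name in images:
        labels[name] = [box for box, score in detector(name) if score >= min_score]
    return labels


def needs_review(labels: Dict[str, List[Box]]) -> List[str]:
    """Images where automation found nothing; a human should check these first."""
    return [name for name, boxes in labels.items() if not boxes]
```

The design mirrors the demo's point: automation does the bulk of the boxes, and human effort is spent only on reviewing and correcting the misses.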

I'll stop this now and accept the results. Within the label summary, we can see the number of images we've gone through and how many vehicles have been detected within them. If I'd like to go back, check some of those images, and add some of my own labels, I can. But it has partially automated the labeling for me, and that saved me a lot of time.

If we were finished, we could export these ground truth labels to the MATLAB workspace. So for this part of the demonstration, I've highlighted how we used the Image Labeler app to load all of those images and partially automate the labeling of the ground truth bounding boxes. We're now ready to move on to the next stage, training and modeling.

There was a question about whether the material will be available at the end. We will make the slides and these demos available at the end of the session. I'm also recording this session, and we'll make the recording available at a later date.

Let's move on to the second step in the workflow, the one that perhaps gets the most attention: AI-based algorithms and models. Within the MATLAB environment, you have direct access to common algorithms used for classification and prediction, from regression to deep networks to clustering. And with MATLAB, you can easily use pre-built models developed by the broader community, such as ResNet-50 for classification or YOLO for object recognition.

While algorithms and pre-built models are a good start, they're not enough. Examples are the way engineers learn how to use algorithms and find the best approach for their specific problem. We provide a host of examples for building deep learning models in a wide range of domains; as mentioned, we have over 200 in deep learning alone.

I mentioned apps for data preparation. We also provide apps for modeling. For deep learning, we ship apps that automate the training step and provide visualizations for understanding and editing deep networks. In the Deep Network Designer, you can access pre-trained models and visualize an imported model with all of its layers and parameters.

You can create your own model from scratch using building blocks from our layer library, and then analyze that model for warnings or errors in its connections. Then you can input some data, set your training options, train your model, watch its performance as it trains in the training progress plots, and finally export your results and generate code for reuse.

In the latest release, we now have an app to manage experiments, called Experiment Manager. Here's a short video highlighting its capabilities: you can explore hyperparameters across different runs, monitor the training progress of those runs, define custom experiments with MATLAB code, keep track of your work, and export your model results to MATLAB for further use.
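
Stripped to its core, the kind of sweep Experiment Manager automates runs one trial per combination of hyperparameter values and records each result. The Python sketch below shows only that core; `toy_train` is a hypothetical stand-in for a real training run, and the hyperparameter names and values are illustrative, not settings from the demo.

```python
import itertools
from typing import Any, Callable, Dict, List, Sequence


def grid_sweep(space: Dict[str, Sequence[Any]],
               train_and_score: Callable[..., float]) -> List[Dict[str, Any]]:
    """Run one trial per hyperparameter combination; return results best-first."""
    names = list(space)
    results = []
    for values in itertools.product(*(space[n] for n in names)):
        params = dict(zip(names, values))
        score = train_and_score(**params)  # one "run" of the experiment
        results.append({**params, "score": score})
    return sorted(results, key=lambda r: r["score"], reverse=True)


if __name__ == "__main__":
    def toy_train(learning_rate: float, batch_size: int) -> float:
        # Hypothetical objective standing in for validation accuracy; higher is better.
        return -abs(learning_rate - 1e-3) * 1000 - abs(batch_size - 16) / 16

    for run in grid_sweep({"learning_rate": [1e-4, 1e-3, 1e-2],
                           "batch_size": [8, 16]}, toy_train):
        print(run)
```

An exhaustive grid grows multiplicatively with each hyperparameter, which is why tracking and comparing runs in a dedicated tool becomes valuable quickly.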

Deep learning training can take a long time. With cloud support and third-party integrations, you can move the training to the appropriate compute platform: NVIDIA GPUs, public cloud, private cloud, and so forth. The point is that a single model in MATLAB can be moved to the appropriate compute platform, saving an enormous amount of development time and letting you leverage the right compute technology to accelerate training.

We also know that the broader deep learning community is incredibly active and prolific, and new models are coming out all the time. Because we support the ONNX standard, you have access to those new models and can work with them within the MATLAB environment.

To summarize the modeling step: within the MATLAB environment, you have access to all of the algorithms and methods used to develop models, you can work with them at the code level or through an app, you can accelerate training on the appropriate compute platform, and you can engage with the AI community through ONNX. Let's take a look at the modeling demo in MATLAB.

We're now going to train our YOLO v2 object detector model. First, we configure our project. Just as an FYI, we have some checkboxes here that let you do the training live or import the ONNX network live. For the purposes of the live demonstration, we're going to load a pre-trained version from disk, so that you don't have to sit and wait for the models to download and the training to finish.

So let's run this forward. Next, we're going to load the data set, which is the labeled ground truth output from the previous step. You'll notice in the output here that we didn't load the images themselves into workspace memory, just the image file names and the bounding boxes of where the vehicles are.

We're going to split our data into a training set for training the model and a test set for evaluating the detector once it's done. We'll use the partitioning function cvpartition, with 85% of the data for training and 15% for testing.
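
A random holdout split like the one described can be sketched in Python as follows. The function name `holdout_split` and the fixed seed are assumptions for illustration; MATLAB's cvpartition may round the test-set size differently.

```python
import random
from typing import List, Tuple


def holdout_split(n: int, test_fraction: float = 0.15,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly partition indices 0..n-1 into disjoint train and test lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # seeded, so the split is reproducible
    n_test = round(n * test_fraction)
    return sorted(indices[n_test:]), sorted(indices[:n_test])


train_idx, test_idx = holdout_split(300)  # 255 training images, 45 test images
```

Fixing the seed matters in practice: it keeps the test images identical between runs, so changes in the evaluation score reflect changes to the model rather than to the split.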

In the previous example, we showed how datastores are a great way of pointing to the data you'd like to build a model from, and they're a great way to work with large data sets. We're going to use datastores not only for the training and test images but also for the box labels. Within MATLAB, you'll see datastores throughout different workflows; they let you build these pointers so you can manage your data effectively, no matter its size.
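
Pairing an image source with a box-label source so that each read yields an image together with its boxes and labels can be sketched in Python as a lazy generator. The file names and boxes below are made up, and the (x, y, width, height) box layout is an assumption chosen to resemble MATLAB's convention.

```python
from typing import Iterator, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)
Sample = Tuple[str, List[Box], List[str]]


def combine(image_files: Sequence[str],
            boxes: Sequence[List[Box]],
            labels: Sequence[List[str]]) -> Iterator[Sample]:
    """Return a lazy reader yielding (image_file, boxes, labels), one per image."""
    if not (len(image_files) == len(boxes) == len(labels)):
        raise ValueError("all sources must have the same number of entries")

    def _reader() -> Iterator[Sample]:
        for img, bxs, lbls in zip(image_files, boxes, labels):
            if len(bxs) != len(lbls):
                raise ValueError(f"{img}: each box needs exactly one label")
            yield img, list(bxs), list(lbls)

    return _reader()


ds = combine(["img001.jpg", "img002.jpg"],
             [[(10, 20, 50, 30)], [(5, 5, 40, 25), (70, 15, 45, 30)]],
             [["vehicle"], ["vehicle", "vehicle"]])
first = next(ds)  # ("img001.jpg", [(10, 20, 50, 30)], ["vehicle"])
```

The length check runs eagerly so that mismatched sources fail immediately rather than partway through training.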

If I'd like to inspect that datastore, I can use the read command to show you what one of those images and its bounding boxes look like. I'll run that section, and you can see an image with a bounding box. OK, we've got our data ready. We're now going to create our YOLO v2 object detection network.

A YOLO v2 object detection network can be thought of as having two subnetworks: a feature extraction network followed by a detection network. The feature extraction network is typically a pre-trained model, such as ResNet-50. The detection network forms the final layers and is specific to YOLO v2.
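
To give a sense of what the detection subnetwork outputs, here is the decoding step from the original YOLO v2 paper. Each grid cell of the feature map predicts, for each anchor box, raw values (tx, ty, tw, th, to). These are turned into a box center, a size relative to the anchor, and an objectness score. The sketch works in grid-cell units and is based on the published formula, not on MATLAB's internal implementation; the function name is hypothetical.

```python
import math
from typing import Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_yolov2(t: Tuple[float, float, float, float, float],
                  cell: Tuple[int, int],
                  anchor: Tuple[float, float]) -> Tuple[float, float, float, float, float]:
    """Decode one raw YOLO v2 prediction into (bx, by, bw, bh, objectness).

    t      -- raw outputs (tx, ty, tw, th, to) for one anchor in one cell
    cell   -- (cx, cy), the column and row index of the grid cell
    anchor -- (pw, ph), the anchor width and height in grid-cell units
    (bx, by) is the box center; all coordinates are in grid-cell units.
    """
    tx, ty, tw, th, to = t
    cx, cy = cell
    pw, ph = anchor
    bx = sigmoid(tx) + cx   # sigmoid keeps the center inside its own cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)  # size is the anchor size scaled up or down
    bh = ph * math.exp(th)
    return bx, by, bw, bh, sigmoid(to)
```

To convert to pixels, multiply by the network's stride (input size divided by grid size). Because each prediction starts from an anchor shape, well-chosen anchors make the offsets easier to learn.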

The task at hand is transfer learning: we'll import a feature extraction network, choose a feature layer, delete the layers after it, and replace them with the YOLO v2 detection subnetwork.

So first, let's import the feature extraction network, ResNet-50. I could do this from the Deep Network Designer, so I'm going to launch one of those apps you saw in the video. In the Deep Network Designer, I could select one of the pre-trained networks, in this case the one I care about, ResNet-50. This nice gallery lets us select a pre-trained network and gives us an overview of what it can do.

However, for today's demonstration, we're not going to use the pre-trained network that you can quickly and easily install this way. Instead, we're going to import ResNet-50 from a GitHub location; I'll scroll down and show you where it is. The reason we're showing you this is to show that MATLAB plays nicely with open source. We're going to import a Python-based model from its GitHub URL and use the ONNX importer to bring it into MATLAB and start working with it.

So here, you can see that I'm importing this particular ONNX network from the GitHub location. I've loaded it in. If you want to check out what that network looks like, we can go back to the Deep Network Designer and load the network from the workspace. It finds only one network there, so I'll load in the base network. It's importing now. I'll zoom out: there's ResNet-50, with a lot of layers.

So I'm going to zoom in so you can see them. Sorry, it takes a while to zoom in; these are the joys of doing this live on multiple screens. I'll zoom all the way in and then scroll across. OK, you can start to see some of these layers. We're going to delete some of these layers and replace them with the YOLO v2 blocks. If I scroll down here, you can see some of the YOLO v2 blocks.

Rather than do this interactively in front of you, which would involve a lot of clicking, dragging, and defining parameters, we're going to do it via commands. We're going to take the imported ONNX version of ResNet-50, remove all the layers beyond layer 140, and add in the YOLO v2 subnetwork, which we define here. You can see some of the detection layers being defined.
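
The edit described here (keep the layers up to a chosen feature layer, drop the rest, append detection layers) can be pictured as a list operation. In the Python sketch below, the network is a plain list of layer names. All names, including the feature layer and the detection-head layers, are hypothetical placeholders rather than the actual ResNet-50 or YOLO v2 layer names used in the demo.

```python
from typing import List, Sequence


def attach_detection_head(layers: Sequence[str], feature_layer: str,
                          head: Sequence[str]) -> List[str]:
    """Keep layers up to and including feature_layer, then append the head layers."""
    try:
        cut = list(layers).index(feature_layer)
    except ValueError:
        raise ValueError(f"feature layer {feature_layer!r} not found") from None
    return list(layers[:cut + 1]) + list(head)


backbone = ["input", "conv1", "res2_out", "res3_out", "res4_out",
            "res5_out", "pool", "fc", "softmax"]          # hypothetical names
yolo_head = ["yolo_conv1", "yolo_conv2", "yolo_transform", "yolo_output"]
detector_layers = attach_detection_head(backbone, "res4_out", yolo_head)
```

A real ResNet-50 is a graph with skip connections rather than a simple chain, so the actual edit removes every layer downstream of the cut point in that graph. The list version only illustrates the "truncate, then append" idea.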

For YOLO v2, we also have to define something called anchor boxes. This is specific to YOLO v2; you don't necessarily have to do this for other networks. I'm not going to go into the details of what the anchor boxes do, and there are a few great documentation pages you can refer to if you want to understand more. For now, you just need to know that YOLO v2 requires these anchor boxes to be defined.
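
Anchor boxes are a small set of prior box shapes (width, height) that the detector predicts adjustments from. One common way to choose them, used in the YOLO v2 paper, is k-means clustering of the labeled box sizes with 1 - IoU as the distance. MATLAB's estimateAnchorBoxes is documented as taking a k-means approach with an IoU-based distance, but the Python below is an independent sketch, not that function. It assumes a deterministic initialization and uses mean-based centroid updates.

```python
from typing import List, Sequence, Tuple

Size = Tuple[float, float]  # (width, height)


def iou_wh(a: Size, b: Size) -> float:
    """IoU of two boxes aligned at the same corner, so only their sizes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def kmeans_anchors(sizes: Sequence[Size], k: int, iters: int = 100) -> List[Size]:
    """Cluster box sizes using distance 1 - IoU; return k anchors sorted by area."""
    if not 0 < k <= len(sizes):
        raise ValueError("k must be between 1 and the number of boxes")
    # Deterministic init: k boxes evenly spaced in the list sorted by area.
    by_area = sorted(sizes, key=lambda s: s[0] * s[1])
    step = len(by_area) / k
    anchors = [by_area[int(i * step)] for i in range(k)]
    for _ in range(iters):
        clusters: List[List[Size]] = [[] for _ in range(k)]
        for s in sizes:
            # Highest IoU is the same as lowest distance 1 - IoU.
            best = max(range(k), key=lambda j: iou_wh(s, anchors[j]))
            clusters[best].append(s)
        # New anchor = mean width and height of its cluster (keep old if empty).
        new_anchors = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else anchors[j]
            for j, c in enumerate(clusters)
        ]
        if new_anchors == anchors:
            break
        anchors = new_anchors
    return sorted(anchors, key=lambda s: s[0] * s[1])
```

Using IoU rather than Euclidean distance keeps large boxes from dominating the clustering, since IoU is scale-relative.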

OK, so we go ahead and do this. These anchor boxes are used to define the last few layers we need for the YOLO v2 subnetwork. We add those in and connect them up. Going back into the Deep Network Designer, I'm going to load in the network we've just built together, and you'll see the YOLO v2 layers at the end. I'll try to do a bit better job zooming in. We deleted everything after layer 140, and you can start to see some of the YOLO v2 layers.

OK, so we've got our network ready for training. I could start the training process from the app, but in the interest of time, I'm going to show you what it looks like from the command window. We're also not going to do the training live, because it takes approximately five minutes with an NVIDIA Titan X GPU. But if you'd like to see what the training looks like, we just define the training options, and we have a one-liner for kicking off the training.

We'll load the version we trained earlier. Now we can test the model's accuracy. We've made a few of the test images available, and these interactive slider controls let me select some of those images and see how well the detector finds the vehicles in them. So I'll just go through. Let's see if I can find the one that has multiple cars.

There we go, image seven. Well, no, that was the one before; hopefully you saw that there were multiple cars it could detect. There we go. All right, testing on a few images isn't really enough. Testing your algorithm effectively ensures that its accuracy and precision are at the level you require once you move on to the next stage, which is deployment.

So we're going to evaluate our detector using the entire test set. We're going to go through all of the images and evaluate the detection precision, a metric that tells you how precise the trained model's detections are. I'm now running it over all of my test images. A particular plot called the precision-recall curve shows how well the trained model performs on the test data set.
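
A precision-recall curve for a detector is built by ranking detections by confidence. Each detection is matched to a not-yet-matched ground-truth box in the same image when their intersection over union (IoU) reaches a threshold. The sketch below is a simplified, single-class, greedy version of that procedure in Python. The 0.5 IoU threshold and the (x, y, width, height) box layout are assumptions, and this is not the code behind evaluateDetectionPrecision.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections: Sequence[Tuple[str, float, Box]],
                     ground_truth: Dict[str, List[Box]],
                     iou_threshold: float = 0.5) -> Tuple[List[float], List[float]]:
    """detections: (image_id, score, box). Returns one (precision, recall)
    point per detection, in descending-score order."""
    n_gt = sum(len(b) for b in ground_truth.values())
    matched = {img: [False] * len(b) for img, b in ground_truth.items()}
    tp = fp = 0
    precision: List[float] = []
    recall: List[float] = []
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        # Find the best-overlapping ground-truth box not already matched.
        best_j, best_iou = -1, iou_threshold
        for j, gt in enumerate(ground_truth.get(img, [])):
            if not matched[img][j]:
                o = iou(box, gt)
                if o >= best_iou:
                    best_j, best_iou = j, o
        if best_j >= 0:
            matched[img][best_j] = True
            tp += 1
        else:
            fp += 1  # no match, or a duplicate of an already-matched object
        precision.append(tp / (tp + fp))
        recall.append(tp / n_gt if n_gt else 0.0)
    return precision, recall
```

Precision answers "how many detections were real vehicles?" and recall answers "how many real vehicles were found?". Sweeping down the confidence ranking traces out the trade-off between the two.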

Once again, if you'd like to know more about the precision-recall curve, see our documentation for the evaluateDetectionPrecision function. In short, if the precision-recall curve has a nice, sharp, square boundary, with precision staying high as recall increases, you're doing pretty well. And we've got an average precision close to 0.9.
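
Average precision summarizes the precision-recall curve as a single number, roughly the area under it. That is why a "square" curve, with precision near 1 all the way out to high recall, gives an AP near 1. The Python sketch below uses one common definition, the all-point interpolation from the PASCAL VOC evaluation, in which precision is first made non-increasing from right to left. The exact interpolation MATLAB uses may differ, so treat this as an illustration of the idea.

```python
from typing import Sequence


def average_precision(precision: Sequence[float], recall: Sequence[float]) -> float:
    """Area under an interpolated precision-recall curve (all-point interpolation).

    precision, recall -- one point per ranked detection, recall non-decreasing.
    """
    if len(precision) != len(recall):
        raise ValueError("precision and recall must have the same length")
    # Pad with sentinel points at recall 0 and recall 1.
    r = [0.0] + list(recall) + [1.0]
    p = [0.0] + list(precision) + [0.0]
    # Interpolate: precision at recall r becomes the max precision at any recall >= r.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))
```

For example, a detector whose precision stays at 1.0 until every vehicle is found scores an AP of 1.0. Any false positives ranked above true positives pull the curve, and the AP, down.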

So to summarize, in this particular demo, we loaded and prepared our data for training. We built our own custom network from two subnetworks pieced together, which you could do in the Deep Network Designer app, and we also showed you the commands to do it. After that, we trained the model and then tested the trained model on the test data set.

Let's now move back to the slides. Deep learning models have to be incorporated into a larger system to be useful. Consider automated driving. There's a lot of algorithm work around perception systems: designing perception algorithms that collect data from multiple sensors and fuse that data into an understanding of what surrounds a vehicle.

But for those algorithms to be effective, they have to integrate with algorithms for localization, path planning, agent environment management, and controls, all working together to make sure the vehicle accelerates, decelerates, brakes, and steers perfectly in any situation. This can all be accomplished with an overall system simulation that handles not just the perception algorithm but the entire vehicle itself.

Other vehicles and actors, such as pedestrians, across multiple driving scenarios and in multiple weather and environmental conditions, can all be handled through simulation based on MATLAB and Simulink. In the example I just showed, we performed a small part of that testing when we tested the trained model on images.

For deployment, we've got a unique code generation framework that allows models developed in MATLAB to be deployed anywhere without having to rewrite the original model. This gives you the ability to test and deploy the entire system and not just the model itself.

MATLAB can generate native optimized code from multiple frameworks. This gives you the flexibility to deploy to lightweight, low-power embedded devices, such as those used in cars; to low-cost rapid-prototyping boards, such as Raspberry Pi; and to edge-based IoT applications, such as sensors and controllers used on machinery or equipment in a factory.

MATLAB can also deploy to desktop or server environments, which lets you scale from desktop executables to cloud-based enterprise systems on AWS or Azure. Automatic code generation eliminates hand-coding errors and is an enormous value driver for any organization that adopts it. The power and flexibility of our code generation and deployment frameworks is unmatched.

So let's pause here, and I'll ask you: do you have a strategy for deployment? If so, what forms have you considered? We'll now move on to the deployment demo, where I'll show you how to take your trained model and generate embedded code for the appropriate platform.

In this particular example, we're going to generate optimized C++ code for my CPU. However, you could change this to generate optimized CUDA code for an embedded GPU or some of those other platforms. Because I'm running this on my PC, I'll show you how to do it on the CPU.

We're just going to get the YOLO v2 network. Just loading that in. As part of the code generation process, you will have to write an entry point function. So let's go ahead and look at the entry point function, just so you can see that it doesn't require that much work.

We have a function definition with our input image going in and our output image going out. The input image is the image with nothing detected; the output image is the same image with a bounding box around the vehicle. We use a coder command, coder.loadDeepLearningNetwork, to load the pre-trained YOLO v2 detector, ready for code generation. We then call detect on that loaded object and add the detected bounding boxes to the output image.
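
The structure of this entry-point function (load the detector once, then detect and annotate on every call) can be mirrored in Python as below. This only illustrates the pattern. `load_detector` and the detector's `detect` method are hypothetical stand-ins, not MATLAB APIs, and "drawing" a box means writing a marker value into a 2-D list of pixels. The sketch assumes every box lies inside the image. In MATLAB code-generation examples, a persistent variable typically plays the role of the cached detector.

```python
from typing import Callable, List, Optional, Protocol, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height), 0-based pixel coordinates
Image = List[List[int]]


class Detector(Protocol):
    def detect(self, image: Image) -> Sequence[Box]: ...


_detector: Optional[Detector] = None  # cached across calls, loaded on first use


def entry_point(image: Image, load_detector: Callable[[], Detector],
                marker: int = 255) -> Image:
    """Detect objects in `image` and return a copy with box outlines drawn."""
    global _detector
    if _detector is None:
        _detector = load_detector()  # the expensive load happens only once
    out = [row[:] for row in image]  # leave the input image untouched
    for x, y, bw, bh in _detector.detect(image):
        x2, y2 = x + bw - 1, y + bh - 1  # assumes the box lies inside the image
        for cx in range(x, x2 + 1):      # top and bottom edges
            out[y][cx] = marker
            out[y2][cx] = marker
        for cy in range(y, y2 + 1):      # left and right edges
            out[cy][x] = marker
            out[cy][x2] = marker
    return out
```

Caching the loaded network is the key design choice: when this function is called once per video frame, reloading the weights on every call would dominate the run time.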

So the entry point function is really just a normal function that you would write to call your pre-trained deep learning detector, with a couple of code generation-specific additions.

OK, so once you've developed this entry point function, the next step is to generate the optimized C++ code, and this is what it looks like. A few commands define the configuration: the output type, the target language, whether to include MATLAB source code as comments in the C++ code, and some other deep learning settings. Then we run codegen.

We also have a code generation app if you'd like to use that instead; for now, I'm just highlighting the commands. Generating the code takes a few moments, so I'll show you what the output looks like. The main thing to look for is the MEX file, which is the file we're going to call to run the C++ code. What does the full code base look like?

So here in my workspace is all the code that was generated. It looks like there's quite a bit. For that reason, the code generation process outputs a report, which, when I open it, is a nice way to navigate the generated code. Here you can see the MATLAB function we're generating the C++ code from, and a summary of the code generation. We can also see any errors reported there and debug, if necessary.

I'll scroll down to the YOLO v2 detection C++ code, or rather the MEX code if you want to see it. This is the MEX source code for calling it. If I scroll down, we've got some functions that will be called for YOLO v2 network detection. Let me give you a glance of what that looks like.

So here we are in the YOLO v2 network, and here are all of the lines of code that have been automatically generated for YOLO v2 in C++. It generates all of that automatically for you, in a nice report. Now, I'd like to test this automatically generated code.

I have an MP4 video of a car driving on a highway, so let's load that into MATLAB. We're going to loop through the frames, run the YOLO v2 MEX file that we just built, and see this detector in action.

The detector will kick in once the car reaches an appropriate distance. You can see that there's a vehicle. We'll let it run for a few moments longer. You can see that it's detecting the vehicle, and when we change lanes, it starts detecting more vehicles, like so. So we now have a C++ version of our detector running against video.

We'll now move on to MathWorks deep learning support. Beyond performing the workflow in MATLAB, MathWorks is your partner that can enable you to take advantage of disruptive technologies, like deep learning. Your success is our success. And we plan to partner with you as you look to develop your deep learning driven systems.

MathWorks has engineers who can help you easily onboard new hires from different industries or new college graduates. Through our on-site workshops, we have engineers who can provide hands-on sessions demonstrating a variety of technical concepts. Through our guided evaluations, we can work with you and your data to show how deep learning could unlock value within your organization. And through our consulting services, we can customize services based on your needs to optimize your investment and ensure your success.

Through our technical support, we take your critical feedback so that we can provide you with the fastest, most productive engineering platform available. Please let us know if you're interested in engaging further on any of these options.

To summarize why to choose MATLAB and MathWorks for deep learning: MATLAB is focused on engineering and science workflows. MATLAB is a platform that covers the entire workflow, where users can improve productivity with interactive apps that expedite analysis and generate reusable code. Models can be deployed anywhere, from embedded to cloud systems.

MATLAB offers interoperability with open source frameworks such as TensorFlow and PyTorch, and users have access to support from experienced MathWorks engineers in development, training, and consulting.

If you'd like to take the next step with us, here are a number of resources for further learning. We have a free two-hour online tutorial, called an Onramp, to help get you started. It doesn't require a MATLAB license: you simply go to the Onramp, click, and it launches in your browser in a nice, interactive, easy-to-follow way.

For larger groups, we can also deliver a deep learning workshop, where you'll get an online MATLAB session with a GPU, so it goes into a bit more depth. Right now, we're offering it virtually, and a number of you have already signed up for this online workshop through that registration link.

For extended learning, we have a 16-hour in-depth course, available self-paced online as well as instructor-led. For teaching, we've worked with leading universities and colleges to support them in developing deep learning curricula. Visit this page to see already-developed courses, and reach out to us if you're interested in collaborating on your own course development.

And finally, where can you get the content we showed today? Here's a URL for the GitHub repo, where you can download both the presentation as a PDF and the demonstration code, so you can run the code yourself and explore its details. You can open it via this short URL, or if you take a photo of the slide with your phone, it will also launch the GitHub page you see here on the right.

If all of this is a bit too complicated, simply search Google for MATLAB deep learning GitHub, and you'll find a library of all of our deep learning repos; look for the one that says object detection.

One thing I'll personally ask: if you liked the content and the presentation, please star the repo on GitHub. That would be a nice way to say thank you. At this point, we're at the end of the presentation. I'd like to say thank you for coming.