An Expert’s Guide to Using MATLAB and Simulink for FPGA and SoC Design

Overview


Join us to learn how Adam Taylor, a noted expert in embedded systems and FPGAs, uses MATLAB and Simulink with C and HDL code generation to design and develop space and automotive applications.

Using Model-Based Design, Adam will walk through the workflow from algorithm development to hardware implementation, demonstrating how to optimize implementations and reduce development time. Attendees will learn how to use modeling and simulation in MATLAB and Simulink to speed up development by identifying design issues early in the design cycle, and then how to use automatic C and HDL code generation from models to deploy designs to FPGA and SoC hardware. Adam will also cover how to use automated methods to verify that implementations satisfy project requirements.

Highlights

In this webinar, you will learn how to:

- Apply Model-Based Design to modeling and simulation of digital designs for targeting FPGA and SoC hardware.

- Analyze hardware designs and optimize latency, throughput, and resource usage.

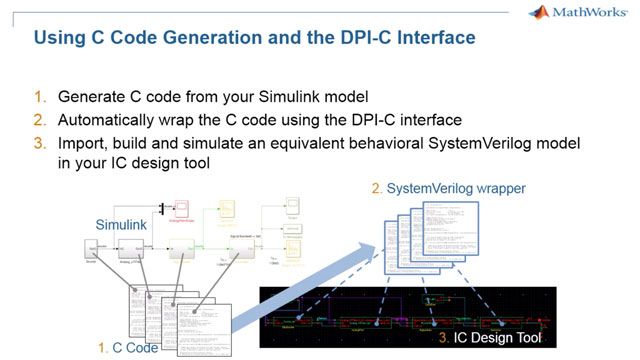

- Generate readable, synthesizable VHDL, Verilog, and SystemVerilog for FPGA and SoC implementation.

- Deploy on FPGA and SoC hardware.

- Test and verify hardware implementations.

About the Presenters

Adam Taylor

Founder and Principal Consultant, Adiuvo Engineering

Adam Taylor is a chartered engineer and fellow of the Institution of Engineering and Technology, based in Harlow, United Kingdom. Over a multi-decade career, he has worked in both the public and private sectors, developing FPGA-based solutions for applications including radar, nuclear reactors, satellites, cryptography, and image processing. Education is his deepest passion, and he has delivered thousands of hours of training to corporate clients and hardware hobbyists alike.

Adam is also the author of the MicroZed Chronicles, a weekly blog on FPGA and SoC development.

Stephan van Beek

Application Engineering Specialist EMEA for FPGA/SoC/ASIC, MathWorks

Stephan van Beek has over 15 years of experience at MathWorks in Eindhoven as a technical specialist in systems engineering and embedded systems, with a focus on FPGA and SoC design. Stephan works with customers across Europe to apply the principles of Model-Based Systems Engineering with Model-Based Design.

Prior to joining MathWorks, Stephan was a member of the electronic design methodology team at Océ-Nederland, where he focused on improving design workflows. He also worked at Anorad Europe BV on motion control systems. Stephan earned his B.Sc. degree in Electrical Engineering from the Eindhoven University of Applied Sciences.

Tom Richter

Application Engineering Specialist EMEA for FPGA/SoC/ASIC, MathWorks

Tom Richter joined MathWorks in Germany in 2011, where he worked for 10 years as a Training Engineer for Model-Based Design, signal processing, communications, and code generation. With a focus on ASIC and FPGA design, he developed MathWorks training courses for HDL code generation and hardware/software co-design. In 2021, Tom became an application engineer specializing in HDL and System-on-Chip applications, supporting a wide variety of customers and projects.

Tom holds a Master of Engineering degree from the University of Ulster in Belfast and a Diploma of Electrical Engineering from the University of Applied Sciences in Augsburg.

Recorded: 29 Oct 2024